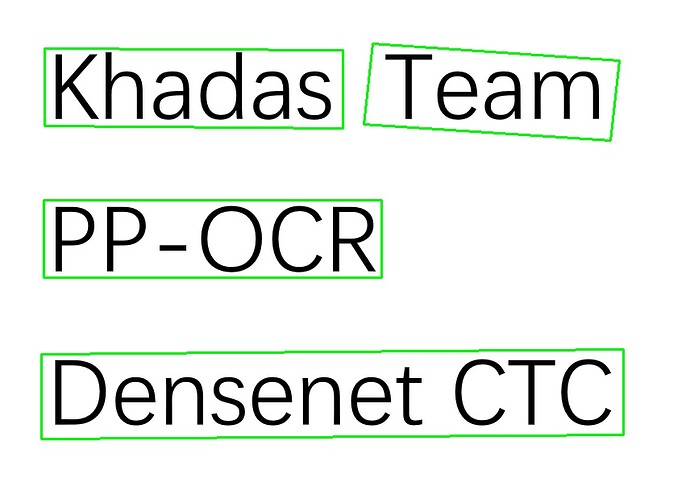

Hi Louis, I have tried using the C++ demo to do real-time text recognition. I'm using your DenseNet CTC demo with cv::VideoCapture and running postprocess_densenet_ctc on each frame, but I get a "set input size too big, please check it" error.

My Code:

/****************************************************************************

*

* Copyright (c) 2019 by amlogic Corp. All rights reserved.

*

* The material in this file is confidential and contains trade secrets

* of amlogic Corporation. No part of this work may be disclosed,

* reproduced, copied, transmitted, or used in any way for any purpose,

* without the express written permission of amlogic Corporation.

*

***************************************************************************/

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include "nn_sdk.h"

#include "nn_util.h"

#include "postprocess_util.h"

#include <opencv2/objdetect/objdetect.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/imgcodecs.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/imgproc/types_c.h>

#include <opencv2/opencv.hpp>

#include <opencv2/imgproc/imgproc_c.h>

#include <getopt.h>

#include <sys/time.h>

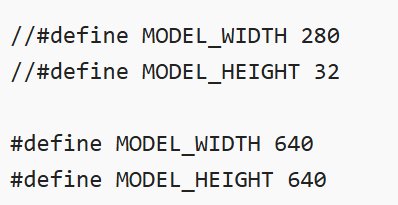

//#define MODEL_WIDTH 280

//#define MODEL_HEIGHT 32

#define MODEL_WIDTH 640

#define MODEL_HEIGHT 640

#define DEFAULT_WIDTH 1280

#define DEFAULT_HEIGHT 720

int default_width = DEFAULT_WIDTH;

int default_height = DEFAULT_HEIGHT;

struct option longopts[] = {

{ "model", required_argument, NULL, 'm' },

{ "width", required_argument, NULL, 'w' },

{ "height", required_argument, NULL, 'h' },

{ "help", no_argument, NULL, 'H' },

{ 0, 0, 0, 0 }

};

nn_input inData;

cv::Mat img;

aml_module_t modelType;

static int input_width,input_high,input_channel;

typedef enum _amlnn_detect_type_ {

Accuracy_Detect_Yolo_V3 = 0

} amlnn_detect_type;

void* init_network_file(const char *mpath) {

void *qcontext = NULL;

aml_config config;

memset(&config, 0, sizeof(aml_config));

config.nbgType = NN_ADLA_FILE;

config.path = mpath;

config.modelType = ADLA_LOADABLE;

config.typeSize = sizeof(aml_config);

qcontext = aml_module_create(&config);

if (qcontext == NULL) {

printf("amlnn_init is fail\n");

return NULL;

}

if (config.nbgType == NN_ADLA_MEMORY && config.pdata != NULL) {

free((void*)config.pdata);

}

return qcontext;

}

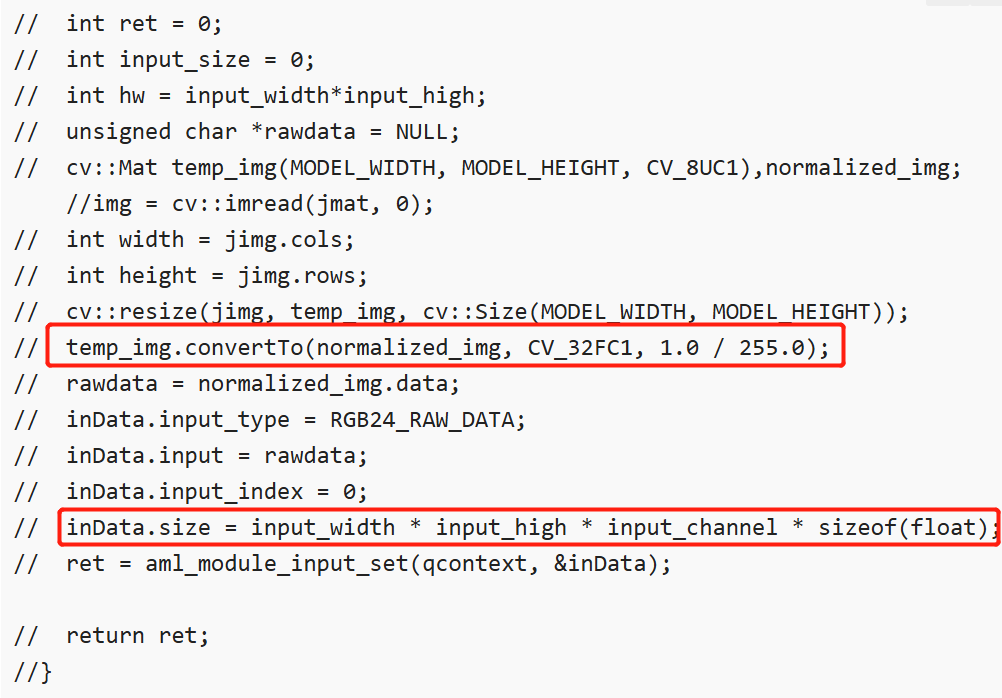

//int set_input(void *qcontext, const cv::Mat jimg) {

// int ret = 0;

// int input_size = 0;

// int hw = input_width*input_high;

// unsigned char *rawdata = NULL;

// cv::Mat temp_img(MODEL_WIDTH, MODEL_HEIGHT, CV_8UC1),normalized_img;

//img = cv::imread(jmat, 0);

// int width = jimg.cols;

// int height = jimg.rows;

// cv::resize(jimg, temp_img, cv::Size(MODEL_WIDTH, MODEL_HEIGHT));

// temp_img.convertTo(normalized_img, CV_32FC1, 1.0 / 255.0);

// rawdata = normalized_img.data;

// inData.input_type = RGB24_RAW_DATA;

// inData.input = rawdata;

// inData.input_index = 0;

// inData.size = input_width * input_high * input_channel * sizeof(float);

// ret = aml_module_input_set(qcontext, &inData);

// return ret;

//}

int run_network(void *qcontext) {

//int ret = 0;

//nn_output *outdata = NULL;

//aml_output_config_t outconfig;

//memset(&outconfig, 0, sizeof(aml_output_config_t));

//outconfig.format = AML_OUTDATA_RAW;//AML_OUTDATA_RAW or AML_OUTDATA_FLOAT32

//outconfig.typeSize = sizeof(aml_output_config_t);

//outdata = (nn_output*)aml_module_output_get(qcontext, outconfig);

//if (outdata == NULL) {

// printf("aml_module_output_get error\n");

// return -1;

//}

//char result[35] = {0};

//int result_len = 0;

//postprocess_densenet_ctc(outdata, result, &result_len);

//printf("%d\n", result_len);

//printf("%s\n", result);

//return ret;

int ret = 0;

int frames = 0;

nn_output *outdata = NULL;

struct timeval time_start, time_end;

float total_time = 0;

aml_output_config_t outconfig;

memset(&outconfig, 0, sizeof(aml_output_config_t));

outconfig.format = AML_OUTDATA_FLOAT32;//AML_OUTDATA_RAW or AML_OUTDATA_FLOAT32

outconfig.typeSize = sizeof(aml_output_config_t);

outconfig.order = AML_OUTPUT_ORDER_NCHW;

obj_detect_out_t yolov3_detect_out;

cv::namedWindow("Image Window");

int input_size = 0;

int hw = input_width*input_high;

unsigned char *rawdata = NULL;

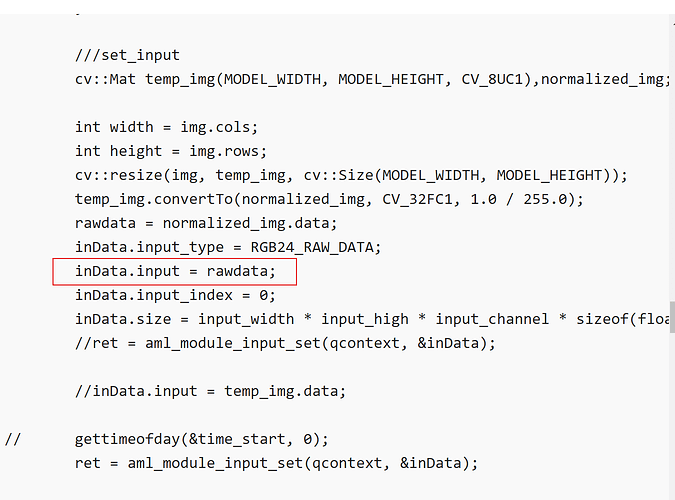

cv::Mat temp_img(MODEL_WIDTH, MODEL_HEIGHT, CV_8UC1);

inData.input_type = RGB24_RAW_DATA;

inData.input_index = 0;

inData.size = input_width * input_high * input_channel;

std::string pipeline = std::string("v4l2src device=/dev/media0 io-mode=mmap ! video/x-raw,format=NV12,width=") + std::to_string(default_width) + std::string(",height=") + std::to_string(default_height) + std::string(",framerate=30/1 ! videoconvert ! appsink");

cv::VideoCapture cap(pipeline);

gettimeofday(&time_start, 0);

if (!cap.isOpened()) {

std::cout << "capture device failed to open!" << std::endl;

cap.release();

exit(-1);

}

while(1) {

gettimeofday(&time_start, 0);

if (!cap.read(img)) {

std::cout<<"Capture read error"<<std::endl;

break;

}

printf("Input");

cv::resize(img, temp_img, cv::Size(MODEL_WIDTH, MODEL_HEIGHT));

cv::cvtColor(temp_img, temp_img, cv::COLOR_RGB2BGR);

inData.input = temp_img.data;

// gettimeofday(&time_start, 0);

ret = aml_module_input_set(qcontext, &inData);

if (ret != 0) {

printf("set_input fail.\n");

return -1;

}

outdata = (nn_output*)aml_module_output_get(qcontext, outconfig);

if (outdata == NULL) {

printf("aml_module_output_get error\n");

return -1;

}

printf("Output");

++frames;

gettimeofday(&time_end, 0);

total_time += (float)((time_end.tv_sec - time_start.tv_sec) + (time_end.tv_usec - time_start.tv_usec) / 1000.0f / 1000.0f);

if (total_time >= 1.0f) {

int fps = (int)(frames / total_time);

printf("Inference FPS: %i\n", fps);

frames = 0;

total_time = 0;

}

char result[35] = {0};

int result_len = 0;

printf("Start DenseNet CTC");

postprocess_densenet_ctc(outdata, result, &result_len);

printf("Result of DenseNet CTC");

printf("%d\n", result_len);

printf("%s\n", result);

cv::imshow("Image Window",img);

cv::waitKey(1);

}

return ret;

}

int destroy_network(void *qcontext) {

int ret = aml_module_destroy(qcontext);

return ret;

}

int main(int argc,char **argv)

{

int c;

int ret = 0;

void *context = NULL;

char *model_path = NULL;

//char *input_data = NULL;

input_width = MODEL_WIDTH;

input_high = MODEL_HEIGHT;

//input_channel = 1;

input_channel = 3;

while ((c = getopt_long(argc, argv, "m:w:h:H", longopts, NULL)) != -1) {

switch (c) {

case 'w':

default_width = atoi(optarg);

break;

case 'h':

default_height = atoi(optarg);

break;

case 'm':

model_path = optarg;

break;

//case 'p':

// input_data = optarg;

// break;

default:

printf("%s[-m model path] [-w camera width] [-h camera height] [-H]\n", argv[0]);

exit(1);

}

}

context = init_network_file(model_path);

if (context == NULL) {

printf("init_network fail.\n");

return -1;

}

//ret = set_input(context, input_data);

//if (ret != 0) {

// printf("set_input fail.\n");

// return -1;

//}

ret = run_network(context);

if (ret != 0) {

printf("run_network fail.\n");

return -1;

}

ret = destroy_network(context);

if (ret != 0) {

printf("destroy_network fail.\n");

return -1;

}

return ret;

}

The Error I Got:

QStandardPaths: XDG_RUNTIME_DIR not set, defaulting to '/tmp/runtime-root'

WARNING: Some incorrect rendering might occur because the selected Vulkan device (Mali-G52) doesn't support base Zink requirements: feats.features.logicOp feats.features.fillModeNonSolid feats.features.shaderClipDistance

[API:aml_v4l2src_connect:271]Enter, devname : /dev/media0

func_name: aml_src_get_cam_method

initialize func addr: 0x7f6c9f167c

finalize func addr: 0x7f6c9f1948

start func addr: 0x7f6c9f199c

stop func addr: 0x7f6c9f1a4c

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 1, type 0x20000, name isp-csiphy), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 4, type 0x20000, name isp-adapter), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 7, type 0x20000, name isp-test-pattern-gen), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 9, type 0x20000, name isp-core), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 20, type 0x20001, name imx415-0), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 24, type 0x10001, name isp-ddr-input), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 28, type 0x10001, name isp-param), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 32, type 0x10001, name isp-stats), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 36, type 0x10001, name isp-output0), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 40, type 0x10001, name isp-output1), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 44, type 0x10001, name isp-output2), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 48, type 0x10001, name isp-output3), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id 52, type 0x10001, name isp-raw), ret 0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:235:carm_src_is_usb]carm_src_is_usb:info(id -2147483596, type 0x0, name ), ret -1

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:239:carm_src_is_usb]carm_src_is_usb:error Invalid argument

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:79:cam_src_select_socket]select socket:/tmp/camctrl0.socket

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:103:cam_src_obtain_devname]fork ok, pid:7369

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:103:cam_src_obtain_devname]fork ok, pid:0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camsrc.c:107:cam_src_obtain_devname]execl /usr/bin/camctrl

[2024-10-22 07:42:39] DEBUG [amlv4l2src camctrl.cc:925:main][camctrl.cc:main:925]

[2024-10-22 07:42:39] DEBUG [amlv4l2src camctrl.cc:889:parse_opt]media device name: /dev/media0

[2024-10-22 07:42:39] DEBUG [amlv4l2src camctrl.cc:898:parse_opt]Server socket: /tmp/camctrl0.socket

Opening media device /dev/media0

Enumerating entities

Found 13 entities

Enumerating pads and links

mediaStreamInit[35]: mediaStreamInit ++.

mediaStreamInit[39]: media devnode: /dev/media0

mediaStreamInit[56]: ent 0, name isp-csiphy

mediaStreamInit[56]: ent 1, name isp-adapter

mediaStreamInit[56]: ent 2, name isp-test-pattern-gen

mediaStreamInit[56]: ent 3, name isp-core

mediaStreamInit[56]: ent 4, name imx415-0

mediaStreamInit[56]: ent 5, name isp-ddr-input

mediaStreamInit[56]: ent 6, name isp-param

mediaStreamInit[56]: ent 7, name isp-stats

mediaStreamInit[56]: ent 8, name isp-output0

mediaStreamInit[56]: ent 9, name isp-output1

mediaStreamInit[56]: ent 10, name isp-output2

mediaStreamInit[56]: ent 11, name isp-output3

mediaStreamInit[56]: ent 12, name isp-raw

mediaStreamInit[96]: get lens_ent fail

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video63 ok, fd 5

mediaStreamInit[151]: mediaStreamInit open video0 fd 5

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video64 ok, fd 6

mediaStreamInit[155]: mediaStreamInit open video1 fd 6

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video65 ok, fd 7

mediaStreamInit[159]: mediaStreamInit open video2 fd 7

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video66 ok, fd 8

mediaStreamInit[163]: mediaStreamInit open video3 fd 8

mediaStreamInit[172]: media stream init success

fetchPipeMaxResolution[27]: find matched sensor configs 3840x2160

media_set_wdrMode[420]: media_set_wdrMode ++ wdr_mode : 0

media_set_wdrMode[444]: media_set_wdrMode success --

media_set_wdrMode[420]: media_set_wdrMode ++ wdr_mode : 4

media_set_wdrMode[444]: media_set_wdrMode success --

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:374:link_and_activate_subdev]link and activate subdev successfully

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:407:media_stream_config]config media stream successfully

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video62 ok, fd 13

mediaLog[30]: VIDIOC_QUERYCAP: success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:172:check_capability]entity[isp-stats] -> video[/dev/video62], cap.driver:aml-camera, capabilities:0x85200001, device_caps:0x5200001

mediaLog[30]: v4l2_video_open: open subdev device node /dev/video61 ok, fd 14

mediaLog[30]: VIDIOC_QUERYCAP: success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:172:check_capability]entity[isp-param] -> video[/dev/video61], cap.driver:aml-camera, capabilities:0x85200001, device_caps:0x5200001

mediaLog[30]: set format ok, ret 0.

mediaLog[30]: set format ok, ret 0.

mediaLog[30]: request buf ok

mediaLog[30]: request buf ok

mediaLog[30]: query buffer success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:546:isp_alg_param_init]isp stats query buffer, length: 262144, offset: 0

mediaLog[30]: query buffer success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:546:isp_alg_param_init]isp stats query buffer, length: 262144, offset: 262144

mediaLog[30]: query buffer success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:546:isp_alg_param_init]isp stats query buffer, length: 262144, offset: 524288

mediaLog[30]: query buffer success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:546:isp_alg_param_init]isp stats query buffer, length: 262144, offset: 786432

mediaLog[30]: query buffer success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:568:isp_alg_param_init]isp param query buffer, length: 262144, offset: 0

alg2User func addr: 0x7fab7f8ed8

alg2Kernel func addr: 0x7fab7f8f08

algEnable func addr: 0x7fab7f8d70

algDisable func addr: 0x7fab7f8e90

algFwInterface func addr: 0x7fab7f9008

matchLensConfig[43]: LKK: fail to match lensConfig

cmos_get_ae_default_imx415[65]: cmos_get_ae_default

cmos_get_ae_default_imx415[116]: cmos_get_ae_default++++++

cmos_get_ae_default_imx415[65]: cmos_get_ae_default

cmos_get_ae_default_imx415[116]: cmos_get_ae_default++++++

aisp_enable[984]: tuning device not exist!

aisp_enable[987]: 3a commit b56e430e80b995bb88cecff66a3a6fc17abda2c7

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 16351232, 0, 0, 0

mediaLog[30]: streamon success

mediaLog[30]: streamon success

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:650:isp_alg_param_init]Finish initializing amlgorithm parameter ...

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:971:main]UNIX domain socket bound

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:977:main]Accepting connections ...

[2024-10-22 07:42:40] DEBUG [amlv4l2src camsrc.c:122:cam_src_obtain_devname]udp_sock_create

[2024-10-22 07:42:40] DEBUG [amlv4l2src common/common.c:70:udp_sock_create][453321940][/tmp/camctrl0.socket] start connect

[2024-10-22 07:42:40] DEBUG [amlv4l2src camsrc.c:124:cam_src_obtain_devname]udp_sock_recv

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:985:main]connected_sockfd: 20

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:989:main]video_dev_name: /dev/video63

[2024-10-22 07:42:40] DEBUG [amlv4l2src camsrc.c:282:cam_src_initialize]obtain devname: /dev/video63

devname : /dev/video63

driver : aml-camera

device : Amlogic Camera Card

bus_info : platform:aml-cam

version : 331657

error tvin-port use -1

[API:aml_v4l2src_streamon:373]Enter

[2024-10-22 07:42:40] DEBUG [amlv4l2src camsrc.c:298:cam_src_start]start ...

[API:aml_v4l2src_streamon:376]Exit

[2024-10-22 07:42:40] DEBUG [amlv4l2src camctrl.cc:860:process_socket_thread]receive streamon notification

cmos_again_calc_table_imx415[125]: cmos_again_calc_table: 1836, 1836

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 11046912, 11046912, 11046912, 11046912

cmos_again_calc_table_imx415[125]: cmos_again_calc_table: 0, 0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 14585856, 14585856, 14585856, 14585856

[ WARN:0@1.792] global ./modules/videoio/src/cap_gstreamer.cpp (1405) open OpenCV | GStreamer warning: Cannot query video position: status=0, value=-1, duration=-1

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 13967360, 13967360, 13967360, 13967360

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 13545472, 13545472, 13545472, 13545472

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 13185024, 13185024, 13185024, 13185024

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12959744, 12959744, 12959744, 12959744

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12816384, 12816384, 12816384, 12816384

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12697600, 12697600, 12697600, 12697600

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12693504, 12693504, 12693504, 12693504

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12689408, 12689408, 12689408, 12689408

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12685312, 12685312, 12685312, 12685312

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12681216, 12681216, 12681216, 12681216

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12677120, 12677120, 12677120, 12677120

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12673024, 12673024, 12673024, 12673024

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12668928, 12668928, 12668928, 12668928

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12664832, 12664832, 12664832, 12664832

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12660736, 12660736, 12660736, 12660736

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12656640, 12656640, 12656640, 12656640

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12652544, 12652544, 12652544, 12652544

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12648448, 12648448, 12648448, 12648448

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12644352, 12644352, 12644352, 12644352

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

cmos_inttime_calc_table_imx415[150]: cmos_inttime_calc_table: 12640256, 12640256, 12640256, 12640256

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

InputE NN_SDK:[aml_adla_inputs_set_off:812]Error: set input size too big, please check it! [1228800] : [35840]

OutputStart DenseNet CTCResult of DenseNet CTC0

The video is displayed in a window, but no text recognition happens.

How do I fix the "input size too big" error? Or is my approach wrong?
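For reference, the two numbers in the error line can be reproduced from the sizes that appear in the code above. This is only a sketch of the arithmetic. It assumes the first number is the value passed in inData.size and the second is the size the loaded model expects, which the log does not state explicitly. The helper `input_bytes` and the constant names are made up for illustration.

```cpp
#include <cstddef>

// Hypothetical helper: bytes needed for a densely packed input buffer.
constexpr std::size_t input_bytes(std::size_t width, std::size_t height,
                                  std::size_t channels,
                                  std::size_t bytes_per_element) {
    return width * height * channels * bytes_per_element;
}

// Size set in the capture loop: 640 x 640, 3 channels, one byte per channel.
constexpr std::size_t kSentBytes =
    input_bytes(640, 640, 3, sizeof(unsigned char));

// Size used by the commented-out set_input(): 280 x 32, 1 channel, float.
constexpr std::size_t kOriginalBytes = input_bytes(280, 32, 1, sizeof(float));

// Both values match the pair printed in the NN_SDK error message
// (this assumes a 4-byte float, which holds on common platforms).
static_assert(kSentBytes == 1228800, "first number in the error line");
static_assert(kOriginalBytes == 35840, "second number in the error line");
```

If that reading is right, the 640 x 640 x 3 buffer does not match a model built for a 280 x 32 single-channel float input, which is what the commented-out MODEL_WIDTH, MODEL_HEIGHT and input_channel values describe.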

Can anyone help me with this, or is there a ready-made demo I can use? I have been researching this for more than two weeks and still can't find a solution.
