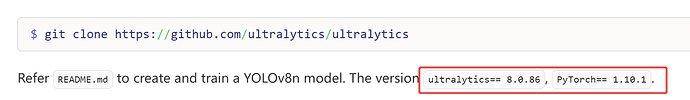

On the desktop I use for model conversion, I followed the instructions in the link. I updated Ultralytics and changed head.py per the instructions (the line numbers in the version pip installed on my machine were different, probably because it is a different version). When I tried to export the YOLO11 model to ONNX format, I got:

    ONNX: starting export with onnx 1.17.0 opset 19...
    ONNX: export failure ❌ 0.2s: expected Tensor as element 0 in argument 0, but got tuple
    Traceback (most recent call last):
      File "onnxexport.py", line 3, in <module>
        results = model.export(format="onnx")
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/engine/model.py", line 728, in export
        return Exporter(overrides=args, _callbacks=self.callbacks)(model=self.model)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/engine/exporter.py", line 433, in __call__
        f[2], _ = self.export_onnx()
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/engine/exporter.py", line 181, in outer_func
        raise e
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/engine/exporter.py", line 176, in outer_func
        f, model = inner_func(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/engine/exporter.py", line 559, in export_onnx
        torch.onnx.export(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/onnx/utils.py", line 551, in export
        _export(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/onnx/utils.py", line 1648, in _export
        graph, params_dict, torch_out = _model_to_graph(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/onnx/utils.py", line 1170, in _model_to_graph
        graph, params, torch_out, module = _create_jit_graph(model, args)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/onnx/utils.py", line 1046, in _create_jit_graph
        graph, torch_out = _trace_and_get_graph_from_model(model, args)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/onnx/utils.py", line 950, in _trace_and_get_graph_from_model
        trace_graph, torch_out, inputs_states = torch.jit._get_trace_graph(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/jit/_trace.py", line 1497, in _get_trace_graph
        outs = ONNXTracedModule(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1562, in _call_impl
        return forward_call(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/jit/_trace.py", line 141, in forward
        graph, out = torch._C._create_graph_by_tracing(
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/jit/_trace.py", line 132, in wrapper
        outs.append(self.inner(*trace_inputs))
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1562, in _call_impl
        return forward_call(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1543, in _slow_forward
        result = self.forward(*input, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/nn/tasks.py", line 114, in forward
        return self.predict(x, *args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/nn/tasks.py", line 132, in predict
        return self._predict_once(x, profile, visualize, embed)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/nn/tasks.py", line 153, in _predict_once
        x = m(x) # run
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1553, in _wrapped_call_impl
        return self._call_impl(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1562, in _call_impl
        return forward_call(*args, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1543, in _slow_forward
        result = self.forward(*input, **kwargs)
      File "/home/marc/src/yolo11n-pose/yoloconv/lib/python3.8/site-packages/ultralytics/nn/modules/head.py", line 261, in forward
        return torch.cat([x, pred_kpt], 1) if self.export else (torch.cat([x[0], pred_kpt], 1), (x[1], kpt))
    TypeError: expected Tensor as element 0 in argument 0, but got tuple
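
The last frame suggests that, in export mode, `x` is a tuple at head.py line 261 rather than a single tensor, so `torch.cat([x, pred_kpt], 1)` receives a tuple as its first list element. I have not confirmed this. One way to check would be a small helper that prints the nested structure of `x` just before that line. The sketch below is only an illustration: `describe` is a hypothetical name of my own, not part of Ultralytics or PyTorch, and it relies only on objects exposing a `shape` attribute the way tensors do.

```python
def describe(value, depth=0, max_depth=3):
    """Return a short description of a value's nested container structure.

    Hypothetical debugging helper (not an Ultralytics or PyTorch function).
    Tuples and lists are expanded recursively; anything with a `shape`
    attribute (such as a tensor) is shown with that shape.
    """
    if isinstance(value, (tuple, list)) and depth < max_depth:
        inner = ", ".join(describe(v, depth + 1, max_depth) for v in value)
        return f"{type(value).__name__}[{inner}]"
    name = type(value).__name__
    shape = getattr(value, "shape", None)
    # Show the shape for tensor-like objects, otherwise just the type name.
    return f"{name}{tuple(shape)}" if shape is not None else name
```

Temporarily adding `print(describe(x), describe(pred_kpt))` immediately before the `return` in the patched `forward` would show whether `x` is a plain tensor or a tuple while `self.export` is true.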

I’ve done some googling but haven’t found any answers. Do you know what this might mean?

Thanks