@Ehsan At present, Google AOSP does not support the NPU.

Do you have any good suggestions for using a serial port under Android?

Using a serial port under AOSP? What functionality do you mainly need?

I have some modules with serial interfaces, and I want to communicate with them directly from Android.

You can also reconfigure I2C_A (GPIO22/23) as a UART (/dev/ttyS2):

common/arch/arm/boot/dts/amlogic/kvim.dts

+&uart_B {
+	status = "okay";
+};
+
+&i2c0 {
+	status = "disabled";
+};

common/arch/arm/boot/dts/amlogic/mesongxl.dtsi

 b_uart_pins:b_uart {
 	mux {
 		groups = "uart_tx_b",
-			"uart_rx_b",
-			"uart_cts_b",
-			"uart_rts_b";
+			"uart_rx_b";
 		function = "uart_b";

device/khadas/common/products/mbox/ueventd.amlogic.rc

/dev/ttyS2 0666 system system

The DTS files in AOSP may differ; the patch above is only for reference.

@tenk.wang I mean how an app can access this serial port. Getting root permission on Android 11 is rather difficult, so I wanted to ask.

Here is a demo you can refer to.

@tenk.wang Thanks, I'll take a look first.
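The demo itself is not preserved in this thread. As a rough sketch of what native code (for example, a JNI library) typically does once the ueventd rule above grants 0666 on /dev/ttyS2, the following opens the port and configures it as raw 8N1 with POSIX termios. The device path, the 115200 baud rate, and the helper names make_raw_8n1 and open_serial are assumptions for illustration. On Android 11 an ordinary app is typically still blocked by SELinux even with 0666 permissions, so a policy change or a system app may also be needed; that part is outside this sketch.

```c
#include <assert.h>
#include <fcntl.h>
#include <termios.h>
#include <unistd.h>

/* Configure a termios struct for raw 8N1 I/O at `speed`, no flow control. */
static int make_raw_8n1(struct termios *t, speed_t speed)
{
    assert(t != NULL);
    t->c_iflag &= ~(IGNBRK | BRKINT | PARMRK | ISTRIP | INLCR | IGNCR | ICRNL | IXON | IXOFF);
    t->c_oflag &= ~OPOST;                         /* no output post-processing */
    t->c_lflag &= ~(ECHO | ECHONL | ICANON | ISIG | IEXTEN);
    t->c_cflag &= ~(CSIZE | PARENB | CSTOPB);     /* 8 data bits, no parity, 1 stop */
#ifdef CRTSCTS
    t->c_cflag &= ~CRTSCTS;                       /* CTS/RTS pins are not muxed */
#endif
    t->c_cflag |= CS8 | CREAD | CLOCAL;
    t->c_cc[VMIN] = 1;                            /* read() blocks for >= 1 byte */
    t->c_cc[VTIME] = 0;                           /* no inter-byte timeout */
    if (cfsetispeed(t, speed) != 0 || cfsetospeed(t, speed) != 0)
        return -1;
    return 0;
}

/* Open `path` (e.g. "/dev/ttyS2") and apply the settings; returns fd or -1. */
static int open_serial(const char *path, speed_t speed)
{
    struct termios t;
    int fd = open(path, O_RDWR | O_NOCTTY);

    if (fd < 0)
        return -1;
    if (tcgetattr(fd, &t) != 0 || make_raw_8n1(&t, speed) != 0 ||
        tcsetattr(fd, TCSANOW, &t) != 0) {
        close(fd);
        return -1;
    }
    return fd;
}
```

After open_serial("/dev/ttyS2", B115200) succeeds, plain read() and write() on the returned descriptor exchange bytes with the module.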

Hello, where can I download Google AOSP for the VIM3L and VIM3? Is it the same as Android TV? If not, where can I download the third-party Android TV builds for the VIM3L and VIM3? I used to download them from Files, but I can no longer see them.

Current Android TV ROMs by SuperCeleron for the VIM3L and VIM3. Note, however, that some streaming services will either stream in lower quality or not at all due to DRM requirements.

Thank you for your quick reply.

Hello all,

I want to enable PMU counters in the Google AOSP kernel. I enabled CONFIG_HW_PERF_EVENTS, but the counters read zero in Arm DS-5 Streamline, which warns that the PMU counters are not enabled or are not defined in the device tree. How can I define the PMU counters in the device tree? Should I add them to meson-g12-common.dtsi?

For example, for the HiKey970 board they are defined in "arch/arm64/boot/dts/hisilicon/kirin970-hikey970.dts" as follows:

pmu {
	compatible = "arm,armv8-pmuv3";
	interrupts = <0 24 4>,
		     <0 25 4>,
		     <0 26 4>,
		     <0 27 4>,
		     <0 2 4>,
		     <0 3 4>,
		     <0 4 4>,
		     <0 5 4>;
	interrupt-affinity = <&cpu0>,
			     <&cpu1>,
			     <&cpu2>,
			     <&cpu3>,
			     <&cpu4>,
			     <&cpu5>,
			     <&cpu6>,
			     <&cpu7>;
};

Best regards,

Ehsan
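Each entry in interrupts above is a three-cell GIC specifier <type number flags>. Type 0 is GIC_SPI and type 1 is GIC_PPI; the hardware interrupt ID is number + 32 for an SPI and number + 16 for a PPI, and flags 4 is IRQ_TYPE_LEVEL_HIGH. So <0 24 4> here and <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH> in the Amlogic nodes later in the thread use the same encoding. A small decoder as a sketch (gic_spec_to_intid is a hypothetical helper, not a kernel function):

```c
#include <assert.h>
#include <stdint.h>

/* Values from include/dt-bindings/interrupt-controller/arm-gic.h */
#define GIC_SPI 0
#define GIC_PPI 1

/* Convert the first two cells of a GIC specifier to the GIC INTID, or -1. */
static int gic_spec_to_intid(uint32_t type, uint32_t number)
{
    switch (type) {
    case GIC_SPI:
        return number <= 987 ? (int)(number + 32) : -1;  /* SPIs: INTID 32..1019 */
    case GIC_PPI:
        return number <= 15 ? (int)(number + 16) : -1;   /* PPIs: INTID 16..31 */
    default:
        return -1;
    }
}
```

For example, <0 24 4> is SPI 24, i.e. GIC INTID 56, level-triggered active-high.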

@Vladimir.v.v @Electr1 @tenk.wang could you please help me with this problem?

Best,

Ehsan

@Ehsan The dtsi file.

hikey-linaro/arch/arm64/boot/dts/amlogic/meson-khadas-vim3.dtsi

@tenk.wang Thanks for your reply.

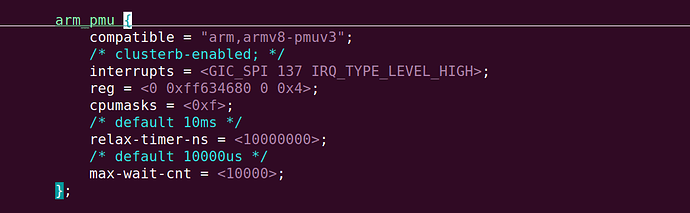

I added the following PMU definition to meson-khadas-vim3.dtsi:

arm_pmu {
	compatible = "arm,armv8-pmuv3";
	clusterb-enabled;
	interrupts = <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH>,
		     <GIC_SPI 171 IRQ_TYPE_LEVEL_HIGH>;
	reg = <0x0 0xff634680 0x0 0x4>,
	      <0x0 0xff6347c0 0x0 0x04>;
	cpumasks = <0x3>,
		   <0x3C>;
	// default 10ms
	relax-timer-ns = <10000000>;
	// default 10000us
	max-wait-cnt = <10000>;
};

But this only fixes the PMU for the A53 cores; the PMU counters for the A73 cores are still zero.

Related dmesg log:

[ 1.965309] hw perfevents: no interrupt-affinity property for /arm_pmu, guessing.
[ 1.970821] hw perfevents: enabled with armv8_pmuv3 PMU driver, 7 counters available
…
[ 7.131341] ueventd: LoadWithAliases was unable to load of:Narm_pmuT(null)Carm,armv8-pmuv3

Also, the output of "cat /sys/devices/armv8_pmuv3/cpus" is 0-1, which means only the A53 cores.

Best,

Ehsan
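A likely explanation for "cpus" showing 0-1: when a PMU node has no interrupt-affinity property, the stock drivers/perf/arm_pmu_platform.c prints the "guessing" warning and assumes interrupt i belongs to logical CPU i. With two interrupts (SPI 137 and SPI 171) that yields CPUs 0 and 1, which are the two A53 cores. The Amlogic-only properties (clusterb-enabled, reg, cpumasks, relax-timer-ns, max-wait-cnt) are ignored unless the kernel carries a vendor patch that parses them. The following user-space model of that fallback is a sketch, not driver code; pmu_supported_cpus is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Model of the stock fallback: interrupt i maps to affinity[i] when an
 * interrupt-affinity list exists, otherwise to logical CPU i ("guessing").
 * `affinity` may be NULL. Returns the resulting supported-CPU bitmask.
 */
static uint32_t pmu_supported_cpus(int num_irqs, const int *affinity, int nr_cpus)
{
    uint32_t mask = 0;

    for (int i = 0; i < num_irqs; i++) {
        int cpu = affinity ? affinity[i] : i;
        if (cpu >= 0 && cpu < nr_cpus && cpu < 32)
            mask |= UINT32_C(1) << cpu;
    }
    return mask;
}
```

With two interrupts and no affinity list on a six-CPU VIM3 this returns 0x3, matching the observed 0-1.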

@Ehsan You can try it.

@tenk.wang I tried adding your recommended arm_pmu definition to arch/arm64/boot/dts/amlogic/meson-khadas-vim3.dtsi; however, the problem is not solved.

Should we perhaps use cpumasks = <0x3F>, since this board has 6 cores?

I traced the warning in the kernel dmesg (hw perfevents: no interrupt-affinity property for /arm_pmu, guessing) to drivers/perf/arm_pmu_platform.c.

I am wondering whether it is possible to enable PMU counters for both clusters (A53 and A73) in this kernel.

I wish I could use the Khadas kernel (in Khadas AOSP), as it supports the NPU and PMU counters, but the problem with the Khadas kernel is that it lacks GPU DVFS. Is there a chance the Khadas team will add the ability to change the GPU frequency in their kernel? (In the Khadas kernel there is no devfreq option for the GPU in the kernel config, and drivers/gpu/arm does not exist.)

Best,

Ehsan
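In the vendor patch posted below, cpumasks holds one 32-bit CPU bitmap per cluster: <0x3> selects CPUs 0-1 (the A53s) and <0x3C> selects CPUs 2-5 (the A73s), assuming the usual VIM3 numbering with the A53 cluster first. amlpmu_init() rejects masks that miss every possible CPU or that overlap, and amlpmu_irq_fix() keeps only 4 bits of each cluster's status register ("pmuirq_val &= 0xf"), so a single <0x3F> covering six CPUs could not be fully represented by that code. The sketch below mirrors those checks in user space and adds the 4-bit window check, which is not in the patch; all names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_CLUSTER_NR 2
#define CLUSTER_STATUS_BITS 4   /* patch masks each status register with 0xf */

/* Lowest set bit of `m`, or -1 if `m` is empty (like cpumask_first()). */
static int lowest_cpu(uint32_t m)
{
    for (int cpu = 0; cpu < 32; cpu++)
        if (m & (UINT32_C(1) << cpu))
            return cpu;
    return -1;
}

/* Returns 0 if the per-cluster masks look usable, -1 otherwise. */
static int check_cluster_masks(const uint32_t *masks, int nr_clusters, uint32_t possible)
{
    uint32_t seen = 0;

    assert(nr_clusters >= 1 && nr_clusters <= MAX_CLUSTER_NR);
    for (int c = 0; c < nr_clusters; c++) {
        uint32_t m = masks[c];
        int first;

        if ((m & possible) == 0)   /* as in the patch: must hit a possible CPU */
            return -1;
        if (m & seen)              /* as in the patch: clusters must not overlap */
            return -1;
        first = lowest_cpu(m);
        /* extra check: CPUs above first + 3 can never be flagged */
        if (first + CLUSTER_STATUS_BITS < 32 &&
            (m >> (first + CLUSTER_STATUS_BITS)) != 0)
            return -1;
        seen |= m;
    }
    return 0;
}
```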


@Ehsan I have a PMU patch you can refer to:
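As far as can be read from amlpmu_irq_fix() in the patch below, the core idea is that all cores of a cluster share one PMU SPI, and a per-cluster register (the first reg entry, 0xff634680) reports which cores raised it. The low 4 bits are shifted by the cluster's first CPU number to form a logical-CPU bitmap, and every flagged CPU other than the current one is sent an IPI so it can handle its own counters. A user-space sketch of that decoding step (cpus_to_ipi is a hypothetical name):

```c
#include <assert.h>
#include <stdint.h>

/*
 * Decode a cluster status register value the way amlpmu_irq_fix() does:
 * keep the low 4 bits, shift them to the cluster's first CPU, restrict to
 * the cluster, and skip the current CPU. Returns the CPUs that get an IPI.
 */
static uint32_t cpus_to_ipi(uint32_t reg_val, int first_cpu,
                            uint32_t cluster_mask, int cur_cpu)
{
    uint32_t pending;

    assert(first_cpu >= 0 && first_cpu <= 28);
    assert(cur_cpu >= 0 && cur_cpu < 32);
    pending = (reg_val & 0xfu) << first_cpu;
    pending &= cluster_mask;                 /* for_each_cpu_and(cluster, ...) */
    pending &= ~(UINT32_C(1) << cur_cpu);    /* the "ownercpu" is skipped */
    return pending;
}
```

For example, if the A73 cluster (first CPU 2) reads 0x2 while the SPI is routed to CPU 2, only CPU 3 receives an IPI.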

commit 55f60c41f7cbb5f2aae97bd6a6d9ca87fba68c0f

Author: Hanjie Lin <hanjie.lin@amlogic.com>

Date: Fri Nov 13 15:19:01 2020 +0800

perf: add pmu support [1/1]

PD#SWPL-34438

Problem:

add pmu support

Solution:

add pmu support

Verify:

sc2

Change-Id: Id61fe465d5914d6dff44002bb086c74320f1683a

Signed-off-by: Hanjie Lin <hanjie.lin@amlogic.com>

Signed-off-by: fushan.zeng <fushan.zeng@amlogic.com>

diff --git a/arch/arm/boot/dts/amlogic/meson-g12-common.dtsi b/arch/arm/boot/dts/amlogic/meson-g12-common.dtsi

index e921c24..4dc6815 100644

--- a/arch/arm/boot/dts/amlogic/meson-g12-common.dtsi

+++ b/arch/arm/boot/dts/amlogic/meson-g12-common.dtsi

@@ -2390,6 +2390,19 @@

};

};

#endif

+

+ arm_pmu {

+ compatible = "arm,cortex-a15-pmu";

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH>;

+ reg = <0xff634680 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@ffc01000 {

compatible = "arm,gic-400";

reg = <0xffc01000 0x1000>,

diff --git a/arch/arm/boot/dts/amlogic/meson-sc2.dtsi b/arch/arm/boot/dts/amlogic/meson-sc2.dtsi

index 1b8af0e..9506e35 100644

--- a/arch/arm/boot/dts/amlogic/meson-sc2.dtsi

+++ b/arch/arm/boot/dts/amlogic/meson-sc2.dtsi

@@ -170,6 +170,23 @@

min_delta_ns=<10>;

};

+ arm_pmu {

+ compatible = "arm,cortex-a15-pmu";

+ private-interrupts;

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 235 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 236 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 237 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 238 IRQ_TYPE_LEVEL_HIGH>;

+

+ reg = <0xff634680 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@fff01000 {

compatible = "arm,cortex-a15-gic", "arm,cortex-a9-gic";

#interrupt-cells = <3>;

@@ -819,7 +836,7 @@

pl-periph-id = <0>; /** lm name */

clock-src = "usb0"; /** clock src */

port-id = <0>; /** ref to mach/usb.h */

- port-type = <2>; /** 0: otg, 1: host, 2: slave */

+ port-type = <2>; /** 0: otg, 1: host, 2: slave */

port-speed = <0>; /** 0: default, high, 1: full */

port-config = <0>; /** 0: default */

/*0:default,1:single,2:incr,3:incr4,4:incr8,5:incr16,6:disable*/

diff --git a/arch/arm/boot/dts/amlogic/meson-tm-common.dtsi b/arch/arm/boot/dts/amlogic/meson-tm-common.dtsi

index ec805a4..1f8ca99 100644

--- a/arch/arm/boot/dts/amlogic/meson-tm-common.dtsi

+++ b/arch/arm/boot/dts/amlogic/meson-tm-common.dtsi

@@ -1333,6 +1333,18 @@

};

+ arm_pmu {

+ compatible = "arm,cortex-a15-pmu";

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH>;

+ reg = <0xff634680 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@ffc01000 {

compatible = "arm,gic-400";

reg = <0xffc01000 0x1000>,

@@ -1744,7 +1756,7 @@

pl-periph-id = <0>; /** lm name */

clock-src = "usb0"; /** clock src */

port-id = <0>; /** ref to mach/usb.h */

- port-type = <2>; /** 0: otg, 1: host, 2: slave */

+ port-type = <2>; /** 0: otg, 1: host, 2: slave */

port-speed = <0>; /** 0: default, high, 1: full */

port-config = <0>; /** 0: default */

/*0:default,1:single,2:incr,3:incr4,*/

diff --git a/arch/arm/kernel/perf_event_v7.c b/arch/arm/kernel/perf_event_v7.c

index 2924d79..132d0372 100644

--- a/arch/arm/kernel/perf_event_v7.c

+++ b/arch/arm/kernel/perf_event_v7.c

@@ -946,7 +946,22 @@ static void armv7pmu_disable_event(struct perf_event *event)

raw_spin_unlock_irqrestore(&events->pmu_lock, flags);

}

+#ifdef CONFIG_AMLOGIC_MODIFY

+#include <linux/perf/arm_pmu.h>

+

+static irqreturn_t armv7pmu_handle_irq(int irq_num, struct arm_pmu *dev);

+

+void amlpmu_handle_irq_ipi(void *arg)

+{

+ armv7pmu_handle_irq(-1, amlpmu_ctx.pmu);

+}

+#endif

+

+#ifdef CONFIG_AMLOGIC_MODIFY

+static irqreturn_t armv7pmu_handle_irq(int irq_num, struct arm_pmu *cpu_pmu)

+#else

static irqreturn_t armv7pmu_handle_irq(struct arm_pmu *cpu_pmu)

+#endif

{

u32 pmnc;

struct perf_sample_data data;

@@ -959,6 +974,15 @@ static irqreturn_t armv7pmu_handle_irq(struct arm_pmu *cpu_pmu)

*/

pmnc = armv7_pmnc_getreset_flags();

+#ifdef CONFIG_AMLOGIC_MODIFY

+ if (!amlpmu_ctx.private_interrupts) {

+ /* amlpmu have routed the interrupt already, so return IRQ_HANDLED */

+ if (amlpmu_handle_irq(cpu_pmu,

+ irq_num,

+ armv7_pmnc_has_overflowed(pmnc)))

+ return IRQ_HANDLED;

+ }

+#endif

/*

* Did an overflow occur?

*/

diff --git a/arch/arm64/boot/dts/amlogic/meson-g12-common.dtsi b/arch/arm64/boot/dts/amlogic/meson-g12-common.dtsi

index 75726fc..ee39cce 100644

--- a/arch/arm64/boot/dts/amlogic/meson-g12-common.dtsi

+++ b/arch/arm64/boot/dts/amlogic/meson-g12-common.dtsi

@@ -2391,6 +2391,19 @@

};

};

#endif

+

+ arm_pmu {

+ compatible = "arm,armv8-pmuv3";

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH>;

+ reg = <0 0xff634680 0 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@ffc01000 {

compatible = "arm,gic-400";

reg = <0x0 0xffc01000 0 0x1000>,

diff --git a/arch/arm64/boot/dts/amlogic/meson-sc2.dtsi b/arch/arm64/boot/dts/amlogic/meson-sc2.dtsi

index 1b1fbc9..ecdc342 100644

--- a/arch/arm64/boot/dts/amlogic/meson-sc2.dtsi

+++ b/arch/arm64/boot/dts/amlogic/meson-sc2.dtsi

@@ -163,6 +163,23 @@

min_delta_ns=<10>;

};

+ arm_pmu {

+ compatible = "arm,armv8-pmuv3";

+ private-interrupts;

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 235 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 236 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 237 IRQ_TYPE_LEVEL_HIGH>,

+ <GIC_SPI 238 IRQ_TYPE_LEVEL_HIGH>;

+

+ reg = <0x0 0xff634680 0x0 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@fff01000 {

compatible = "arm,cortex-a15-gic", "arm,cortex-a9-gic";

#interrupt-cells = <3>;

@@ -810,7 +827,7 @@

pl-periph-id = <0>; /** lm name */

clock-src = "usb0"; /** clock src */

port-id = <0>; /** ref to mach/usb.h */

- port-type = <2>; /** 0: otg, 1: host, 2: slave */

+ port-type = <2>; /** 0: otg, 1: host, 2: slave */

port-speed = <0>; /** 0: default, high, 1: full */

port-config = <0>; /** 0: default */

/*0:default,1:single,2:incr,3:incr4,4:incr8,5:incr16,6:disable*/

diff --git a/arch/arm64/boot/dts/amlogic/meson-tm-common.dtsi b/arch/arm64/boot/dts/amlogic/meson-tm-common.dtsi

index 3281273..24366d6 100644

--- a/arch/arm64/boot/dts/amlogic/meson-tm-common.dtsi

+++ b/arch/arm64/boot/dts/amlogic/meson-tm-common.dtsi

@@ -1294,6 +1294,18 @@

};

+ arm_pmu {

+ compatible = "arm,armv8-pmuv3";

+ /* clusterb-enabled; */

+ interrupts = <GIC_SPI 137 IRQ_TYPE_LEVEL_HIGH>;

+ reg = <0 0xff634680 0 0x4>;

+ cpumasks = <0xf>;

+ /* default 10ms */

+ relax-timer-ns = <10000000>;

+ /* default 10000us */

+ max-wait-cnt = <10000>;

+ };

+

gic: interrupt-controller@ffc01000 {

compatible = "arm,gic-400";

reg = <0x0 0xffc01000 0 0x1000>,

@@ -1758,7 +1770,7 @@

pl-periph-id = <0>; /** lm name */

clock-src = "usb0"; /** clock src */

port-id = <0>; /** ref to mach/usb.h */

- port-type = <2>; /** 0: otg, 1: host, 2: slave */

+ port-type = <2>; /** 0: otg, 1: host, 2: slave */

port-speed = <0>; /** 0: default, high, 1: full */

port-config = <0>; /** 0: default */

/*0:default,1:single,2:incr,3:incr4,*/

diff --git a/arch/arm64/kernel/perf_event.c b/arch/arm64/kernel/perf_event.c

index 19128d9..18eb59b 100644

--- a/arch/arm64/kernel/perf_event.c

+++ b/arch/arm64/kernel/perf_event.c

@@ -689,7 +689,22 @@ static void armv8pmu_stop(struct arm_pmu *cpu_pmu)

raw_spin_unlock_irqrestore(&events->pmu_lock, flags);

}

+#ifdef CONFIG_AMLOGIC_MODIFY

+#include <linux/perf/arm_pmu.h>

+

+static irqreturn_t armv8pmu_handle_irq(int irq_num, struct arm_pmu *dev);

+

+void amlpmu_handle_irq_ipi(void *arg)

+{

+ armv8pmu_handle_irq(-1, amlpmu_ctx.pmu);

+}

+#endif

+

+#ifdef CONFIG_AMLOGIC_MODIFY

+static irqreturn_t armv8pmu_handle_irq(int irq_num, struct arm_pmu *cpu_pmu)

+#else

static irqreturn_t armv8pmu_handle_irq(struct arm_pmu *cpu_pmu)

+#endif

{

u32 pmovsr;

struct perf_sample_data data;

@@ -702,12 +717,23 @@ static irqreturn_t armv8pmu_handle_irq(struct arm_pmu *cpu_pmu)

*/

pmovsr = armv8pmu_getreset_flags();

+#ifdef CONFIG_AMLOGIC_MODIFY

+ if (!amlpmu_ctx.private_interrupts) {

+ /* amlpmu have routed the interrupt already, so return IRQ_HANDLED */

+ if (amlpmu_handle_irq(cpu_pmu,

+ irq_num,

+ armv8pmu_has_overflowed(pmovsr)))

+ return IRQ_HANDLED;

+ }

+#endif

+

/*

* Did an overflow occur?

*/

if (!armv8pmu_has_overflowed(pmovsr))

return IRQ_NONE;

+

/*

* Handle the counter(s) overflow(s)

*/

diff --git a/drivers/perf/arm_pmu.c b/drivers/perf/arm_pmu.c

index df352b3..53cef5f 100644

--- a/drivers/perf/arm_pmu.c

+++ b/drivers/perf/arm_pmu.c

@@ -350,7 +350,11 @@ static irqreturn_t armpmu_dispatch_irq(int irq, void *dev)

return IRQ_NONE;

start_clock = sched_clock();

+#ifdef CONFIG_AMLOGIC_MODIFY

+ ret = armpmu->handle_irq(irq, armpmu);

+#else

ret = armpmu->handle_irq(armpmu);

+#endif

finish_clock = sched_clock();

perf_sample_event_took(finish_clock - start_clock);

diff --git a/drivers/perf/arm_pmu_platform.c b/drivers/perf/arm_pmu_platform.c

index 933bd84..b570073 100644

--- a/drivers/perf/arm_pmu_platform.c

+++ b/drivers/perf/arm_pmu_platform.c

@@ -187,6 +187,539 @@ static void armpmu_free_irqs(struct arm_pmu *armpmu)

}

}

+#ifdef CONFIG_AMLOGIC_MODIFY

+#include <linux/of_address.h>

+#include <linux/delay.h>

+

+struct amlpmu_context amlpmu_ctx;

+

+static enum hrtimer_restart amlpmu_relax_timer_func(struct hrtimer *timer)

+{

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+ struct amlpmu_cpuinfo *ci;

+

+ ci = per_cpu_ptr(ctx->cpuinfo, 0);

+

+ pr_info("enable cpu0_irq %d again, irq cnt = %lu\n",

+ ci->irq_num,

+ ci->valid_irq_cnt);

+ enable_irq(ci->irq_num);

+

+ return HRTIMER_NORESTART;

+}

+

+void amlpmu_relax_timer_start(int other_cpu)

+{

+ struct amlpmu_cpuinfo *ci;

+ int cpu;

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+

+ cpu = smp_processor_id();

+ WARN_ON(cpu != 0);

+

+ ci = per_cpu_ptr(ctx->cpuinfo, 0);

+

+ pr_warn("wait cpu %d fixup done timeout, main cpu irq cnt = %lu\n",

+ other_cpu,

+ ci->valid_irq_cnt);

+

+ if (hrtimer_active(&ctx->relax_timer)) {

+ pr_alert("relax_timer already active, return!\n");

+ return;

+ }

+

+ disable_irq_nosync(ci->irq_num);

+

+ hrtimer_start(&ctx->relax_timer,

+ ns_to_ktime(ctx->relax_timer_ns),

+ HRTIMER_MODE_REL);

+}

+

+static void amlpmu_fix_setup_affinity(int irq)

+{

+ int cluster_index = 0;

+ int cpu;

+ int affinity_cpu = -1;

+ struct amlpmu_cpuinfo *ci = NULL;

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+ s64 latest_next_stamp = S64_MAX;

+

+ if (irq == ctx->irqs[0]) {

+ cluster_index = 0;

+ } else if (ctx->clusterb_enabled && irq == ctx->irqs[1]) {

+ cluster_index = 1;

+ } else {

+ pr_err("%s() bad irq = %d\n", __func__, irq);

+ return;

+ }

+

+ /*

+ * find latest next_predicted_stamp cpu for affinity cpu

+ * if no cpu have predict time, select first cpu of cpumask

+ * todo:

+ * - if a cpu predict failed for continuous N times,

+ * try add some punishment.

+ * - if no cpu have predicted time, try recently most used cpu

+ * for affinity

+ * - try to keep and promote prediction accuracy

+ */

+ for_each_cpu_and(cpu,

+ &ctx->cpumasks[cluster_index],

+ cpu_possible_mask) {

+ ci = per_cpu_ptr(ctx->cpuinfo, cpu);

+ //pr_info("cpu = %d, ci->next_predicted_stamp = %lld\n",

+ // cpu, ci->next_predicted_stamp);

+ if (ci->next_predicted_stamp &&

+ ci->next_predicted_stamp < latest_next_stamp) {

+ latest_next_stamp = ci->next_predicted_stamp;

+ affinity_cpu = cpu;

+ }

+ }

+

+ if (affinity_cpu == -1) {

+ affinity_cpu = cpumask_first(&ctx->cpumasks[cluster_index]);

+ pr_debug("used first cpu: %d, cluster: 0x%lx\n",

+ affinity_cpu,

+ *cpumask_bits(&ctx->cpumasks[cluster_index]));

+ } else {

+ pr_debug("find affinity cpu: %d, next_predicted_stamp: %lld\n",

+ affinity_cpu,

+ latest_next_stamp);

+ }

+

+ if (irq_set_affinity(irq, cpumask_of(affinity_cpu)))

+ pr_err("irq_set_affinity() failed irq: %d, affinity_cpu: %d\n",

+ irq,

+ affinity_cpu);

+}

+

+/*

+ * on pmu interrupt generated cpu, @irq_num is valid

+ * on other cpus(called by AML_PMU_IPI), @irq_num is -1

+ */

+static int amlpmu_irq_fix(int irq_num)

+{

+ int i;

+ int cpu;

+ int cur_cpu;

+ int pmuirq_val;

+ int cluster_index = 0;

+ int fix_success = 0;

+ struct amlpmu_cpuinfo *ci;

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+ int max_wait_cnt = ctx->max_wait_cnt;

+

+ call_single_data_t csd_stack = {

+ .func = amlpmu_handle_irq_ipi,

+ .info = NULL,

+ };

+

+ cur_cpu = smp_processor_id();

+

+ if (irq_num == ctx->irqs[0]) {

+ cluster_index = 0;

+ } else if (ctx->clusterb_enabled && irq_num == ctx->irqs[1]) {

+ cluster_index = 1;

+ } else {

+ pr_err("%s() bad irq = %d\n", __func__, irq_num);

+ return fix_success;

+ }

+

+ if (!cpumask_test_cpu(cur_cpu, &ctx->cpumasks[cluster_index])) {

+ pr_warn("%s() cur_cpu %d not in cluster: 0x%lx\n",

+ __func__,

+ cur_cpu,

+ *cpumask_bits(&ctx->cpumasks[cluster_index]));

+ }

+

+ pmuirq_val = readl(ctx->regs[cluster_index]);

+ pmuirq_val &= 0xf;

+ pmuirq_val <<= ctx->first_cpus[cluster_index];

+

+ pr_debug("%s() val=0x%0x, first_cpu=%d, cluster=0x%lx\n",

+ __func__,

+ readl(ctx->regs[cluster_index]),

+ ctx->first_cpus[cluster_index],

+ *cpumask_bits(&ctx->cpumasks[cluster_index]));

+

+ for_each_cpu_and(cpu,

+ &ctx->cpumasks[cluster_index],

+ cpu_possible_mask) {

+ if (pmuirq_val & (1 << cpu)) {

+ if (cpu == cur_cpu) {

+ pr_info("ownercpu %d in pmuirq = 0x%x\n",

+ cur_cpu, pmuirq_val);

+ continue;

+ }

+ pr_debug("fix pmu irq cpu %d, pmuirq = 0x%x\n",

+ cpu,

+ pmuirq_val);

+

+ ci = per_cpu_ptr(ctx->cpuinfo, cpu);

+ WRITE_ONCE(ci->fix_done, 0);

+ WRITE_ONCE(ci->fix_overflowed, 0);

+

+ csd_stack.flags = 0;

+ smp_call_function_single_async(cpu, &csd_stack);

+

+ for (i = 0; i < max_wait_cnt; i++) {

+ if (READ_ONCE(ci->fix_done))

+ break;

+

+ udelay(1);

+ }

+

+ if (i == ctx->max_wait_cnt) {

+ pr_err("wait for cpu %d done timeout\n",

+ cpu);

+ //amlpmu_relax_timer_start(cpu);

+ }

+

+ if (READ_ONCE(ci->fix_overflowed))

+ fix_success++;

+

+ pr_info("fix pmu irq cpu %d, fix_success = %d\n",

+ cpu,

+ fix_success);

+ }

+ }

+

+ return fix_success;

+}

+

+static void amlpmu_update_stats(int irq_num,

+ int has_overflowed,

+ int fix_success)

+{

+ int freq;

+ int i;

+ ktime_t stamp;

+ unsigned long time = jiffies;

+ struct amlpmu_cpuinfo *ci;

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+

+ ci = this_cpu_ptr(ctx->cpuinfo);

+

+ if (!has_overflowed && !fix_success) {

+ pr_debug("empty_irq_cnt: %lu\n", ci->empty_irq_cnt);

+ ci->empty_irq_cnt++;

+ ci->empty_irq_time = time;

+ }

+

+ if (fix_success) {

+ /* send IPI success */

+ pr_debug("fix_irq_cnt: %lu, fix_success = %d\n",

+ ci->fix_irq_cnt,

+ fix_success);

+ ci->fix_irq_cnt++;

+ ci->fix_irq_time = time;

+ }

+

+ if (has_overflowed) {

+ ci->valid_irq_cnt++;

+ ci->valid_irq_time = time;

+

+ stamp = ktime_get();

+ ci->stamp_deltas[ci->valid_irq_cnt % MAX_DELTA_CNT] =

+ stamp - ci->last_stamp;

+ ci->last_stamp = stamp;

+

+ /* update avg_delta if it's valid */

+ ci->avg_delta = 0;

+ for (i = 0; i < MAX_DELTA_CNT; i++)

+ ci->avg_delta += ci->stamp_deltas[i];

+

+ ci->avg_delta /= MAX_DELTA_CNT;

+ for (i = 0; i < MAX_DELTA_CNT; i++) {

+ if ((ci->stamp_deltas[i] > ci->avg_delta * 3 / 2) ||

+ (ci->stamp_deltas[i] < ci->avg_delta / 2)) {

+ ci->avg_delta = 0;

+ break;

+ }

+ }

+

+ if (ci->avg_delta)

+ ci->next_predicted_stamp =

+ ci->last_stamp + ci->avg_delta;

+ else

+ ci->next_predicted_stamp = 0;

+

+ pr_debug("irq_num = %d, valid_irq_cnt = %lu\n",

+ irq_num,

+ ci->valid_irq_cnt);

+ pr_debug("cur_delta = %lld, avg_delta = %lld, next = %lld\n",

+ ci->stamp_deltas[ci->valid_irq_cnt % MAX_DELTA_CNT],

+ ci->avg_delta,

+ ci->next_predicted_stamp);

+ }

+

+ if (time_after(ci->valid_irq_time, ci->last_valid_irq_time + 2 * HZ)) {

+ freq = ci->empty_irq_cnt - ci->last_empty_irq_cnt;

+ freq *= HZ;

+ freq /= (ci->empty_irq_time - ci->last_empty_irq_time);

+ pr_info("######## empty_irq_cnt: %lu - %lu = %lu, freq = %d\n",

+ ci->empty_irq_cnt,

+ ci->last_empty_irq_cnt,

+ ci->empty_irq_cnt - ci->last_empty_irq_cnt,

+ freq);

+

+ ci->last_empty_irq_cnt = ci->empty_irq_cnt;

+ ci->last_empty_irq_time = ci->empty_irq_time;

+

+ freq = ci->fix_irq_cnt - ci->last_fix_irq_cnt;

+ freq *= HZ;

+ freq /= (ci->fix_irq_time - ci->last_fix_irq_time);

+ pr_info("######## fix_irq_cnt: %lu - %lu = %lu, freq = %d\n",

+ ci->fix_irq_cnt,

+ ci->last_fix_irq_cnt,

+ ci->fix_irq_cnt - ci->last_fix_irq_cnt,

+ freq);

+

+ ci->last_fix_irq_cnt = ci->fix_irq_cnt;

+ ci->last_fix_irq_time = ci->fix_irq_time;

+

+ freq = ci->valid_irq_cnt - ci->last_valid_irq_cnt;

+ freq *= HZ;

+ freq /= (ci->valid_irq_time - ci->last_valid_irq_time);

+ pr_info("######## valid_irq_cnt: %lu - %lu = %lu, freq = %d\n",

+ ci->valid_irq_cnt,

+ ci->last_valid_irq_cnt,

+ ci->valid_irq_cnt - ci->last_valid_irq_cnt,

+ freq);

+

+ ci->last_valid_irq_cnt = ci->valid_irq_cnt;

+ ci->last_valid_irq_time = ci->valid_irq_time;

+ }

+}

+

+int amlpmu_handle_irq(struct arm_pmu *cpu_pmu, int irq_num, int has_overflowed)

+{

+ int cpu;

+ int fix_success = 0;

+ struct amlpmu_cpuinfo *ci;

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+

+ ci = this_cpu_ptr(ctx->cpuinfo);

+ ci->irq_num = irq_num;

+ cpu = smp_processor_id();

+

+ pr_debug("%s() irq_num = %d, overflowed = %d\n",

+ __func__,

+ irq_num,

+ has_overflowed);

+

+ /*

+ * if current cpu is not overflowed, it's possible some other

+ * cpus caused the pmu interrupt.

+ * so if current cpu is interrupt generated cpu(irq_num != -1),

+ call amlpmu_irq_fix() to try to send IPIs to other cpus and wait

+ * for fix_done.

+ */

+ if (!has_overflowed && irq_num != -1)

+ fix_success = amlpmu_irq_fix(irq_num);

+

+ /*

+ * valid_irq, fix_irq and empty_irq status

+ * avg_delta time account to predict next interrupt time

+ */

+ amlpmu_update_stats(irq_num, has_overflowed, fix_success);

+

+ /*

+ * armv*pmu_getreset_flags() will clear interrupt. If current

+ * interrupt is IPI fix(irq_num = -1), interrupt generated cpu

+ * now is waiting for ci->fix_done=1(clear interrupt).

+ * we must set ci->fix_done to 1 after amlpmu_update_stats(),

+ * because interrupt generated cpu need this predict time info

+ * to setup interrupt affinity.

+ */

+ if (irq_num == -1) {

+ WRITE_ONCE(ci->fix_overflowed, has_overflowed);

+ /* fix_overflowed must before fix_done */

+ mb();

+ WRITE_ONCE(ci->fix_done, 1);

+ }

+

+ /* only interrupt generated cpu need setup affinity */

+ if (irq_num != -1)

+ amlpmu_fix_setup_affinity(irq_num);

+

+ /*

+ * when a pmu interrupt generated, if current cpu is not

+ * overflowed and some other cpus succeed in handling the

+ * interrupt by IPIs return true.

+ */

+ return !has_overflowed && fix_success;

+}

+

+static int amlpmu_init(struct platform_device *pdev, struct arm_pmu *pmu)

+{

+ int cpu;

+ int ret = 0;

+ int irq;

+ u32 cpumasks[MAX_CLUSTER_NR] = {0};

+ struct amlpmu_context *ctx = &amlpmu_ctx;

+ struct amlpmu_cpuinfo *ci;

+ int cluster_nr = 1;

+

+ memset(ctx, 0, sizeof(*ctx));

+

+ /* each cpu has its own pmu interrupt */

+ if (of_property_read_bool(pdev->dev.of_node, "private-interrupts")) {

+ ctx->private_interrupts = 1;

+ return 0;

+ }

+

+ ctx->cpuinfo = __alloc_percpu_gfp(sizeof(struct amlpmu_cpuinfo),

+ SMP_CACHE_BYTES,

+ GFP_KERNEL | __GFP_ZERO);

+ if (!ctx->cpuinfo) {

+ pr_err("alloc percpu failed\n");

+ ret = -ENOMEM;

+ goto free;

+ }

+

+ for_each_possible_cpu(cpu) {

+ ci = per_cpu_ptr(ctx->cpuinfo, cpu);

+ ci->last_valid_irq_time = INITIAL_JIFFIES;

+ ci->last_fix_irq_time = INITIAL_JIFFIES;

+ ci->last_empty_irq_time = INITIAL_JIFFIES;

+ }

+

+ ctx->pmu = pmu;

+

+ if (of_property_read_bool(pdev->dev.of_node, "clusterb-enabled")) {

+ ctx->clusterb_enabled = 1;

+ cluster_nr = MAX_CLUSTER_NR;

+ }

+

+ pr_info("clusterb_enabled = %d\n", ctx->clusterb_enabled);

+

+ ret = of_property_read_u32_array(pdev->dev.of_node,

+ "cpumasks",

+ cpumasks,

+ cluster_nr);

+ if (ret) {

+ pr_err("read prop cpumasks failed, ret = %d\n", ret);

+ ret = -EINVAL;

+ goto free;

+ }

+ pr_info("cpumasks 0x%0x, 0x%0x\n", cpumasks[0], cpumasks[1]);

+

+ ret = of_property_read_u32(pdev->dev.of_node,

+ "relax-timer-ns",

+ &ctx->relax_timer_ns);

+ if (ret) {

+ pr_err("read prop relax-timer-ns failed, ret = %d\n", ret);

+ ret = -EINVAL;

+ goto free;

+ }

+

+ ret = of_property_read_u32(pdev->dev.of_node,

+ "max-wait-cnt",

+ &ctx->max_wait_cnt);

+ if (ret) {

+ pr_err("read prop max-wait-cnt failed, ret = %d\n", ret);

+ ret = -EINVAL;

+ goto free;

+ }

+

+ irq = platform_get_irq(pdev, 0);

+ if (irq < 0) {

+ pr_err("get clusterA irq failed, %d\n", irq);

+ ret = -EINVAL;

+ goto free;

+ }

+ ctx->irqs[0] = irq;

+ pr_info("cluster A irq = %d\n", irq);

+

+ ctx->regs[0] = of_iomap(pdev->dev.of_node, 0);

+ if (!ctx->regs[0]) {

+ pr_err("of_iomap() clusterA failed\n");

+ ret = -ENOMEM;

+ goto free;

+ }

+

+ cpumask_clear(&ctx->cpumasks[0]);

+ memcpy(cpumask_bits(&ctx->cpumasks[0]),

+ &cpumasks[0],

+ sizeof(cpumasks[0]));

+ if (!cpumask_intersects(&ctx->cpumasks[0], cpu_possible_mask)) {

+ pr_err("bad cpumasks[0] 0x%x\n", cpumasks[0]);

+ ret = -EINVAL;

+ goto free;

+ }

+ ctx->first_cpus[0] = cpumask_first(&ctx->cpumasks[0]);

+

+ for_each_cpu(cpu, &ctx->cpumasks[0]) {

+ cpumask_set_cpu(cpu, &pmu->supported_cpus);

+ }

+

+ amlpmu_fix_setup_affinity(ctx->irqs[0]);

+

+ hrtimer_init(&ctx->relax_timer,

+ CLOCK_MONOTONIC,

+ HRTIMER_MODE_REL);

+ ctx->relax_timer.function = amlpmu_relax_timer_func;

+

+ if (!ctx->clusterb_enabled)

+ return 0;

+

+ irq = platform_get_irq(pdev, 1);

+ if (irq < 0) {

+ pr_err("get clusterB irq failed, %d\n", irq);

+ ret = -EINVAL;

+ goto free;

+ }

+ ctx->irqs[1] = irq;

+ pr_info("cluster B irq = %d\n", irq);

+

+ ctx->regs[1] = of_iomap(pdev->dev.of_node, 1);

+ if (!ctx->regs[1]) {

+ pr_err("of_iomap() clusterB failed\n");

+ ret = -ENOMEM;

+ goto free;

+ }

+

+ cpumask_clear(&ctx->cpumasks[1]);

+ memcpy(cpumask_bits(&ctx->cpumasks[1]),

+ &cpumasks[1],

+ sizeof(cpumasks[1]));

+ if (!cpumask_intersects(&ctx->cpumasks[1], cpu_possible_mask)) {

+ pr_err("bad cpumasks[1] 0x%x\n", cpumasks[1]);

+ ret = -EINVAL;

+ goto free;

+ } else if (cpumask_intersects(&ctx->cpumasks[0], &ctx->cpumasks[1])) {

+ pr_err("cpumasks intersect 0x%x : 0x%x\n",

+ cpumasks[0],

+ cpumasks[1]);

+ ret = -EINVAL;

+ goto free;

+ }

+ ctx->first_cpus[1] = cpumask_first(&ctx->cpumasks[1]);

+

+ for_each_cpu(cpu, &ctx->cpumasks[1]) {

+ cpumask_set_cpu(cpu, &pmu->supported_cpus);

+ }

+

+ amlpmu_fix_setup_affinity(ctx->irqs[1]);

+

+ return 0;

+

+free:

+ if (ctx->cpuinfo)

+ free_percpu(ctx->cpuinfo);

+

+ if (ctx->regs[0])

+ iounmap(ctx->regs[0]);

+

+ if (ctx->regs[1])

+ iounmap(ctx->regs[1]);

+

+ return ret;

+}

+

+#endif

+

int arm_pmu_device_probe(struct platform_device *pdev,

const struct of_device_id *of_table,

const struct pmu_probe_info *probe_table)

@@ -238,6 +771,13 @@ int arm_pmu_device_probe(struct platform_device *pdev,

if (ret)

goto out_free;

+#ifdef CONFIG_AMLOGIC_MODIFY

+ if (amlpmu_init(pdev, pmu)) {

+ pr_err("amlpmu_init() failed\n");

+ return 1;

+ }

+#endif

+

return 0;

out_free_irqs:

diff --git a/include/linux/perf/arm_pmu.h b/include/linux/perf/arm_pmu.h

index 71f525a..0561c42 100644

--- a/include/linux/perf/arm_pmu.h

+++ b/include/linux/perf/arm_pmu.h

@@ -80,7 +80,11 @@ struct arm_pmu {

struct pmu pmu;

cpumask_t supported_cpus;

char *name;

- irqreturn_t (*handle_irq)(struct arm_pmu *pmu);

+#ifdef CONFIG_AMLOGIC_MODIFY

+ irqreturn_t (*handle_irq)(int irq_num, struct arm_pmu *pmu);

+#else

+ irqreturn_t (*handle_irq)(struct arm_pmu *pmu);

+#endif

void (*enable)(struct perf_event *event);

void (*disable)(struct perf_event *event);

int (*get_event_idx)(struct pmu_hw_events *hw_events,

@@ -168,6 +172,98 @@ int armpmu_request_irq(int irq, int cpu);

void armpmu_free_irq(int irq, int cpu);

#define ARMV8_PMU_PDEV_NAME "armv8-pmu"

+#ifdef CONFIG_AMLOGIC_MODIFY

+#define MAX_DELTA_CNT 4

+struct amlpmu_cpuinfo {

+ int irq_num;

+

+ /*

+ * In interrupt generated cpu(affinity cpu)

+ * If pmu no overflowed, then we need to send IPI to some other cpus to

+ * fix it. And before send IPI, set corresponding cpu's fix_done and

+ * fix_overflowed to zero, in corresponding cpu's IPI interrupt will set

+ * fix_done to inform source cpu and if indeed pmu overflowed then also

+ set fix_overflowed to 1, then interrupt generated cpu can feel that.

+ */

+ int fix_done;

+ int fix_overflowed;

+

+ /* for interrupt affinity prediction */

+ ktime_t last_stamp;

+ s64 stamp_deltas[MAX_DELTA_CNT];

+ s64 avg_delta;

+ ktime_t next_predicted_stamp;

+

+ /*

+ * irq state account of this cpu

+ *

+ * - valid_irq_cnt:

+ * valid irq cnt.(pmu overflow happened)

+ * - fix_irq_cnt:

+ * when this cpu is pmu interrupt generated affinity cpu, a pmu

+ * interrupt if cpu affinity predict failed so no pmu overflow

+ * happened and succeeded send IPI to other cpu, then it's a send

+ * fix irq. So the lower is better.

+ * - empty_irq_cnt:

+ * when this cpu is pmu interrupt generated affinity cpu, a pmu

+ interrupt that no overflow happened and also no fix IPI sent to

+ * other cpus, then it's a empty irq.

+ * when this cpu is not affinity cpu, a IPI interrupt(pmu fix from

+ * affinity cpu) that no pmu overflow happened, it's a empty irq.

+ *

+ * attention:

+ * A interrupt can be a valid_irq and also a fix_irq.

+ */

+ unsigned long valid_irq_cnt;

+ unsigned long fix_irq_cnt;

+ unsigned long empty_irq_cnt;

+

+ unsigned long valid_irq_time;

+ unsigned long fix_irq_time;

+ unsigned long empty_irq_time;

+

+ unsigned long last_valid_irq_cnt;

+ unsigned long last_fix_irq_cnt;

+ unsigned long last_empty_irq_cnt;

+

+ unsigned long last_valid_irq_time;

+ unsigned long last_fix_irq_time;

+ unsigned long last_empty_irq_time;

+};

+

+#define MAX_CLUSTER_NR 2

+struct amlpmu_context {

+ struct amlpmu_cpuinfo __percpu *cpuinfo;

+

+ /* struct arm_pmu */

+ struct arm_pmu *pmu;

+

+ int private_interrupts;

+ int clusterb_enabled;

+

+ unsigned int __iomem *regs[MAX_CLUSTER_NR];

+ int irqs[MAX_CLUSTER_NR];

+ struct cpumask cpumasks[MAX_CLUSTER_NR];

+ int first_cpus[MAX_CLUSTER_NR];

+

+ /*

+ * In main pmu irq route wait for other cpu fix done may cause lockup,

+ * when lockup we disable main irq for a while.

+ * relax_timer will enable main irq again.

+ */

+ struct hrtimer relax_timer;

+

+ unsigned int relax_timer_ns;

+ unsigned int max_wait_cnt;

+};

+

+extern struct amlpmu_context amlpmu_ctx;

+

+int amlpmu_handle_irq(struct arm_pmu *cpu_pmu, int irq_num, int has_overflowed);

+

+/* defined in arch/arm(64)/kernel/perf_event(_v7).c */

+void amlpmu_handle_irq_ipi(void *arg);

+#endif

#endif /* CONFIG_ARM_PMU */
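amlpmu_update_stats() and amlpmu_fix_setup_affinity() in the patch above form a simple predictor: each CPU keeps its last MAX_DELTA_CNT (4) intervals between valid interrupts, averages them, discards the average if any interval falls outside [avg/2, 3·avg/2], and the shared SPI is routed to the CPU whose predicted next interrupt is earliest, falling back to the first CPU of the cluster. The rate printout in amlpmu_update_stats() also appears to divide by a jiffies delta that becomes zero when no empty or fix interrupt occurred since the previous report; irq_rate below guards that case. This is a user-space sketch with plain integer timestamps instead of ktime/jiffies; struct cpu_pred, pred_record, pick_affinity_cpu and irq_rate are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_DELTA_CNT 4

struct cpu_pred {
    int64_t last_stamp;
    int64_t deltas[MAX_DELTA_CNT];
    unsigned long valid_cnt;
    int64_t next_predicted;          /* 0 means "no prediction" */
};

/* Record a valid overflow at time `now`, following amlpmu_update_stats(). */
static void pred_record(struct cpu_pred *p, int64_t now)
{
    int64_t avg = 0;

    p->valid_cnt++;
    p->deltas[p->valid_cnt % MAX_DELTA_CNT] = now - p->last_stamp;
    p->last_stamp = now;

    for (int i = 0; i < MAX_DELTA_CNT; i++)
        avg += p->deltas[i];
    avg /= MAX_DELTA_CNT;
    for (int i = 0; i < MAX_DELTA_CNT; i++) {
        if (p->deltas[i] > avg * 3 / 2 || p->deltas[i] < avg / 2) {
            avg = 0;                 /* intervals too irregular to trust */
            break;
        }
    }
    p->next_predicted = avg ? p->last_stamp + avg : 0;
}

/* Earliest prediction in the cluster wins; else the cluster's first CPU.
 * `cpus` must have an entry for every CPU set in `cluster_mask`. */
static int pick_affinity_cpu(const struct cpu_pred *cpus, uint32_t cluster_mask)
{
    int best = -1;
    int64_t best_stamp = INT64_MAX;

    for (int cpu = 0; cpu < 32; cpu++) {
        if (!(cluster_mask & (UINT32_C(1) << cpu)))
            continue;
        if (cpus[cpu].next_predicted && cpus[cpu].next_predicted < best_stamp) {
            best_stamp = cpus[cpu].next_predicted;
            best = cpu;
        }
    }
    if (best == -1) {
        for (int cpu = 0; cpu < 32; cpu++)
            if (cluster_mask & (UINT32_C(1) << cpu))
                return cpu;
    }
    return best;
}

/* Interrupts per second over `jiffies_delta`; returns 0 instead of dividing by 0. */
static unsigned long irq_rate(unsigned long count_delta, unsigned long jiffies_delta,
                              unsigned long hz)
{
    return jiffies_delta ? count_delta * hz / jiffies_delta : 0;
}
```

Because the deltas start at zero, no prediction is made until four regular intervals have been recorded; one irregular interval then clears it again.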

Has there been any progress on the memory-barrier problems that occur when this patch is used on the VIM3 Pro?

Thanx
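One plausible source of memory-ordering trouble in the patch: the IPI side writes fix_overflowed, issues mb(), then sets fix_done, but the waiting CPU in amlpmu_irq_fix() reads fix_done and then fix_overflowed with READ_ONCE() only. READ_ONCE() does not order two loads on arm64, so the waiter could see fix_done == 1 together with a stale fix_overflowed; in kernel terms the reader likely needs smp_rmb() after the polling loop, or the pair could use smp_store_release()/smp_load_acquire(). The loop also busy-waits up to max-wait-cnt microseconds in hard-IRQ context and reuses an on-stack call_single_data_t that can still be queued after a timeout. The same publish/consume handshake in portable C11 atomics, as a user-space illustration rather than a tested kernel fix (struct fix_slot and the slot_* helpers are hypothetical):

```c
#include <assert.h>
#include <stdatomic.h>

struct fix_slot {
    atomic_int overflowed;           /* payload, like ci->fix_overflowed */
    atomic_int done;                 /* flag, like ci->fix_done */
};

/* Waiter, before sending the IPI: re-arm the slot. */
static void slot_reset(struct fix_slot *s)
{
    atomic_store_explicit(&s->overflowed, 0, memory_order_relaxed);
    atomic_store_explicit(&s->done, 0, memory_order_relaxed);
}

/* IPI handler: write the payload, then release the flag
 * (kernel analogue: WRITE_ONCE + smp_store_release(&ci->fix_done, 1)). */
static void slot_publish(struct fix_slot *s, int overflowed)
{
    atomic_store_explicit(&s->overflowed, overflowed, memory_order_relaxed);
    atomic_store_explicit(&s->done, 1, memory_order_release);
}

/* Waiter: acquire-load the flag so the payload read below cannot observe a
 * value older than the one published (kernel analogue: smp_load_acquire).
 * Returns the payload, or -1 if the flag was not set within max_polls. */
static int slot_wait(struct fix_slot *s, long max_polls)
{
    for (long i = 0; i < max_polls; i++) {
        if (atomic_load_explicit(&s->done, memory_order_acquire))
            return atomic_load_explicit(&s->overflowed, memory_order_relaxed);
    }
    return -1;
}
```

Whether the ordering issue alone explains the VIM3 Pro problems is untested; the timeout path and the on-stack csd reuse are separate candidates.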