Software

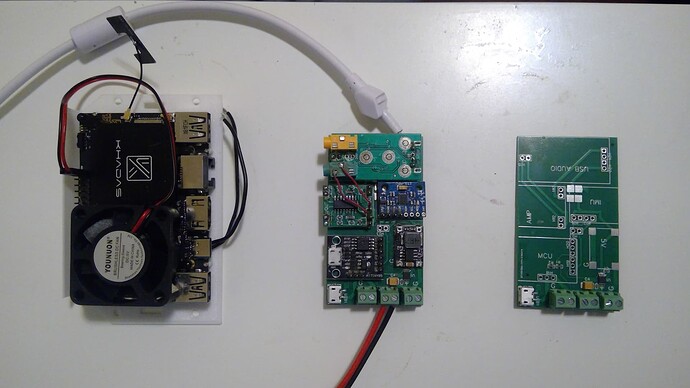

Fig… The GitHub page for the software

For the software, I wanted to build something modular, so that other people can add functions in the future. I would say that I am just an average programmer. Most of my experience is in Python, but I have also worked with Java, C++, and Linux.

You can see a very basic version of the software at this GitHub link: lordNil/lilium: Code for Robotic Brain V1 (github.com). This code is just the foundation structure and has very few functions implemented. I plan to release different robot brains as forks of this project, and you can also build your own brain as a fork.

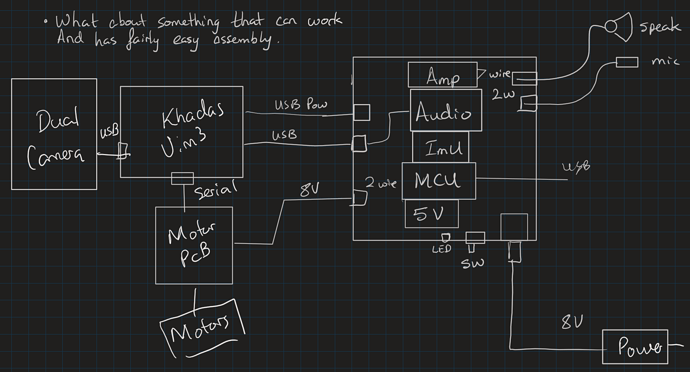

The main file, which you run with Python, is Core.py. It uses Python's multiprocessing module to start the following processes: Sound, Motion, UI, and Vision.

The reason for this is that each process can run CPU, IO, or NPU tasks at the same time without stalling the entire program. Each process lives in its own file: Sound.py, Motion.py, etc.

The core also manages a shared data object that is synchronized between all the different processes in real time.
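
As a rough sketch of this structure (not the actual Core.py), the example below starts one process per subsystem and gives them a shared dictionary through `multiprocessing.Manager`. The `worker` function, the heartbeat counter, and the choice of a `Manager` dict are assumptions for illustration; the real code may synchronize its data differently.

```python
import multiprocessing as mp
import time


def worker(name, shared, steps=3):
    """Stand-in for Sound/Motion/UI/Vision: publish a heartbeat counter."""
    for i in range(1, steps + 1):
        shared[name] = i          # visible to every other process
        time.sleep(0.01)


def run_core(names=("Sound", "Motion", "UI", "Vision")):
    """Start one process per subsystem and return the final shared state."""
    with mp.Manager() as manager:
        shared = manager.dict()   # proxy object synchronized across processes
        procs = [mp.Process(target=worker, args=(n, shared)) for n in names]
        for p in procs:
            p.start()
        for p in procs:
            p.join()
        return dict(shared)       # copy out before the manager shuts down


if __name__ == "__main__":
    print(run_core())
```

Because each subsystem is its own process, a slow task in one of them (for example a long speech request) does not block the others.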

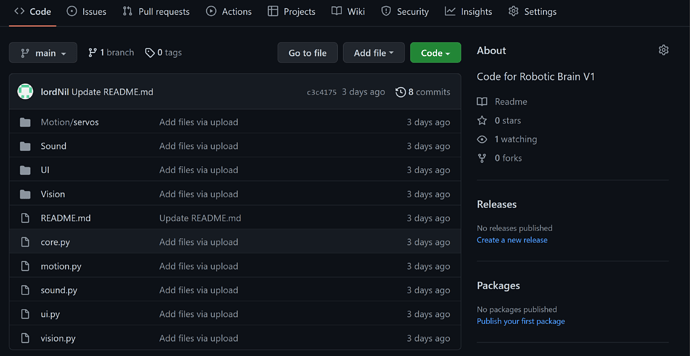

Code Details for Vim3

The following section goes into detail about the actual code running on the Vim3. You can see a version of this code here: lordNil/lilium-Khadas-Vim3 (github.com)

Motion

This section includes the motion.py file and the motion folder, which is used to store data. It mainly contains the functions needed to control the servo motors, and it also manages the data for all 28 motors. The motors are smart servos, so you can control their speed, acceleration, torque, and position. You can even reset the zero position of a motor, which is very convenient for calibration. Each motor has an ID number, and to control it you simply read or write data to its registers (using the read or write function). The motor bus operates at 1 MHz, and communicating with all motors takes around 100 ms, which is not too bad.
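
To put those numbers in perspective, here is a small back-of-the-envelope calculation. It only uses the two figures from the paragraph above (28 motors, about 100 ms per full pass) and assumes the time is spread evenly across the motors.

```python
NUM_MOTORS = 28          # from the text
FULL_CYCLE_S = 0.100     # ~100 ms to talk to every motor, from the text

per_motor_ms = FULL_CYCLE_S / NUM_MOTORS * 1000
update_rate_hz = 1 / FULL_CYCLE_S

print(f"{per_motor_ms:.1f} ms per motor, {update_rate_hz:.0f} full updates per second")
```

So each motor gets roughly 3.6 ms of bus time, and the whole body can be refreshed about 10 times per second, which is enough for idle animations but slow for fast closed-loop control.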

A bunch of other basic functions are written such as:

- function to mimic breathing

- random head/mouth/body movement

- head movement to locate object

- recording animations and saving them

- playing animations
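
As an example of how small these functions can be, a breathing motion can be sketched as a slow sine wave added to a servo's rest position. The function name, the 4 second period, and the amplitude of 20 position units are made up for illustration and are not taken from motion.py.

```python
import math


def breathing_offset(t, period_s=4.0, amplitude=20):
    """Offset (in servo position units) to add to a chest servo's rest pose at time t."""
    return round(amplitude * math.sin(2 * math.pi * t / period_s))


# One breath sampled once per second: rest, inhale peak, rest, exhale peak.
print([breathing_offset(t) for t in (0, 1, 2, 3)])  # [0, 20, 0, -20]
```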

If you run the motion.py file by itself, it enters a command mode where you can give commands to the motors, such as pinging certain motors or recording animations.

To be honest, this motion system is fairly basic for now, but it allows me to make a bunch of idle animations for the robot and code some simple abilities.
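
A minimal sketch of recording and playing back an animation could look like the following. The JSON keyframe format, the function names, and the `write_position(motor_id, position)` callback are assumptions for illustration; the files in the motion folder may be stored differently.

```python
import json
import time


def save_animation(path, frames):
    """frames: list of {"t": seconds, "pose": {motor_id: position}}."""
    with open(path, "w") as f:
        json.dump(frames, f)


def load_animation(path):
    with open(path) as f:
        frames = json.load(f)
    # JSON turns integer keys into strings, so convert motor IDs back to int.
    return [
        {"t": fr["t"], "pose": {int(k): v for k, v in fr["pose"].items()}}
        for fr in frames
    ]


def play_animation(frames, write_position, sleep=time.sleep):
    """Wait until each frame's timestamp, then send its pose motor by motor."""
    previous_t = 0.0
    for frame in frames:
        sleep(max(0.0, frame["t"] - previous_t))
        previous_t = frame["t"]
        for motor_id, position in frame["pose"].items():
            write_position(motor_id, position)
```

Passing `write_position` in as a callback keeps the playback code independent of the servo bus, so the same animation file can be replayed against real motors or a test stub.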

I actually experimented with implementing DeepMimic for this robot before, which uses reinforcement learning to let the robot learn how to walk by imitating examples of human walking. This was partially successful in simulation, but it was rather tricky to get working right. In theory, this can be used to train different gaits and also allow transitions between different modes of motion.

It would also be nice to make animations in a program like Blender and then convert them to robot animations. Another possible upgrade is to incorporate software for robot arms into the robot. This would first require some decent object recognition and depth perception, and then some sort of inverse kinematics algorithm to control the arms and manipulate the objects. Alternatively, one could take a machine learning approach and train neural networks on object-grabbing examples so that the robot learns manipulation itself. Anyway, I don't really have the time to investigate this further right now.
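
To give an idea of what the kinematics part involves, here is the textbook solution for a two-joint arm moving in a plane. This is only an illustration of the idea, not code from the project, and a real robot arm has more joints and joint limits to respect.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that place the hand at (x, y).

    l1 and l2 are the upper-arm and forearm lengths. Raises ValueError if the
    target is out of reach.
    """
    d2 = x * x + y * y
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Forward kinematics, used here to check the result."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

For example, with two links of length 1 and a target of (1, 1), the solver returns a shoulder angle of 0 and an elbow angle of 90 degrees.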

Sound

For the sound, a bunch of things were implemented to get a few chatbots working. There are also some basic sound functions, such as playing sound files and modifying their pitch.

The chatbot pipeline is composed of a speech-to-text (STT) module, then a chatbot module, then a text-to-speech (TTS) module. The STT and TTS modules from Google seem to work the best for now, but I really do want a TTS system that is able to produce a more attractive female voice. Google's voice is a bit robotic. Luckily, many people are developing more advanced TTS systems using the latest ML methods, and I hope to use their work in the future.
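
The three-stage pipeline can be sketched as three interchangeable functions. The stand-ins below are placeholders, not the Google modules or any real chatbot; the point is only that each stage can be swapped without touching the others.

```python
def chat_turn(audio, stt, chatbot, tts):
    """Run one conversation turn: audio in, audio out."""
    text = stt(audio)        # speech to text
    reply = chatbot(text)    # text to reply text
    return tts(reply)        # reply text to speech


# Placeholder stages so the sketch runs without any external service.
def fake_stt(audio):
    return audio.decode("utf-8")


def echo_bot(text):
    return f"You said: {text}"


def fake_tts(text):
    return text.encode("utf-8")


print(chat_turn(b"hello", fake_stt, echo_bot, fake_tts))  # b'You said: hello'
```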

The first chatbot is an AIML chatbot and is completely pre-scripted. It was made a while ago (2008?) and was first online as the Mitsuku chatbot. I customized the script for my AIML chatbot and it works alright. It runs quite fast on the CPU, but the downside is that it cannot really handle synonyms. Thus, your sentence has to contain specific words in order for the chatbot to understand what you said.
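
The synonym problem is easier to see with a toy example. The sketch below imitates the way this kind of scripted chatbot matches an input sentence against fixed word patterns with a `*` wildcard. It is not a real AIML interpreter, and the two rules are invented for illustration.

```python
import re

# Hypothetical rules: (pattern, reply template). "*" matches one or more words.
RULES = [
    ("WHAT IS YOUR NAME", "I am a robot."),
    ("I LIKE *", "Why do you like {0}?"),
]


def normalize(text):
    """Uppercase the sentence and drop punctuation before matching."""
    return re.sub(r"[^A-Z0-9 ]", "", text.upper()).strip()


def respond(text, rules=RULES, default="I do not understand."):
    sentence = normalize(text)
    for pattern, template in rules:
        regex = "^" + re.escape(pattern).replace(r"\*", "(.+)") + "$"
        match = re.match(regex, sentence)
        if match:
            return template.format(*match.groups())
    return default


print(respond("What is your name?"))    # I am a robot.
print(respond("What are you called?"))  # I do not understand.
```

Both questions mean the same thing, but only the one that uses the exact scripted words gets an answer.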

The other chatbot used is GPT-3, which is built and run by OpenAI (a company Elon Musk co-founded). This chatbot is quite interesting because it is one of the latest AI language models. It uses the transformer architecture, and what researchers found is that as you make the model bigger and bigger, the results keep getting better, although with diminishing returns rather than linearly. So GPT-1 was big, GPT-2 was huge, and GPT-3 is massive. The model has 175 billion parameters, so the weights alone take up hundreds of gigabytes, and its training text was filtered from roughly 45 TB of raw data. The training itself reportedly took months and cost over $10 million. The end result is a very nice model that mainly does text completion: you give it some existing text, and GPT-3 generates new text that expands on it. Thus, for chatbot applications, we typically feed it an introduction like "this is a talk between X and B", followed by the conversation history, and GPT-3 generates the next response to continue the story.

Sadly, it is not exactly a self-embodied AI entity, but it is able to generate very smooth text and can hold conversations about all sorts of complex topics. The main drawback is the lack of local memory, such as knowledge about itself and its conversation partner.

I also implemented a few other things in the sound section, such as an e-book reader so that the robot can read you books.
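
The prompt described above can be assembled with a few lines of string handling. This sketch only builds the text that would be sent for completion. It does not call any API, and the function and parameter names are my own.

```python
def build_prompt(intro, history, user_name, bot_name, user_message):
    """Build a text-completion prompt from an intro line and the chat history.

    history is a list of (speaker, text) pairs. The prompt ends with the bot's
    name and a colon so that the model continues with the bot's next line.
    """
    lines = [intro, ""]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"{user_name}: {user_message}")
    lines.append(f"{bot_name}:")
    return "\n".join(lines)


prompt = build_prompt(
    "This is a talk between X and B.",
    [("X", "Hello!"), ("B", "Hi, nice to meet you.")],
    user_name="X",
    bot_name="B",
    user_message="What can you do?",
)
print(prompt)
```

Since the model itself remembers nothing between calls, the conversation history has to be sent again with every request, and whatever is left out of the prompt is forgotten.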

Vision

For the vision system, a few things were implemented. Using the Khadas ksnn modules, I was able to implement YOLOv3 face detection and also YOLOv3 object detection. YOLO algorithms are nice because they both name the object and locate where it is in the image. However, the main drawback is that the model only knows about 80 objects (it is trained on the COCO dataset), whereas classifiers trained on ImageNet can recognize 1000 object classes.
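
Because the detector reports where an object is, its bounding box can be turned into a "how far off-center is it" signal, which is what a head-tracking function needs. The sketch below assumes a box given as pixel corners `(x_min, y_min, x_max, y_max)`; the actual output format of the ksnn model is not shown here and may differ.

```python
def box_center_offset(box, image_width, image_height):
    """Return (dx, dy) of the box center relative to the image center.

    Both values are in the range -1 to 1, where (0, 0) means the object is
    centered, dx > 0 means it is to the right, and dy > 0 means it is lower.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2
    cy = (y_min + y_max) / 2
    dx = (cx - image_width / 2) / (image_width / 2)
    dy = (cy - image_height / 2) / (image_height / 2)
    return dx, dy


# A face in the upper right of a 640x480 frame.
print(box_center_offset((480, 0, 640, 240), 640, 480))  # (0.75, -0.5)
```

A head controller could then turn the neck a little in the direction of `dx` and `dy` on every frame until both values are close to zero.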

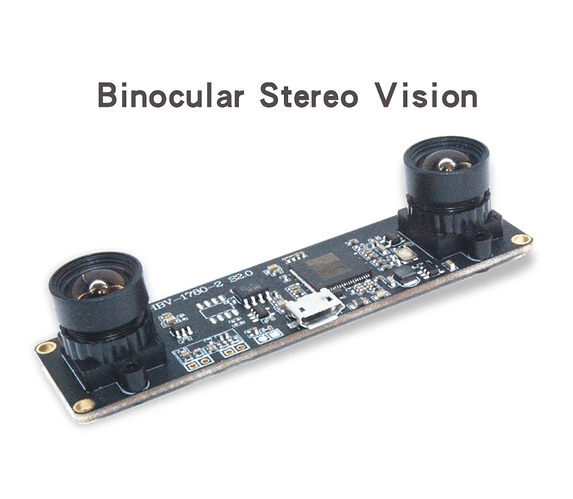

Some other ML models were implemented in another version of the brain. There is a nice stereo depth map model that generates 3D depth from the two images, one from each eye. A human keypoint model was also implemented to recognize human limbs.

UI

Finally, for the UI, some code was implemented so that Python starts its own web server and hosts its own website on your network. This website contains all the robot control functions, and you can access it from any browser on your network.

While this is cool, there are some major drawbacks: the user needs to access the Vim3 via HDMI and enter their Wi-Fi password. Furthermore, they actually need to set a static IP address (I was not able to get Linux to set a static IP address by default).

Initially, I just wanted the UI to be an app that you run on your phone and connect to the Vim3 via Bluetooth. I actually did write the app with Flutter, and it does work. The main issue is that I could not get Bluetooth to auto-accept connections on the Vim3. I tried quite a few things using the installed Linux Bluetooth controllers, but they did not work. I could also buy a Bluetooth module and connect it to the serial port, but I needed the serial port for motor control. Anyway, I sort of gave up on this plan.

Another UI was made, which is a simple GUI. This is just a basic pop-up window that shows the commands.
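
A web UI like this can be built with nothing but the Python standard library. The sketch below is an assumption about the general shape, not the project's actual server: the URL paths, replies, and port 8000 are all hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical command URLs and the text they answer with.
COMMANDS = {"/breathe": "breathing started", "/stop": "stopped"}


def handle_path(path):
    """Map a requested URL path to (HTTP status, response text)."""
    if path == "/":
        return 200, "Robot control page"
    if path in COMMANDS:
        return 200, COMMANDS[path]
    return 404, "unknown command"


class ControlHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = handle_path(self.path)
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(port=8000):
    # "0.0.0.0" listens on every interface so other devices on the network can connect.
    return HTTPServer(("0.0.0.0", port), ControlHandler)
```

Calling `make_server().serve_forever()` on the robot would then make the page reachable from a browser at the robot's IP address and port, which is why a fixed address matters.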

Conclusion

Some decent software was written for the robot, and I think all of the basic functions are implemented. I am not a trained software architect, so perhaps someone can comment on and improve the main parts of the code. Thanks to the modular structure, it should be fairly easy to add extra functions to any one section.

Finally, I have made a YouTube video to demonstrate the software functions. You can see it here:

Lilium Robot Cat Girl - Chatbot and Software - YouTube