Hello @Arjun_Gupta ,

Could you provide the code you use to get correct inference results with the ONNX model?

Hi

I don't have code for the ONNX model. Right now I am using the default YOLOv5 PyTorch model with its default outputs.

Could you suggest a way to run a YOLO model on the VIM3?

@Arjun_Gupta Maybe you can check out the ArmNN examples.

It doesn’t utilize the NPU…

I am able to run inference on the GPU and CPU…

@Arjun_Gupta An NPU version also exists: https://github.com/sravansenthiln1/armnn_tflite/tree/main/yolov8n

But the latest YOLO models do not run as fast as expected on the NPU.

Hello @Arjun_Gupta ,

I ran your model, and I think your post-processing is wrong.

The output shape is (1, 84, 8400). 8400 is the number of predicted boxes, and 84 is 4 (box) + 80 (classes). Because the post-processing is wrong, the "class number" in your log is actually box information.

The right way is to reshape the output into (1, 84, 8400), go through every box to find the ones whose confidence is greater than a threshold, and then apply non-maximum suppression.
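A minimal NumPy sketch of that post-processing, assuming the standard Ultralytics ONNX export layout: the first 4 rows are box centre x, centre y, width and height in input pixels, and the remaining 80 rows are already class probabilities (check this against your own export). The NMS here is hand-written and class-agnostic for brevity; `postprocess_84` and `nms` are hypothetical helper names.

```python
import numpy as np

def nms(boxes, scores, iou_thresh=0.45):
    """Greedy non-maximum suppression; boxes are (N, 4) as x1, y1, x2, y2."""
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Intersection of the best box with every remaining box
        xx1 = np.maximum(boxes[best, 0], boxes[rest, 0])
        yy1 = np.maximum(boxes[best, 1], boxes[rest, 1])
        xx2 = np.minimum(boxes[best, 2], boxes[rest, 2])
        yy2 = np.minimum(boxes[best, 3], boxes[rest, 3])
        inter = np.clip(xx2 - xx1, 0, None) * np.clip(yy2 - yy1, 0, None)
        area_best = (boxes[best, 2] - boxes[best, 0]) * (boxes[best, 3] - boxes[best, 1])
        area_rest = (boxes[rest, 2] - boxes[rest, 0]) * (boxes[rest, 3] - boxes[rest, 1])
        iou = inter / (area_best + area_rest - inter + 1e-9)
        order = rest[iou <= iou_thresh]  # drop boxes that overlap the kept one too much
    return keep

def postprocess_84(output, conf_thresh=0.25, iou_thresh=0.45):
    """output: flat or (1, 84, 8400) array -> list of (x1, y1, x2, y2, score, class_id)."""
    pred = np.asarray(output, dtype=np.float32).reshape(84, -1).T  # (8400, 84), one row per box
    cx, cy, w, h = pred[:, 0], pred[:, 1], pred[:, 2], pred[:, 3]
    class_scores = pred[:, 4:]                                      # (8400, 80)
    class_ids = np.argmax(class_scores, axis=1)
    scores = class_scores[np.arange(len(pred)), class_ids]
    mask = scores > conf_thresh                                     # keep confident boxes only
    boxes = np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)[mask]
    scores, class_ids = scores[mask], class_ids[mask]
    keep = nms(boxes, scores, iou_thresh)
    return [(*boxes[k].tolist(), float(scores[k]), int(class_ids[k])) for k in keep]
```

Ultralytics itself runs NMS per class by default; a class-aware variant would simply call `nms` once per class ID.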

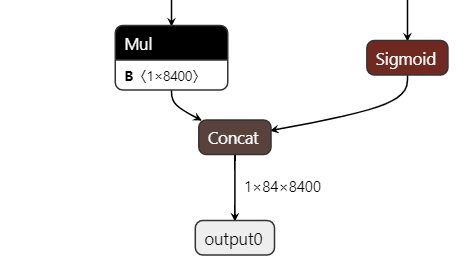

Another problem is the last structure in the model. This structure first appeared in YOLOv8.

If this is too complex for you, you can try C++. You can refer to the following docs: the first covers converting the model, the second covers running inference on the VIM3.

NPU SDK Usage [Khadas Docs]

Application Source Code [Khadas Docs]

If you still want to use KSNN, you will need to wait a few weeks. We are planning to add a KSNN YOLOv8 demo.

@Louis-Cheng-Liu

Is the last structure also an issue on yolo V5 and V3 models?

@Louis-Cheng-Liu

@numbqq

i used onnx modifier

[GitHub - ZhangGe6/onnx-modifier: A tool to modify ONNX models in a visualization fashion, based on Netron and Flask.]

to get rid of the tree which was told to be incompatible

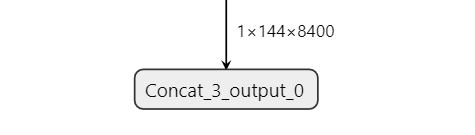

but my output shape was changed to 1x144x8400

which I reshaped to 84, 8400 since according to netron it is 1x144x8400

```python
import numpy as np
import os
import argparse
import sys
from ksnn.api import KSNN
from ksnn.types import *
import cv2 as cv

classes = [
    "person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck",
    "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench",
    "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra",
    "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",
    "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
    "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange",
    "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa",
    "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse",
    "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink",
    "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier",
    "toothbrush"
]

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--library", help="Path to C static library file")
    parser.add_argument("--model", help="Path to nbg file")
    parser.add_argument("--level", help="Information printer level: 0/1/2")
    args = parser.parse_args()

    if args.model:
        if not os.path.exists(args.model):
            sys.exit('Model \'{}\' not exist'.format(args.model))
        model = args.model
    else:
        sys.exit("NBG file not found !!! Please use format: --model")

    if args.library:
        if not os.path.exists(args.library):
            sys.exit('C static library \'{}\' not exist'.format(args.library))
        library = args.library
    else:
        sys.exit("C static library not found !!! Please use format: --library")

    if args.level == '1' or args.level == '2':
        level = int(args.level)
    else:
        level = 0

    resnet50 = KSNN('VIM3')
    print(' |--- KSNN Version: {} +---| '.format(resnet50.get_nn_version()))
    print('Start init neural network ...')
    resnet50.nn_init(library=library, model=model, level=level)
    print('Done.')

    cap = cv.VideoCapture(1)
    assert cap.isOpened()
    color = (0, 255, 0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv.resize(frame, (640, 480))
        outputs = resnet50.nn_inference(frame, platform='ONNX', reorder='2 1 0', output_format=output_format.OUT_FORMAT_FLOAT32)
        output = outputs[0]
        a = np.resize(output, (84, 8400))
        print(a)
        cv.imshow('Camera Stream', frame)
        if cv.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv.destroyAllWindows()
```

Output:

```text
[ 8.058984 4.6051335 2.8142483 ... 0.25584075 0.6396019
  0.7675222 ]
 [ 7.931063 7.5473022 4.7330537 ... 0.5116815 1.023363
  1.023363 ]
 [ 3.5817704 7.163541 6.523939 ... 1.023363 1.6629648
  1.4071242 ]
 ...
 [-12.280355 -12.280355 -12.152435 ... -14.966683 -14.455002
  -15.862126 ]
 [-14.071241 -14.199162 -14.455002 ... -12.664117 -12.408277
  -14.071241 ]
 [-12.024515 -11.896595 -11.896595 ... -13.175798 -12.792037
  -14.710843 ]]
[[ 7.6752224 4.221372 2.8142483 ... 0. 0.3837611
  0.6396019 ]
 [ 7.5473022 7.2914615 4.7330537 ... 0. 0.5116815
  0.5116815 ]
 [ 3.3259296 6.9077 6.523939 ... 0.12792037 0.6396019
  0.6396019 ]
 ...
 [-13.047878 -13.303719 -13.303719 ... -14.582923 -14.838763
  -15.222525 ]
 [-14.327082 -14.582923 -14.710843 ... -13.047878 -13.175798
  -13.8154 ]
 [-12.792037 -12.792037 -12.792037 ... -13.55956 -13.68748
  -14.455002 ]]
[[ 7.6752224 4.3492928 2.3025668 ... -0.5116815 -0.5116815
  -0.25584075]
 [ 7.5473022 7.6752224 4.6051335 ... -0.7675222 -0.6396019
  -0.25584075]
 [ 3.0700889 7.2914615 6.77978 ... -0.6396019 -0.3837611
  -0.3837611 ]
 ...
 [-12.280355 -12.664117 -13.047878 ... -13.68748 -14.455002
  -13.8154 ]
 [-13.943321 -14.327082 -14.966683 ... -12.536197 -13.047878
  -12.792037 ]
 [-11.768675 -11.768675 -12.152435 ... -12.280355 -12.792037
  -12.792037 ]]
[[ 7.931063 4.093452 2.046726 ... -0.8954426 -0.7675222
  -0.3837611]
 [ 7.931063 7.2914615 3.8376112 ... -1.1512834 -0.7675222
  -0.3837611]
 [ 3.45385 6.9077 6.1401777 ... -0.8954426 -0.5116815
  -0.5116815]
 ...
 [-12.536197 -12.792037 -13.047878 ... -14.071241 -14.966683
  -14.455002 ]
 [-14.327082 -14.838763 -15.0946045 ... -12.919958 -13.43164
  -13.43164 ]
 [-12.536197 -12.792037 -13.175798 ... -12.536197 -13.047878
  -13.43164 ]]
```

still all the output is non-integer

my models:

https://drive.google.com/drive/folders/18bvIog9AebAR-YWToUA5ucEVK1LG0QwW?usp=sharing

Hello @Arjun_Gupta ,

> Is the last structure also an issue on yolo V5 and V3 models?

The problem is in this structure itself, so whatever the main network is, the model has this problem.

> still all the output is non-integer

Emmm, do you think the model can output the class index directly? It can't. The model outputs a confidence for each class. Taking your model as an example, 144 is 64 + 80: the 64 values are the box location information and the 80 values are the class confidences. You need to find the maximum confidence (after applying sigmoid) among the 80 classes; the index of that maximum is the final class. For example, if the class confidences are [0.1, 0.7, 0.2], the class is 1.
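A small sketch of that step, assuming the 80 class rows have already been sliced out of the (144, 8400) output (rows 64 onwards); `classify` is a hypothetical helper name. Because sigmoid is monotonic, the argmax is the same before and after it; the sigmoid matters when you compare the confidence against a threshold.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def classify(cls_logits):
    """cls_logits: (num_classes, N) raw class outputs -> (class_ids, confidences), length N each."""
    probs = sigmoid(np.asarray(cls_logits, dtype=np.float32))
    class_ids = np.argmax(probs, axis=0)                          # best class for each box
    confidences = probs[class_ids, np.arange(probs.shape[1])]     # its confidence
    return class_ids, confidences

# The example from the post: confidences [0.1, 0.7, 0.2] for one box give class 1
print(np.argmax([0.1, 0.7, 0.2]))  # 1
```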

@Louis-Cheng-Liu

Oh, OK.

I thought that since the shape you said to reshape to was 84x8400, the 144x8400 tensor already contained the class.

Sorry, I'm new to AI.

@Louis-Cheng-Liu

@numbqq

@Electr1

```python
import numpy as np
import os
import argparse
import sys
from ksnn.api import KSNN
from ksnn.types import *
import cv2 as cv

cam_index = 0

names = {
    0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck',
    8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench',
    14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear',
    22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase',
    29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat',
    35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle',
    40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana',
    47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza',
    54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table',
    61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone',
    68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock',
    75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'
}

def draw_bounding_box(frame, box, class_index, confidence):
    pass

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--library", help="Path to C static library file")
    parser.add_argument("--model", help="Path to nbg file")
    parser.add_argument("--level", help="Information printer level: 0/1/2")
    args = parser.parse_args()

    if args.model:
        if not os.path.exists(args.model):
            sys.exit('Model \'{}\' not exist'.format(args.model))
        model = args.model
    else:
        sys.exit("NBG file not found !!! Please use format: --model")

    if args.library:
        if not os.path.exists(args.library):
            sys.exit('C static library \'{}\' not exist'.format(args.library))
        library = args.library
    else:
        sys.exit("C static library not found !!! Please use format: --library")

    if args.level == '1' or args.level == '2':
        level = int(args.level)
    else:
        level = 0

    resnet50 = KSNN('VIM3')
    print(' |--- KSNN Version: {} +---| '.format(resnet50.get_nn_version()))
    print('Start init neural network ...')
    resnet50.nn_init(library=library, model=model, level=level)
    print('Done.')

    cap = cv.VideoCapture(cam_index)
    assert cap.isOpened()
    color = (0, 255, 0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv.resize(frame, (640, 480))
        outputs = resnet50.nn_inference(frame, platform='ONNX', reorder='2 1 0', output_format=output_format.OUT_FORMAT_FLOAT32)
        outputs = outputs[0]
        predictions = np.array(outputs).reshape(8400, 144)
        boxes = predictions[:, :64]
        confidences = predictions[:, 64:]
        confidences = 1 / (1 + np.exp(-confidences))
        predicted_classes = np.argmax(confidences, axis=1)
        predicted_class_names = [names[class_index] for class_index in predicted_classes]
        cv.imshow('Camera Stream', frame)
        if cv.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv.destroyAllWindows()
```

I'm unable to understand why there are 64 elements for the box location. If the model gives confidences for 80 classes, shouldn't there be 80 boxes?

Also, the model output looks like

[[actual output list of 1209600 elements]]

which works out to 144x8400.

Please tell me why there are 64 elements for the boxes. It would be great if you could help with post-processing the output…

Hello @Arjun_Gupta ,

Yes, 1209600=144×8400.

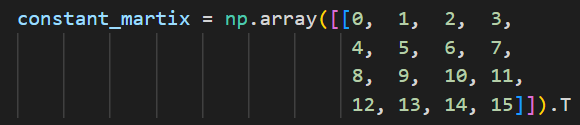

The 64 elements need to be decoded into 4 elements. This part describes the decoding process.

First, divide them into 4 parts of 16 elements each. Then apply softmax to each part and multiply it by a constant matrix: the values 0-15, shape (1, 16). This gives you 4 elements. However, these 4 elements are only distances from the grid cell to the box edges (in units of the stride), so, third, add the location information. Name them x1, y1, x2, y2 in order. The formulas are as follows:

box_left = (j + 0.5 - x1) * stride / input.shape[1]

box_top = (i + 0.5 - y1) * stride / input.shape[0]

box_right = (j + 0.5 + x2) * stride / input.shape[1]

box_bottom = (i + 0.5 + y2) * stride / input.shape[0]
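The decoding of a single prediction described above can be sketched in NumPy as follows. This assumes the 64 values are stored as four consecutive groups of 16 (x1 bins, then y1, x2, y2) and a 640×640 input; `decode_cell` is a hypothetical helper name. The result is normalized to [0, 1], so multiply it by the image width and height to get pixel coordinates.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - np.max(x))  # subtract the max for numerical stability
    return e / e.sum()

def decode_cell(box_raw, i, j, stride, input_w=640, input_h=640):
    """box_raw: the 64 box values of one prediction -> normalized (left, top, right, bottom)."""
    parts = np.asarray(box_raw, dtype=np.float32).reshape(4, 16)  # four 16-bin distributions
    bins = np.arange(16, dtype=np.float32)                         # the constant 0..15 matrix
    # Softmax each part separately, then take the expected value over the 16 bins
    x1, y1, x2, y2 = [float(softmax(p) @ bins) for p in parts]
    left = (j + 0.5 - x1) * stride / input_w
    top = (i + 0.5 - y1) * stride / input_h
    right = (j + 0.5 + x2) * stride / input_w
    bottom = (i + 0.5 + y2) * stride / input_h
    return left, top, right, bottom
```

With all 64 values equal, every part becomes a uniform distribution, so each distance is 7.5 bins.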

i and j are the coordinates in the feature map, and stride is the downsampling ratio of the convolutions. YOLO uses three strides, 8, 16 and 32, so it has three feature maps of different sizes. For your model the input is 640×640, so the map sizes are 640×640 divided by 8, 16 and 32, that is 80×80, 40×40 and 20×20. For example, for the cell at [i, j] = [9, 0] in the 40×40 map:

box_left = (0 + 0.5 - x1) * 16 / 640

box_top = (9 + 0.5 - y1) * 16 / 640

box_right = (0 + 0.5 + x2) *16 / 640

box_bottom = (9 + 0.5 + y2) *16 / 640

To know which map and which position an element belongs to: 8400 = 80×80 + 40×40 + 20×20, so [:, :6400] is the 80×80 map, [:, 6400:8000] is the 40×40 map and [:, 8000:] is the 20×20 map. Within each map the elements are ordered row by row, with j changing fastest. For example, elements 0-79 are [0, 0] to [0, 79], and 80-159 are [1, 0] to [1, 79].
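A sketch of that index arithmetic, assuming a 640×640 input and strides 8, 16, 32; `locate` is a hypothetical helper name.

```python
def locate(index, input_size=640, strides=(8, 16, 32)):
    """Map a column index of the (144, 8400) output to (stride, i, j)."""
    offset = 0
    for stride in strides:
        size = input_size // stride              # 80, 40, 20 for a 640 input
        count = size * size
        if index < offset + count:
            i, j = divmod(index - offset, size)  # row-major: j changes fastest
            return stride, i, j
        offset += count
    raise IndexError(f"index {index} is beyond the {offset} predictions")
```

For example, `locate(6400 + 9 * 40)` returns `(16, 9, 0)`, the cell used in the worked formulas above.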

A suggestion: your model input is 640×640, and KSNN will force-resize your input to this size. To avoid distortion, I suggest padding the input before passing it to KSNN.
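One simple way to pad, sketched with NumPy only. The helper name `pad_to_square` and the gray fill value 114 are assumptions, and Ultralytics' own letterbox centres the image rather than padding one side. Padding only at the bottom and right keeps the top-left corner fixed, so a normalized box multiplied by the padded side length maps straight back to pixels in the original frame.

```python
import numpy as np

def pad_to_square(frame, fill=114):
    """Pad an H x W (x C) image at the bottom/right so it becomes square, keeping its aspect ratio."""
    h, w = frame.shape[:2]
    side = max(h, w)
    padded = np.full((side, side) + frame.shape[2:], fill, dtype=frame.dtype)
    padded[:h, :w] = frame  # original pixels stay in the top-left corner
    return padded
```

A 640×480 camera frame becomes 640×640, so KSNN's resize to 640×640 no longer stretches it.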

One mistake: your reshape must match the model's output shape.

```diff
- predictions = np.array(outputs).reshape(8400, 144)
+ predictions = np.array(outputs).reshape(144, 8400)
```
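Why this matters: `reshape` only reinterprets the flat memory order, so reshaping to (8400, 144) is not the same as transposing. A toy example with 3 channels and 4 predictions standing in for 144 and 8400; it also shows that `np.resize` silently truncates or repeats data instead of raising an error on a size mismatch.

```python
import numpy as np

# Toy stand-in for the model output: 3 channels x 4 predictions, stored channel-major
flat = np.arange(12)
right = flat.reshape(3, 4)   # like reshape(144, 8400): one row per channel
wrong = flat.reshape(4, 3)   # like reshape(8400, 144): rows mix unrelated values
print(right[:, 0])           # all channels of prediction 0 -> [0 4 8]
print(wrong[0])              # NOT prediction 0: values of channel 0 for three predictions -> [0 1 2]
print(right.T[0])            # transpose the correct reshape to get per-prediction rows -> [0 4 8]
# np.resize never complains about a size mismatch; here it silently drops 4 values
print(np.resize(flat, (2, 4)).size)  # 8
```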

Hello @Arjun_Gupta ,

Maybe I did not explain it clearly. You can try searching for 'YOLOv8 postprocess' to find other explanations.

The YOLOv8 KSNN demo will be released this week. If this is too complex for you, you can wait for the demo.

Hello @Arjun_Gupta ,

Sorry, my explanation had a mistake. I have corrected it:

```diff
- box_left = (j + 0.5 - x1) / stride
- box_top = (i + 0.5 - y1) / stride
- box_right = (j + 0.5 + x2) / stride
- box_bottom = (i + 0.5 + y2) / stride
+ box_left = (j + 0.5 - x1) * stride / input.shape[1]
+ box_top = (i + 0.5 - y1) * stride / input.shape[0]
+ box_right = (j + 0.5 + x2) * stride / input.shape[1]
+ box_bottom = (i + 0.5 + y2) * stride / input.shape[0]
```

@Louis-Cheng-Liu

This is the code I came up with.

For some reason the x, y, width and height values are on the order of 10^-5. What is causing this?

```python
import numpy as np
import os
import argparse
import sys
from ksnn.api import KSNN
from ksnn.types import *
import cv2 as cv

cam_index = 1

names = {
    0: 'person', 1: 'bicycle', 2: 'car', 3: 'motorcycle', 4: 'airplane', 5: 'bus', 6: 'train', 7: 'truck',
    8: 'boat', 9: 'traffic light', 10: 'fire hydrant', 11: 'stop sign', 12: 'parking meter', 13: 'bench',
    14: 'bird', 15: 'cat', 16: 'dog', 17: 'horse', 18: 'sheep', 19: 'cow', 20: 'elephant', 21: 'bear',
    22: 'zebra', 23: 'giraffe', 24: 'backpack', 25: 'umbrella', 26: 'handbag', 27: 'tie', 28: 'suitcase',
    29: 'frisbee', 30: 'skis', 31: 'snowboard', 32: 'sports ball', 33: 'kite', 34: 'baseball bat',
    35: 'baseball glove', 36: 'skateboard', 37: 'surfboard', 38: 'tennis racket', 39: 'bottle',
    40: 'wine glass', 41: 'cup', 42: 'fork', 43: 'knife', 44: 'spoon', 45: 'bowl', 46: 'banana',
    47: 'apple', 48: 'sandwich', 49: 'orange', 50: 'broccoli', 51: 'carrot', 52: 'hot dog', 53: 'pizza',
    54: 'donut', 55: 'cake', 56: 'chair', 57: 'couch', 58: 'potted plant', 59: 'bed', 60: 'dining table',
    61: 'toilet', 62: 'tv', 63: 'laptop', 64: 'mouse', 65: 'remote', 66: 'keyboard', 67: 'cell phone',
    68: 'microwave', 69: 'oven', 70: 'toaster', 71: 'sink', 72: 'refrigerator', 73: 'book', 74: 'clock',
    75: 'vase', 76: 'scissors', 77: 'teddy bear', 78: 'hair drier', 79: 'toothbrush'
}

def decode_boxes(predictions, input_shape, strides):
    num_classes = 80
    boxes_per_anchor = 4
    predictions = np.array(predictions).reshape(144, 8400)
    decoded_boxes = []
    for i in range(len(predictions)):
        class_confidences = predictions[i][:80]
        box_data = predictions[i][80:]
        class_probs = np.exp(class_confidences) / np.sum(np.exp(class_confidences), axis=-1)
        box_data = np.exp(box_data) / np.sum(np.exp(box_data), axis=-1)
        box_data = box_data.reshape(-1, boxes_per_anchor)
        x_data, y_data, width_data, height_data = box_data.T
        decoded_boxes.append(np.stack([x_data, y_data, width_data, height_data], axis=-1))
    decoded_boxes = np.array(decoded_boxes)

    boxes = []
    for i in range(decoded_boxes.shape[0]):
        for j in range(decoded_boxes.shape[1]):
            box = decoded_boxes[i, j, :]
            x, y, width, height = box
            box_left = (j + 0.5 - x) * strides / input_shape[1]
            box_top = (i + 0.5 - y) * strides / input_shape[0]
            box_right = (j + 0.5 + width) * strides / input_shape[1]
            box_bottom = (i + 0.5 + height) * strides / input_shape[0]
            boxes.append([box_left, box_top, box_right, box_bottom])
    return np.array(boxes)

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--library", help="Path to C static library file")
    parser.add_argument("--model", help="Path to nbg file")
    parser.add_argument("--level", help="Information printer level: 0/1/2")
    args = parser.parse_args()

    if args.model:
        if not os.path.exists(args.model):
            sys.exit('Model \'{}\' not exist'.format(args.model))
        model = args.model
    else:
        sys.exit("NBG file not found !!! Please use format: --model")

    if args.library:
        if not os.path.exists(args.library):
            sys.exit('C static library \'{}\' not exist'.format(args.library))
        library = args.library
    else:
        sys.exit("C static library not found !!! Please use format: --library")

    if args.level == '1' or args.level == '2':
        level = int(args.level)
    else:
        level = 0

    resnet50 = KSNN('VIM3')
    print(' |--- KSNN Version: {} +---| '.format(resnet50.get_nn_version()))
    print('Start init neural network ...')
    resnet50.nn_init(library=library, model=model, level=level)
    print('Done.')

    cap = cv.VideoCapture(cam_index)
    assert cap.isOpened()

    while True:
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv.resize(frame, (640, 640))
        outputs = resnet50.nn_inference(frame, platform='ONNX', reorder='2 1 0', output_format=output_format.OUT_FORMAT_FLOAT32)

        # decode boxes
        input_shape = (640, 640)
        strides = 8
        decoded_boxes = decode_boxes(outputs[0], input_shape, strides)

        # bounding boxes
        for box in decoded_boxes:
            box_left, box_top, box_right, box_bottom = box
            color = (0, 255, 0)  # Green color for bounding boxes
            label = "{}".format(names[np.argmax(box)])
            cv.rectangle(frame, (int(box_left), int(box_top)), (int(box_right), int(box_bottom)), color, 2)
            cv.putText(frame, label, (int(box_left), int(box_top) - 5), cv.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

        cv.imshow('Camera Stream', frame)
        if cv.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv.destroyAllWindows()
```

Hello @Arjun_Gupta ,

Third, you need to apply softmax to the four parts separately: divide [64, :] into 4 × [16, :] and apply softmax to each part.

Last, you missed this step:

> Then, each part does softmax and multiplies a constant matrix. The constant matrix is 0-15, shape(1, 16).

That is, the multiplication by the constant matrix.
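Putting these steps together, a vectorized NumPy sketch for the whole (1, 144, 8400) output. Assumptions: a square 640×640 input, rows 0-63 are the box bins grouped as 4 × 16, rows 64-143 are class logits, and predictions are ordered 80×80, 40×40, 20×20 as described earlier in the thread. `make_grid` and `decode_all` are hypothetical names, and the result still needs non-maximum suppression, for example with OpenCV's `cv.dnn.NMSBoxes` (which expects boxes as x, y, width, height).

```python
import numpy as np

def make_grid(input_size=640, strides=(8, 16, 32)):
    """Cell row i, column j and stride for every prediction, in 80x80, 40x40, 20x20 order."""
    rows, cols, strs = [], [], []
    for s in strides:
        n = input_size // s
        i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
        rows.append(i.ravel())  # row-major: j changes fastest
        cols.append(j.ravel())
        strs.append(np.full(n * n, s))
    return np.concatenate(rows), np.concatenate(cols), np.concatenate(strs)

def decode_all(output, input_size=640, conf_thresh=0.25):
    """output: flat or (1, 144, 8400) -> normalized boxes (K, 4), scores (K,), class ids (K,)."""
    pred = np.asarray(output, dtype=np.float32).reshape(144, -1)
    box_raw, cls_raw = pred[:64], pred[64:]
    # Split the 64 rows into 4 groups of 16 bins and softmax each group separately
    dist = box_raw.reshape(4, 16, -1)
    dist = np.exp(dist - dist.max(axis=1, keepdims=True))
    dist /= dist.sum(axis=1, keepdims=True)
    # Multiply by the constant 0..15 matrix: expected distance (in strides) for each side
    x1, y1, x2, y2 = np.einsum("k,skn->sn", np.arange(16, dtype=np.float32), dist)
    i, j, stride = make_grid(input_size)
    boxes = np.stack([(j + 0.5 - x1) * stride, (i + 0.5 - y1) * stride,
                      (j + 0.5 + x2) * stride, (i + 0.5 + y2) * stride], axis=1) / input_size
    probs = 1.0 / (1.0 + np.exp(-cls_raw))           # sigmoid on the 80 class rows
    class_ids = probs.argmax(axis=0)
    scores = probs[class_ids, np.arange(probs.shape[1])]
    keep = scores > conf_thresh
    return boxes[keep], scores[keep], class_ids[keep]
```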

The YOLOv8 demo was released last Friday. You can refer to it.

khadas/ksnn: Khadas Software Neural Network (github.com)

Docs

YOLOv8n KSNN Demo - 2 [Khadas Docs]

This demo's model outputs are [1, 144, 80, 80], [1, 144, 40, 40] and [1, 144, 20, 20]. You should split your output and reshape it into these shapes.

@Louis-Cheng-Liu

Regarding the YOLOv8 demo, I'm receiving this error:

```text
[22663] Failed to execute script pegasus
Traceback (most recent call last):
  File "pegasus.py", line 131, in <module>
  File "pegasus.py", line 112, in main
  File "acuitylib/app/importer/commands.py", line 245, in execute
  File "acuitylib/vsi_nn.py", line 171, in load_onnx
  File "acuitylib/app/importer/import_onnx.py", line 123, in run
  File "acuitylib/converter/onnx/convert_onnx.py", line 61, in __init__
  File "acuitylib/converter/onnx/convert_onnx.py", line 761, in _shape_inference
  File "acuitylib/onnx_ir/onnx_numpy_backend/shape_inference.py", line 65, in infer_shape
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_graph_engine.py", line 70, in smart_onnx_scanner
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_node.py", line 48, in calc_and_assign_smart_info
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_toolkit.py", line 636, in multi_direction_broadcast_shape
ValueError: operands could not be broadcast together with shapes (1,0,160,160) (1,16,160,160)
```

Hello @Arjun_Gupta ,

Is this error reported when converting the model? Could you provide your new model?

Another thing: you do not have to modify the model to match the demo's outputs. [1, 144, 8400] is okay, too. Split it and reshape it into [1, 144, 80, 80], [1, 144, 40, 40] and [1, 144, 20, 20]:

```python
output_1 = output[:, :, :6400].reshape(1, 144, 80, 80)
output_2 = output[:, :, 6400:8000].reshape(1, 144, 40, 40)
output_3 = output[:, :, 8000:].reshape(1, 144, 20, 20)
```

@Louis-Cheng-Liu

This issue occurs when I try to convert a stock yolov8n model:

https://github.com/ultralytics/assets/releases/download/v0.0.0/yolov8n.pt

ONNX format:

Hello @Arjun_Gupta ,

Have you done this step?

Your ONNX model does not have that structure removed.

I used your .pt model and converted it successfully.