@Louis-Cheng-Liu

Yes, I edited the file as required.

I also tried both models, one with the problematic structure and one without; both give the same error.

Hello @Arjun_Gupta,

Did you use the official code? Please provide your code.

@Louis-Cheng-Liu

No, I meant that both models give an error when I try to convert them with the Acuity toolkit.

The error was the same whether or not I removed the problematic structure from the model.

The error occurs when converting the yolov8n.onnx model.

Also, when I run the yolov8n example, it is really laggy.

Could you please verify whether this issue is on my side or a hardware limitation?

Hello @Arjun_Gupta,

I can reproduce your problem when I convert your ONNX model. But when I convert your .pt model to ONNX myself and then to .nb, it succeeds. So I think the problem occurs in your conversion code; please provide your training code.

Could you be more specific about ‘laggy’? Does inferring one picture take a long time, or does loading the model take a long time?

Hi @Louis-Cheng-Liu

I’m using the same code as in the guide to convert the model to ONNX:

from ultralytics import YOLO

# Load the trained weights
model = YOLO("./runs/detect/train/weights/best.pt")
# Export the model to ONNX format
results = model.export(format="onnx")

The model loading time is OK, but the inference time is 60-70 ms, which is about 15 FPS.
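The "60-70 ms ≈ 15 FPS" figure follows from FPS = 1000 / latency_ms. A minimal sketch, assuming inference is the only per-frame cost (pre/post-processing and camera capture are ignored); the helper name is hypothetical:

```python
def latency_ms_to_fps(latency_ms: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# The 60-70 ms range reported above
for ms in (60, 70):
    print(f"{ms} ms -> {latency_ms_to_fps(ms):.1f} FPS")
# 60 ms -> 16.7 FPS
# 70 ms -> 14.3 FPS
```

Both ends of the range bracket roughly 15 FPS; a 40 ms inference time works out to 25 FPS.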

Hello @Arjun_Gupta,

Which versions of PyTorch and ONNX are you using? My PyTorch version is 1.10.1 and ONNX is 1.14.0.

On my VIM3, the inference time is 50-70 ms.

Modify the opset version and try converting again:

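import onnx

# Load the exported model
model = onnx.load("./yolov8n.onnx")
# Overwrite the opset version declared by the first opset_import entry.
# Note: this only changes the declared version; the nodes are not converted.
model.opset_import[0].version = 12
# Save under a new name and convert this file instead
onnx.save(model, "./yolov8n_1.onnx")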

@Louis-Cheng-Liu

On the VM where I convert the models using KSNN:

torch==2.1.2
onnx==1.13.0

In the environment where I’m converting from PyTorch to ONNX:

torch==2.1.2
onnx==1.15.0
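Since the answer depends on which environment runs which step, it may help to print the versions from inside each environment rather than from memory. A minimal sketch using the standard library's importlib.metadata (Python 3.8+); the helper name is hypothetical, and "ultralytics" is included only because the export step above imports it:

```python
from importlib.metadata import PackageNotFoundError, version


def package_versions(names):
    """Return {package: installed version string, or None if not installed}."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for pkg, ver in package_versions(["torch", "onnx", "ultralytics"]).items():
        print(f"{pkg}=={ver}" if ver else f"{pkg}: not installed")
```

Running it once in the export environment and once on the conversion VM gives lists in the same `name==version` form as above.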

BTW, what OS/distribution are you using?

I’m using Ubuntu Server with LXDE, since with GNOME the inference was even slower.

Could it be a power issue?

I also tried:

import onnx

model = onnx.load("./yolov8n.onnx")
model.opset_import[0].version = 12
onnx.save(model, "./yolov8n_1.onnx")

and I got this error:

Traceback (most recent call last):
  File "pegasus.py", line 131, in <module>
  File "pegasus.py", line 112, in main
  File "acuitylib/app/importer/commands.py", line 245, in execute
  File "acuitylib/vsi_nn.py", line 171, in load_onnx
  File "acuitylib/app/importer/import_onnx.py", line 123, in run
  File "acuitylib/converter/onnx/convert_onnx.py", line 61, in __init__
  File "acuitylib/converter/onnx/convert_onnx.py", line 761, in _shape_inference
  File "acuitylib/onnx_ir/onnx_numpy_backend/shape_inference.py", line 65, in infer_shape
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_graph_engine.py", line 70, in smart_onnx_scanner
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_node.py", line 48, in calc_and_assign_smart_info
  File "acuitylib/onnx_ir/onnx_numpy_backend/smart_toolkit.py", line 1100, in reshape_shape
  File "<__array_function__ internals>", line 6, in reshape
  File "numpy/core/fromnumeric.py", line 301, in reshape
  File "numpy/core/fromnumeric.py", line 61, in _wrapfunc
ValueError: cannot reshape array of size 604800 into shape (1,4,16,8400)

Hello @Arjun_Gupta,

Did you use the official code?

Could you provide your complete code? 604800 is 72×8400, which should not appear in YOLOv8.
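The sizes in that error can be sanity-checked with some arithmetic. Assuming a 640×640 input, YOLOv8's three detection strides (8, 16, 32) and the default reg_max = 16 (none of which is confirmed in the thread for this model), there are 80² + 40² + 20² = 8400 anchor points, and the box branch has 4 × 16 = 64 channels, so the (1, 4, 16, 8400) reshape expects 537,600 elements, not 604,800. One possible reading, not a confirmed diagnosis: the full head output has 64 + nc channels, so 72 channels would match a custom model with a hypothetical nc = 8 classes, meaning the class channels were not split off before the reshape. The helper names below are hypothetical:

```python
def yolov8_anchor_count(imgsz=640, strides=(8, 16, 32)):
    """Number of anchor points summed over the detection scales (square input assumed)."""
    return sum((imgsz // s) ** 2 for s in strides)


def yolov8_head_channels(num_classes, reg_max=16):
    """Channels per anchor: 4 box sides x reg_max DFL bins, plus one score per class."""
    return 4 * reg_max + num_classes


anchors = yolov8_anchor_count()
print(anchors)                   # 8400 (6400 + 1600 + 400)
print(4 * 16 * anchors)          # 537600, what the (1, 4, 16, 8400) reshape expects
print(604800 // anchors)         # 72, channels in the array from the error message
print(yolov8_head_channels(80))  # 144, stock 80-class COCO model
print(yolov8_head_channels(8))   # 72, hypothetical 8-class custom model
```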

The kernel I use is 5.15.

https://dl.khadas.com/products/vim3/firmware/ubuntu/emmc/vim3-ubuntu-22.04-gnome-linux-5.15-fenix-1.6-231229-emmc.img.xz

Which one are you using? Is it much slower than mine?

After a reinstall, everything is working fine. The inference time is around 40 ms for me with a 30 W USB-PD supply and XFCE on Ubuntu Server.

Thanks a lot!

Hi @Louis-Cheng-Liu,

I am stuck on a problem and need your help. I have a model that I want to run on an older fenix release. To get the required Galcore driver and run the aml-npu library version the model needs, I had to upgrade the kernel of this older fenix release (release details are shown below).

I used:

linux-dtb-amlogic-4.9_1.0.9_arm64.deb

linux-image-amlogic-4.9_1.0.9_arm64.deb

linux-headers-amlogic-4.9_1.0.9_arm64.deb

These were for the kernel upgrade. After running sync and rebooting, my Wi-Fi no longer seems to work, and I don't know why.

cat /etc/fenix-release
# PLEASE DO NOT EDIT THIS FILE
BOARD=VIM3
VENDOR=Amlogic
VERSION=0.8.3
ARCH=arm64
INITRD_ARCH=arm64
INSTALL_TYPE=EMMC
IMAGE_RELEASE_VERSION=V0.8.3-20200302

swarmx@P100:/etc$ cat os-release
NAME="Ubuntu"
VERSION="18.04.4 LTS (Bionic Beaver)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 18.04.4 LTS"
VERSION_ID="18.04"
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
VERSION_CODENAME=bionic
UBUNTU_CODENAME=bionic
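With many devices deployed in the field, it may help to read these files programmatically, for example to flag devices still on a fenix release older than 1.0.9 before rolling out a kernel and aml-npu update. A minimal sketch, assuming the simple KEY=VALUE layout shown above (os-release values may be double-quoted) and purely numeric dotted versions; the helper names are hypothetical, and on a device you would pass the contents of /etc/fenix-release instead of the sample string:

```python
def parse_release_file(text):
    """Parse KEY=VALUE lines as in /etc/fenix-release or /etc/os-release.

    Blank lines and '#' comments are skipped; surrounding double quotes are stripped.
    """
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key.strip()] = value.strip().strip('"')
    return info


def version_tuple(v):
    """'0.8.3' -> (0, 8, 3); 'V0.8.3-20200302' -> (0, 8, 3). Numeric dotted versions only."""
    core = v.lstrip("vV").split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))


# Sample copied from the fenix-release output above
sample = """# PLEASE DO NOT EDIT THIS FILE
BOARD=VIM3
VENDOR=Amlogic
VERSION=0.8.3
IMAGE_RELEASE_VERSION=V0.8.3-20200302
"""

info = parse_release_file(sample)
print(info["BOARD"], info["VERSION"])                          # VIM3 0.8.3
print(version_tuple(info["VERSION"]) < version_tuple("1.0.9"))  # True
```

Comparing tuples rather than strings avoids lexical surprises such as "0.10.0" sorting before "0.8.3".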

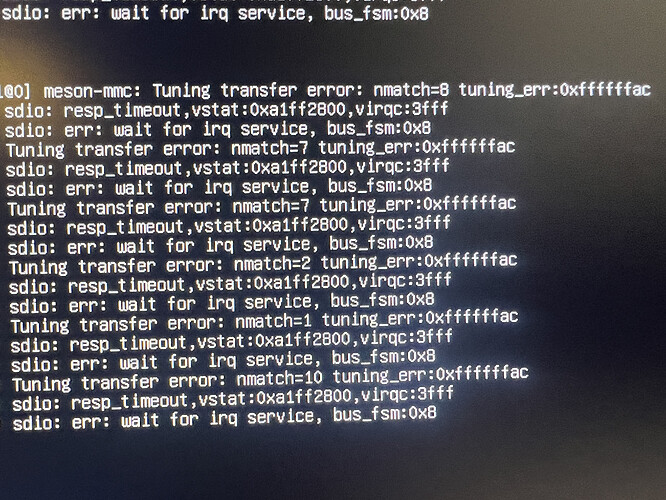

And these are some of the logs I see after rebooting with the upgraded kernel:

Hello @adiKhan12

This is a really old version; please upgrade to the latest version.

Hello @numbqq,

Yes, it is old, but the problem is that we have many devices deployed in the field, I mean in cities. Updating the kernel is much easier for us than updating the image, since many services are also running on the devices. So is there any way to fix this? For example, I really need to run aml-npu library version 6.4.4.3 or higher. Please let me know if the network problem can be fixed.

Hello @adiKhan12

Could you tell us which OS version you originally used, and the image file name? And how can this issue be reproduced?

Hello @numbqq,

Okay, so the OS version on our devices is V0.8.3-20200302. The kernel I used was built from fenix release 1.0.9: I checked out the 1.0.9 branch, built the kernel, got the .deb files, and installed them on V0.8.3-20200302.

So the original OS version where my model works properly is 1.0.9. Unfortunately, the devices in the field have 0.8.3, and the only feasible way is to update the kernel on the field devices and then update the aml-npu library; then the model works.

After the update, I ran sync and rebooted, and the issue appeared: no network connection. I just checked all the Khadas fenix releases and saw that version 1.0.7 updated the Wi-Fi drivers. Could that be the cause? Here: Release v1.0.7 · khadas/fenix · GitHub

Hello!

I’m getting the same errors as @Arjun_Gupta, but I don't know what's wrong. Can you help me?

Torch 2.2.0

Onnx 15.0.1

Yolov8 8.1.9

I’m doing everything as described in your instructions.