Hello @adiKhan12

@Louis-Cheng-Liu will help you then.

Hello @Louis-Cheng-Liu

I modified size-with-batch, and now I am getting this error.

Namespace(import='tflite', input_size_list='1,640,640,3#1,640,640,3', inputs='input.1 input.7', model='centertrack.tflite', output_data='centertrack.data', output_model='centertrack.json', outputs='509 513 517 521', size_with_batch='True,True', which='import')

I Start importing tflite...

I Specify the inputs: ['input.1', 'input.7']

I Specify the outputs: ['509', '513', '517', '521']

E length of size_with_batch is not equal to inputs

W ----------------Error(1),Warning(0)----------------

Traceback (most recent call last):

File "pegasus.py", line 131, in <module>

File "pegasus.py", line 112, in main

File "acuitylib/app/importer/commands.py", line 224, in execute

File "acuitylib/vsi_nn.py", line 91, in load_tflite

File "acuitylib/acuitylog.py", line 259, in e

acuitylib.acuityerror.AcuityError: ('length of size_with_batch is not equal to inputs', None)

[11493] Failed to execute script pegasus
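The error message suggests the importer counts the entries in --size-with-batch and compares that count with the number of inputs. A minimal pre-flight sketch of that idea follows. The helper `check_import_args` is hypothetical and is not part of Acuity, and the `#` separator for --size-with-batch is an assumption inferred from the later command in this thread, which got past this particular check.

```python
def check_import_args(inputs, input_size_list, size_with_batch, sep="#"):
    """Return a list of mismatches between per-input arguments.

    Assumption: shapes and batch flags are separated by `sep`, while
    input names are separated by whitespace, as in the commands above.
    """
    names = inputs.split()
    shapes = input_size_list.split(sep)
    flags = size_with_batch.split(sep)
    problems = []
    if len(shapes) != len(names):
        problems.append(
            f"--input-size-list has {len(shapes)} entries for {len(names)} inputs")
    if len(flags) != len(names):
        problems.append(
            f"--size-with-batch has {len(flags)} entries for {len(names)} inputs")
    return problems


# 'True,True' is a single '#'-separated entry, so it is flagged.
comma_case = check_import_args(
    "input.1 input.7", "1,640,640,3#1,640,640,3", "True,True")
# 'True#True' gives one flag per input.
hash_case = check_import_args(
    "input.1 input.7", "1,640,640,3#1,640,640,3", "True#True")
print(comma_case)
print(hash_case)
```

If the separator assumption holds, writing the flag as 'True#True' should get past this check, although the import may still fail later for other reasons.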

Hello @adiKhan12 ,

Could you provide your model ?

Hello @Louis-Cheng-Liu

Yes here is the link to the model centertrack.tflite - Google Drive.

Hello @adiKhan12 ,

I have passed your problem on to our engineer. He replied that he needs time to find the cause and fix it, maybe next week.

Hello @Louis-Cheng-Liu

Okay, please let me know as soon as possible; I really need to do this conversion. One more thing I want to share: below is the output of converting the same model with an NCHW input shape. The error is almost the same.

$pegasus import tflite \
    --model ${NAME}.tflite \
    --inputs 'input.1 input.7' \
    --input-size-list '1,3,640,640#1,3,640,640' \
    --size-with-batch 'True#True' \
    --output-model ${NAME}.json \
    --output-data ${NAME}.data \
    --outputs '509 513 517 521'

OUTPUT:

D Convert layer concatenation

D Convert layer strided_slice

D Convert layer add

D Convert layer conv_2d

D Convert layer add

D Convert layer conv_2d

D Convert layer conv_2d

D Convert layer conv_2d

D Convert layer dequantize

D Convert layer conv_2d

D Convert layer conv_2d

D Convert layer dequantize

D Convert layer conv_2d

D Convert layer conv_2d

D Convert layer dequantize

D Convert layer conv_2d

D Convert layer conv_2d

D Convert layer dequantize

D shape:[1, 3, 640, 640],channel:640

Traceback (most recent call last):

File "pegasus.py", line 131, in <module>

File "pegasus.py", line 112, in main

File "acuitylib/app/importer/commands.py", line 224, in execute

File "acuitylib/vsi_nn.py", line 122, in load_tflite

File "acuitylib/vsi_nn.py", line 709, in generate_inputmeta

File "acuitylib/app/console/inputmeta.py", line 119, in generate_inputmeta

IndexError: list index out of range

[14638] Failed to execute script pegasus

Hello @Louis-Cheng-Liu

I tried converting an MNIST model that I quantized and converted to tflite. The Acuity toolkit conversion passed with no errors. Please let me know as soon as you have any information about what the problem is with my model.

Hello @adiKhan12 ,

We think the problem is this structure.

W Tensor b'model_13/tf.nn.conv2d_transpose_149/conv2d_transpose' has no buffer, init to zeros.

The conv2d_transpose operator read in has no data.

Was this model quantized from an original model? How was it quantized? Could you provide the model without quantization? That would help us deal with it.

The original model was not quantized. I converted the original model in ONNX format using the Acuity toolkit and ran it successfully. But I need a quantized model now, so I used the onnx2tf tool to quantize my model and export it to tflite. I also used Acuitylite to quantize my model and export it to tflite, but I got the same error. Here is the original model; please check it, and let me know if you also need the model I quantized and exported with Acuitylite. NQ_nas_model.onnx - Google Drive

Hello @adiKhan12 ,

We suggest quantizing the model with the Acuity toolkit. The model has two inputs, so dataset.txt should be formatted like this.

./data/space_shuttle_224.jpg ./data/space_shuttle_224.jpg

./data/space_shuttle_224.jpg ./data/space_shuttle_224.jpg
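For a real calibration set, the two-column file can be generated rather than typed by hand. The sketch below assumes one line per sample and one space-separated column per model input, as in the example above. The helper names and image paths are hypothetical.

```python
from pathlib import Path


def build_dataset_lines(first_input_images, second_input_images):
    """Pair calibration images: one line per sample, one column per model input."""
    if len(first_input_images) != len(second_input_images):
        raise ValueError("each model input needs the same number of samples")
    return [f"{a} {b}" for a, b in zip(first_input_images, second_input_images)]


def write_dataset(path, lines):
    """Write the paired lines to a dataset file, one sample per line."""
    Path(path).write_text("\n".join(lines) + "\n")


# Hypothetical file names; replace them with images from the real use case.
lines = build_dataset_lines(
    ["./data/frame_0001.jpg", "./data/frame_0002.jpg"],
    ["./data/prev_0001.jpg", "./data/prev_0002.jpg"],
)
print("\n".join(lines))
# write_dataset("dataset.txt", lines)
```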

With this I converted the model successfully. Here are my parameters.

# 0_import_model.sh
# ONNX
$pegasus import onnx \
    --model ./model/${NAME}.onnx \
    --output-model ${NAME}.json \
    --output-data ${NAME}.data

# generate inputmeta --source-file dataset.txt
$pegasus generate inputmeta \
    --model ${NAME}.json \
    --input-meta-output ${NAME}_inputmeta.yml \
    --channel-mean-value "0 0 0 0.0039215" \
    --source-file dataset.txt

# 1_quantize_model.sh
$pegasus quantize \
    --quantizer dynamic_fixed_point \
    --qtype int8 \
    --rebuild \
    --with-input-meta ${NAME}_inputmeta.yml \
    --model ${NAME}.json \
    --model-data ${NAME}.data

# 2_export_case_code.sh
$pegasus export ovxlib \
    --model ${NAME}.json \
    --model-data ${NAME}.data \
    --model-quantize ${NAME}.quantize \
    --with-input-meta ${NAME}_inputmeta.yml \
    --dtype quantized \
    --optimize VIPNANOQI_PID0X88 \
    --viv-sdk ${ACUITY_PATH}vcmdtools \
    --pack-nbg-unify

Thank you for such a detailed explanation. I have already implemented this method and run it on Khadas, even creating a complete application around it. However, the issue I am facing is with the quantization of the ONNX model using Acuity; the results are not satisfactory, falling short of even 50% accuracy. This is why I am keen to convert an already quantized model. If successful, I could also apply this to a quantization-aware trained model. Additionally, if you could assist me with improving the low accuracy of the model quantized using the Acuity toolkit, that would be great. Please let me know your thoughts.

Hello @adiKhan12 ,

1. How many quantization pictures do you use? The best number is about 200, and the pictures should come from actual usage scenarios. Your model has two inputs, so you should use about 400 pictures, 200 for each input.

2. If point 1 is fine, you can use inference.sh to run your quantized model and compare the result with the non-quantized model, to check whether quantization is what causes the accuracy drop.

# After running 0_import_model.sh
# snapshots inference, float32
$pegasus inference \
    --dtype float32 \
    --model ${NAME}.json \
    --model-data ${NAME}.data \
    --with-input-meta ${NAME}_inputmeta.yml

# After running 1_quantize_model.sh
# snapshots inference, quantized
$pegasus inference \
    --dtype quantized \
    --model ${NAME}.json \
    --model-data ${NAME}.data \
    --with-input-meta ${NAME}_inputmeta.yml

# int16
# 1_quantize_model.sh
$pegasus quantize \
    --quantizer dynamic_fixed_point \
    --qtype int16 \
    --rebuild \
    --with-input-meta ${NAME}_inputmeta.yml \
    --model ${NAME}.json \
    --model-data ${NAME}.data

# No quantization
# After running 0_import_model.sh, run 2_export_case_code.sh
$pegasus export ovxlib \
    --model ${NAME}.json \
    --model-data ${NAME}.data \
    --with-input-meta ${NAME}_inputmeta.yml \
    --optimize VIPNANOQI_PID0X88 \
    --viv-sdk ${ACUITY_PATH}vcmdtools \
    --pack-nbg-unify

For more details, you can refer to aml_npu_sdk/docs/en/Model Transcoding and Running User Guide (1.0).pdf.

Hi @Louis-Cheng-Liu

Regarding your first question: yes, I am providing more than 500 images for quantization. I ran inference.sh and compared the non-quantized and quantized results as you said; you can see the results for yourself below. The problem is that when I run this model on the Khadas VIM3 with the same input images, I get about 50% accuracy, and the bounding boxes or detection results are smaller than the PC results. That is why I think converting an already quantization-aware-trained model might fix it. Do you have any idea what I should do next?

NON QUANTIZED MODEL RESULTS:

########################################################################################################

I Iter(0), top(5), tensor(@attach_Conv_Conv_169/out0_0:out0) :

I 116043: -2.5413317680358887

I 45438: -2.594998836517334

I 45598: -2.6330647468566895

I 45278: -2.6936354637145996

I 45758: -2.7059412002563477

I Iter(0), top(5), tensor(@attach_Conv_Conv_172/out0_1:out0) :

I 28143: 1.198882818222046

I 28142: 1.1950265169143677

I 27663: 1.191260576248169

I 28141: 1.191146731376648

I 27665: 1.1905944347381592

I Iter(0), top(5), tensor(@attach_Conv_Conv_178/out0_2:out0) :

I 47856: 6.284069061279297

I 48016: 6.236096382141113

I 47855: 6.183168411254883

I 47696: 6.1698899269104

I 48176: 6.149331569671631

I Iter(0), top(5), tensor(@attach_Conv_Conv_181/out0_3:out0) :

I 31953: 72.93936157226562

I 31952: 72.455322265625

I 32113: 72.12811279296875

I 33392: 72.07128143310547

I 33395: 71.88139343261719

I Check const pool...

I End inference...

QUANTIZED MODEL RESULTS:

########################################################################################################

I Iter(0), top(5), tensor(@attach_Conv_Conv_169/out0_0:out0) :

I 116043: -2.25

I 25438: -2.75

I 25278: -2.75

I 45278: -3.0

I 45598: -3.0

I Iter(0), top(5), tensor(@attach_Conv_Conv_172/out0_1:out0) :

I 27663: 1.1875

I 27664: 1.1875

I 27667: 1.171875

I 27665: 1.15625

I 27666: 1.140625

I Iter(0), top(5), tensor(@attach_Conv_Conv_178/out0_2:out0) :

I 48337: 6.4375

I 48336: 6.4375

I 48496: 6.375

I 48177: 6.375

I 48495: 6.3125

I Iter(0), top(5), tensor(@attach_Conv_Conv_181/out0_3:out0) :

I 33392: 76.0

I 32113: 76.0

I 32590: 76.0

I 32591: 76.0

I 32271: 75.0

I Check const pool...

I End inference...

I ----------------Er
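The quantized values in the log above sit on coarse grids (steps of 0.25, 0.015625, 0.0625 and 1.0 for the four outputs), which is what int8 dynamic fixed point would produce. The sketch below shows the general idea: a value is stored as an 8-bit integer with a per-tensor fractional length `fl`, so the step is 2^-fl. Choosing `fl` from a single maximum value is an illustrative rule of mine; the real tool derives it from calibration data, and the function names are hypothetical.

```python
import numpy as np


def choose_fl(max_abs, bits=8):
    """Largest fractional length such that max_abs still fits in `bits` bits.

    Illustrative rule only; the toolkit picks this from calibration statistics.
    """
    qmax = 2 ** (bits - 1) - 1
    return int(np.floor(np.log2(qmax / max_abs)))


def dfp_quantize(x, fl, bits=8):
    """Round to the 2^-fl grid, clip to the integer range, and dequantize."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    q = np.clip(np.round(np.asarray(x, dtype=np.float64) * 2.0 ** fl), qmin, qmax)
    return q / 2.0 ** fl


# Top float32 values of two outputs, taken from the non-quantized log above.
fl_small = choose_fl(6.284069061279297)    # range up to about 6.3
fl_large = choose_fl(72.93936157226562)    # range up to about 73
print(fl_small, float(dfp_quantize(6.284069061279297, fl_small)))
print(fl_large, float(dfp_quantize(72.93936157226562, fl_large)))
```

Under this rule the output with values near 73 gets a step of 1.0, which fits the 75.0 and 76.0 readings in the quantized log. The sketch only models the rounding of the final tensor, not the error accumulated through earlier layers.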

Hello @adiKhan12 ,

After you run inference.sh, it generates tensor files for the inputs and outputs. In the picture below, the red boxes are the model outputs and the green box is the input.

You should compare the outputs of the non-quantized and quantized models. You can refer to section 4.2 of NN Tool FAQ (0.5).pdf.

Hi @Louis-Cheng-Liu

Okay, I did as you said. Below is the comparison of the float32 and quantized tensors.

tensors : out0_0_out0_1_6_160_160 before quantize vs after quantize

euclidean_distance 195.44814

cos_similarity 0.998509

###############################################

tensors: out0_1_out0_1_2_160_160 before quantize vs after quantize

euclidean_distance 9.424252

cos_similarity 0.996469

###############################################

tensors: out0_2_out0_1_2_160_160 before quantize vs after quantize

euclidean_distance 77.51932

cos_similarity 0.967092

###############################################

tensors: out0_3_out0_1_2_160_160 before quantize vs after quantize

euclidean_distance 493.8319

cos_similarity 0.99271
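For anyone who wants to reproduce this kind of comparison, here is a minimal sketch of the two metrics. It assumes each dumped tensor is a plain-text file with one float per line, which is an assumption about the dump format. The function names and the commented file names are hypothetical.

```python
import numpy as np


def load_tensor(path):
    """Load a dumped tensor. Assumption: plain text, one float per line."""
    return np.loadtxt(path, dtype=np.float64)


def compare_tensors(a, b):
    """Return (euclidean_distance, cos_similarity) of two same-sized tensors."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError("tensors must have the same number of elements")
    euclidean = float(np.linalg.norm(a - b))
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    cosine = float(np.dot(a, b) / denom) if denom else 0.0
    return euclidean, cosine


# Tiny in-memory check; with real dumps, pass load_tensor(...) results instead,
# e.g. compare_tensors(load_tensor("float32_out.tensor"), load_tensor("quant_out.tensor")).
same_dist, same_cos = compare_tensors([1.0, 2.0, 2.0], [1.0, 2.0, 2.0])
orth_dist, orth_cos = compare_tensors([1.0, 0.0], [0.0, 1.0])
print(same_dist, same_cos)
print(orth_dist, orth_cos)
```

Cosine similarity ignores overall scale, so a large Euclidean distance with a similarity near 1.0 usually points to a magnitude offset rather than a changed pattern.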

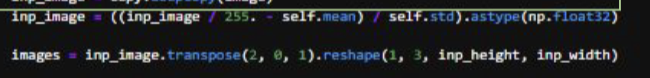

Hi @Louis-Cheng-Liu

I have a question about the mean and scale values used during quantization, for example 128 128 128 0.007 for normalizing data to the range -1 to 1. Do we need to apply these values in our own preprocessing pipeline when running the model? For example, in my preprocessing (C code) I apply the same normalization to the image data that I did on the PC, as shown in the image below, and then I copy the preprocessed data to the graph's input tensor.

I think I am missing something in preprocessing, and that may be why my results are not good. Would it be okay if I share my preprocessing C code with you so you can check it?
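As a reference point for the question above, this is a minimal sketch of the normalization that mean and scale values of this kind usually describe: (pixel - mean) * scale per channel. The scale of 1/128 = 0.0078125 is my assumption for the abbreviated 0.007 in the post. Whether the exported case code already applies this step, so that applying it again in your own code would normalize twice, is not settled in this thread and is worth checking.

```python
import numpy as np


def normalize(image_u8, mean=(128.0, 128.0, 128.0), scale=1.0 / 128.0):
    """Map uint8 pixels to roughly [-1, 1] with (pixel - mean) * scale.

    Assumption: channels are on the last axis (HWC layout).
    """
    img = image_u8.astype(np.float32)
    return (img - np.asarray(mean, dtype=np.float32)) * np.float32(scale)


# One pixel with channel values 0, 128 and 255.
pixels = np.array([[[0, 128, 255]]], dtype=np.uint8)
normalized = normalize(pixels)
print(normalized)
```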

Hi @Louis-Cheng-Liu

Thank you for the support. Today I fixed the problem and the model works great now. The problem was in my preprocessing pipeline.

Hello @adiKhan12 ,

I am very sorry; we were on vacation this week and I did not notice your reply until now.

From the comparison results, only out0_2_out0_1_2_160_160 (0.96) has a small loss of precision, but I do not think that can cause a 50% accuracy loss. The others (0.99) are almost the same as the non-quantized model.

Finally, congratulations on solving the problem.

This code keeps giving me garbage values; please help me fix it. The class ID is far larger than intended. For some reason the conversion tool gives the output tensor as 84,8400, which does not make sense to me since there are only 80 classes.

Class: 70, Bounding Box: (x=42, y=50, w=52, h=58), Confidence: 65.59947204589844

70

Class: 116, Bounding Box: (x=73, y=80, w=90, h=100), Confidence: 111.01449584960938

116

Class: 169, Bounding Box: (x=126, y=136, w=143, h=153), Confidence: 161.4756317138672

169

Class: 211, Bounding Box: (x=176, y=181, w=194, h=201), Confidence: 209.41371154785156

211

Class: 272, Bounding Box: (x=219, y=229, w=242, h=249), Confidence: 262.39788818359375

272

Class: 317, Bounding Box: (x=275, y=280, w=295, h=300), Confidence: 307.81292724609375

317

Class: 360, Bounding Box: (x=317, y=327, w=338, h=345), Confidence: 353.2279357910156

360

Class: 413, Bounding Box: (x=370, y=380, w=391, h=401), Confidence: 403.6890869140625

413

Class: 469, Bounding Box: (x=426, y=439, w=449, h=461), Confidence: 469.2885437011719

469

Class: 494, Bounding Box: (x=471, y=474, w=479, h=486), Confidence: 491.9960632324219

494

Class: 580, Bounding Box: (x=504, y=527, w=542, h=557), Confidence: 565.1647338867188

580

Class: 590, Bounding Box: (x=587, y=587, w=590, h=590), Confidence: 587.8721923828125

590

Class: 20, Bounding Box: (x=595, y=620, w=630, h=638), Confidence: 5.04611349105835

20

Class: 58, Bounding Box: (x=22, y=32, w=40, h=47), Confidence: 52.98419189453125

58

Class: 98, Bounding Box: (x=63, y=68, w=73, h=80), Confidence: 90.83003997802734

98

Class: 153, Bounding Box: (x=108, y=116, w=126, h=136), Confidence: 146.33729553222656

153

Class: 201, Bounding Box: (x=161, y=166, w=176, h=184), Confidence: 191.7523193359375

201

Class: 252, Bounding Box: (x=209, y=211, w=219, h=229), Confidence: 239.6903839111328

252

Class: 302, Bounding Box: (x=262, y=272, w=275, h=280), Confidence: 297.720703125

302

Class: 345, Bounding Box: (x=307, y=315, w=320, h=327), Confidence: 338.089599609375

345

Class: 401, Bounding Box: (x=350, y=363, w=368, h=380), Confidence: 391.07379150390625

401

Class: 461, Bounding Box: (x=406, y=413, w=426, h=441), Confidence: 449.1040954589844

461

Class: 484, Bounding Box: (x=469, y=469, w=474, h=474), Confidence: 479.3807678222656

484

Class: 557, Bounding Box: (x=489, y=494, w=504, h=519), Confidence: 542.4572143554688

557

Class: 590, Bounding Box: (x=565, y=580, w=582, h=587), Confidence: 590.395263671875

590

Class: 638, Bounding Box: (x=590, y=592, w=597, h=618), Confidence: 630.76416015625

638

Class: 47, Bounding Box: (x=5, y=20, w=25, h=32), Confidence: 40.3689079284668

47

Class: 83, Bounding Box: (x=52, y=58, w=65, h=68), Confidence: 75.69170379638672

import numpy as np
import os
import argparse
import sys
from ksnn.api import KSNN
from ksnn.types import *
import cv2 as cv
import time

classes = [
    "person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck",
    "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench",
    "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra",
    "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
    "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove",
    "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup",
    "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange",
    "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa",
    "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse",
    "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink",
    "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier",
    "toothbrush"
]

def draw_boxes(frame, class_id, confidence, x, y, w, h, color):
    cv.rectangle(frame, (x,y), (x+w,y+h), color, 2)
    #label = str(class_id)
    #cv.putText(frame, label, (x,y), cv.FONT_HERSHEY_SIMPLEX, 0.5, color, 2)

def softmax(x):
    return np.exp(x - np.max(x)) / np.sum(np.exp(x - np.max(x)))

if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--library", help="Path to C static library file")
    parser.add_argument("--model", help="Path to nbg file")
    parser.add_argument("--level", help="Information printer level: 0/1/2")
    args = parser.parse_args()

    if args.model :
        if os.path.exists(args.model) == False:
            sys.exit('Model \'{}\' not exist'.format(args.model))
        model = args.model
    else :
        sys.exit("NBG file not found !!! Please use format: --model")

    if args.library :
        if os.path.exists(args.library) == False:
            sys.exit('C static library \'{}\' not exist'.format(args.library))
        library = args.library
    else :
        sys.exit("C static library not found !!! Please use format: --library")

    if args.level == '1' or args.level == '2' :
        level = int(args.level)
    else :
        level = 0

    resnet50 = KSNN('VIM3')
    print(' |--- KSNN Version: {} +---| '.format(resnet50.get_nn_version()))
    print('Start init neural network ...')
    resnet50.nn_init(library=library, model=model, level=level)
    print('Done.')

    cap = cv.VideoCapture(0)
    assert cap.isOpened()
    color = (0, 255, 0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        frame = cv.resize(frame, (640, 480))
        outputs = resnet50.nn_inference(frame[:, :, ::-1], platform='ONNX')
        output = outputs[0]

        num_predictions = int(len(output) / 6)
        predictions = output.reshape((num_predictions, 6))

        for pred in predictions:
            x, y, w, h, confidence, class_id = pred
            x, y, w, h = int(x), int(y), int(w), int(h)
            confidence = float(confidence)
            class_id = int(class_id)
            label = class_id
            print(f"Class: {label}, Bounding Box: (x={x}, y={y}, w={w}, h={h}), Confidence: {confidence}")
            color = (0, 255, 0) # Green color for bounding box
            draw_boxes(frame, class_id, confidence, x, y, w, h, color)

        cv.imshow('Camera Stream', frame)
        if cv.waitKey(1) & 0xFF == ord('q'):
            break

    cap.release()
    cv.destroyAllWindows()
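An 84 x 8400 output is what a YOLOv8-style detection head would produce: 4 box values plus 80 class scores for each of 8400 candidate boxes. Whether this model is YOLOv8 is an assumption, since the post does not say. If it is, reading the flat output six values at a time, as the loop above does, would mix unrelated numbers and could explain the garbage class IDs. The sketch below decodes such a tensor under the following assumptions: the flat output is laid out row-major as (84, 8400), the boxes are (cx, cy, w, h), and the class scores are already probabilities. The function name and threshold are hypothetical, and non-maximum suppression is still needed afterwards.

```python
import numpy as np


def decode_yolov8(output, num_classes=80, conf_threshold=0.25):
    """Decode a flat (4 + num_classes) x N tensor into boxes, scores, class IDs.

    Assumptions: row-major (84, N) layout, boxes as (cx, cy, w, h),
    class scores already activated. No NMS is applied here.
    """
    preds = np.asarray(output, dtype=np.float32).reshape(4 + num_classes, -1).T
    boxes, scores = preds[:, :4], preds[:, 4:]
    class_ids = scores.argmax(axis=1)                        # best class per candidate
    confidences = scores[np.arange(len(scores)), class_ids]
    keep = confidences >= conf_threshold                     # drop weak candidates
    cx, cy, w, h = boxes[keep].T
    xyxy = np.stack([cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2], axis=1)
    return xyxy, confidences[keep], class_ids[keep]


# Synthetic tensor with a single confident candidate, for illustration only.
fake = np.zeros((84, 8400), dtype=np.float32)
fake[:4, 10] = [320.0, 240.0, 100.0, 50.0]   # cx, cy, w, h
fake[4 + 16, 10] = 0.9                        # score for class index 16
boxes, confs, ids = decode_yolov8(fake.ravel())
print(boxes, confs, ids)
```

With the real model, `outputs[0]` from `nn_inference` would take the place of `fake.ravel()`, and `classes[ids[i]]` would give the label, provided the layout assumption holds for the converted model.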