Hi, I trained a custom model with YOLOv8n and followed all the instructions provided in the documentation.

I used the recommended versions: torch==1.10.1 and ultralytics==8.0.86.

I also modified the ultralytics/ultralytics/nn/modules.py file as mentioned in the guide, and exported the model to ONNX format as instructed.

Then, I converted the model to .nb format using the ./convert tool. Here’s an example of the command I used:

```shell
./convert --model-name pan \
          --platform onnx \
          --model best.onnx \
          --mean-values '0 0 0 0.00392156' \
          --quantized-dtype asymmetric_affine \
          --source-files ./data/dataset1/dataset1.txt \
          --batch-size 1 \
          --iterations 375 \
          --kboard VIM3 \
          --print-level 1
```
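The `--mean-values` argument above appears to encode three per-channel means followed by one scale factor, so each input pixel becomes (pixel - mean) * scale. With means of 0 and a scale of 0.00392156 (about 1/255), that reproduces the divide-by-255 normalization YOLOv8 applies to its inputs. A minimal sketch of the arithmetic, assuming that interpretation of the flag (the helper names are hypothetical):

```python
# Sketch of the arithmetic assumed for --mean-values '0 0 0 0.00392156':
# three per-channel means followed by a single scale factor (assumption).
MEANS = (0.0, 0.0, 0.0)
SCALE = 0.00392156  # roughly 1 / 255


def normalize_pixel(rgb, means=MEANS, scale=SCALE):
    """Apply (value - mean) * scale to each channel of one pixel."""
    return tuple((value - mean) * scale for value, mean in zip(rgb, means))


def divide_by_255(rgb):
    """YOLOv8-style input normalization: map 0..255 to 0..1."""
    return tuple(value / 255.0 for value in rgb)


if __name__ == "__main__":
    pixel = (255, 128, 0)
    print(normalize_pixel(pixel))
    print(divide_by_255(pixel))
```

If the two printed tuples agree to about five decimal places, the conversion-time normalization matches what the model saw during training.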

I created a dataset with more than 300 images and tested both int8 and int16 quantization.

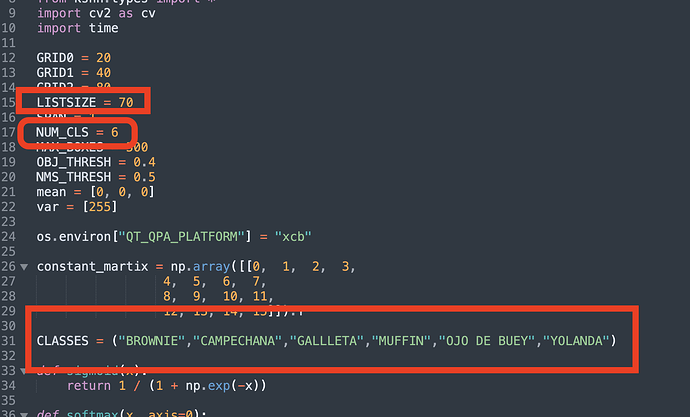

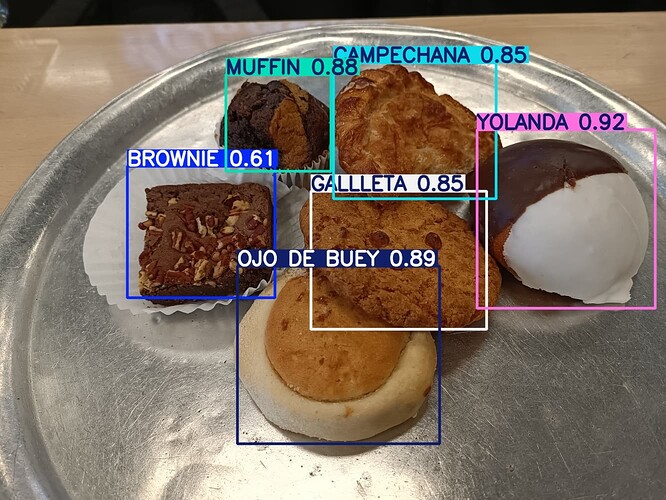

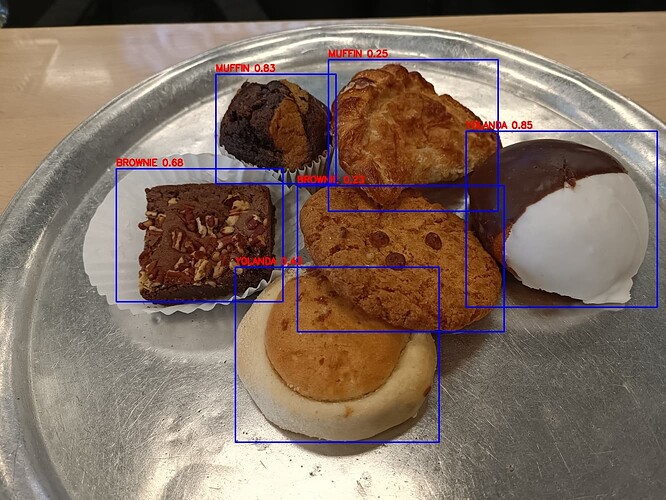

When I run the model with the yolov8n-cap.py example (modified for the 6 classes of my custom model), it loads without any issues. However, its detection accuracy is significantly worse than that of the original best.pt model.

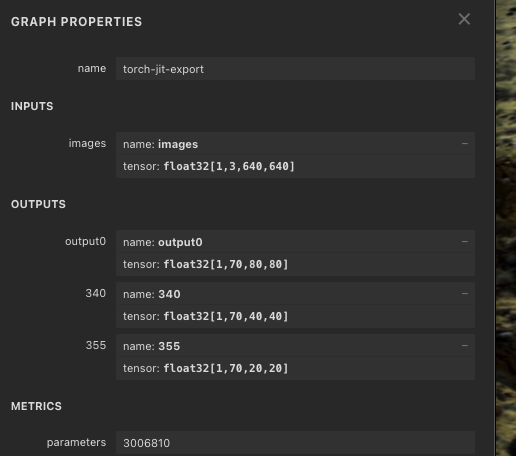

This is a screenshot of the ONNX model in Netron (netron.app):

What could I be missing?

Hello @Abraham_Salazar,

Could you provide your NB model and ONNX model?

Also, have you modified LISTSIZE in the code?
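In YOLOv8's detection head, each grid cell outputs 4 x reg_max box-distribution values (reg_max = 16 by default) plus one score per class, so a 6-class model has 70 values per cell instead of the 144 of an 80-class COCO model. A minimal sketch, assuming the example script defines LISTSIZE as this per-cell length (NUM_CLS + 64); the helper name is hypothetical, so check the script itself to confirm:

```python
# Hypothetical sketch: per-cell output length of a YOLOv8 detection head.
REG_MAX = 16  # DFL bins per box side (YOLOv8 default)


def yolov8_listsize(num_cls, reg_max=REG_MAX):
    """4 box sides x reg_max distribution bins, plus one score per class."""
    return 4 * reg_max + num_cls


NUM_CLS = 6  # classes in this custom model (nc in data.yaml)
LISTSIZE = yolov8_listsize(NUM_CLS)

if __name__ == "__main__":
    print(LISTSIZE)  # 70
```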

Hi @Louis-Cheng-Liu

Yes, I modified these parameters.

Here are my models in best.pt, ONNX, and NB formats:

Thanks a lot!

Hello @Abraham_Salazar,

Judging from your result picture, the reduced confidence is normal: the model has been quantized, which reduces its accuracy. If you think the accuracy is too low, you can try quantizing the model to int8 or int16.
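To illustrate why quantization costs accuracy, here is a generic sketch of asymmetric affine quantization, the scheme named by `--quantized-dtype asymmetric_affine`. It is not the Khadas toolkit's implementation, and the helper names are hypothetical. Each float is mapped to an integer through a scale and a zero point and then mapped back; widening the integer range (shown here with the same affine scheme at 16 bits, for simplicity) shrinks the round-trip error.

```python
# Generic sketch of asymmetric affine quantization (not the Khadas toolkit's code).


def asymmetric_affine_roundtrip(values, num_bits=8):
    """Quantize floats to unsigned num_bits integers, then dequantize them."""
    qmin, qmax = 0, 2 ** num_bits - 1
    lo = min(min(values), 0.0)  # the range must include 0.0
    hi = max(max(values), 0.0)
    if hi == lo:
        return [float(v) for v in values]  # all zeros: nothing to quantize
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    restored = []
    for v in values:
        q = min(max(round(v / scale) + zero_point, qmin), qmax)  # clamp to range
        restored.append((q - zero_point) * scale)
    return restored


def max_abs_error(values, num_bits):
    """Worst-case round-trip error for the given bit width."""
    restored = asymmetric_affine_roundtrip(values, num_bits)
    return max(abs(a - b) for a, b in zip(values, restored))


if __name__ == "__main__":
    activations = [-1.5, -0.2, 0.0, 0.37, 0.9, 2.75]
    print("8-bit error: ", max_abs_error(activations, 8))
    print("16-bit error:", max_abs_error(activations, 16))
```

The error per value is bounded by half a quantization step, so a wide activation range (for example, raw box coordinates sharing a tensor with 0..1 class scores) leaves little resolution for the small values.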

Hello @Louis-Cheng-Liu

What other alternatives do I have? I've already tried both int8 and int16, and I estimate the recognition loss is over 90%.
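One way to put a number on the "over 90%" loss is to treat the best.pt detections on a test image as the reference and count how many of them the NB (or ONNX) model reproduces. A minimal sketch, assuming each detection has already been extracted as a hypothetical (x1, y1, x2, y2, class_id) tuple; the helper names, the greedy matching, and the 0.5 IoU threshold are illustrative choices:

```python
# Hypothetical helpers: detections are (x1, y1, x2, y2, class_id) tuples.


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2, ...)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_vs_reference(reference, candidate, iou_thr=0.5):
    """Fraction of reference detections matched by a same-class candidate box."""
    if not reference:
        return 1.0
    unmatched = list(candidate)
    hits = 0
    for ref in reference:
        best, best_iou = None, iou_thr
        for cand in unmatched:
            if cand[4] == ref[4]:
                score = iou(ref, cand)
                if score >= best_iou:
                    best, best_iou = cand, score
        if best is not None:
            unmatched.remove(best)  # each candidate can match only one reference box
            hits += 1
    return hits / len(reference)


if __name__ == "__main__":
    pt_boxes = [(0, 0, 10, 10, 0), (20, 20, 30, 30, 1)]
    nb_boxes = [(1, 1, 10, 10, 0)]
    print(recall_vs_reference(pt_boxes, nb_boxes))  # 0.5
```

Averaging this over a handful of test images gives a more concrete figure to share than a visual comparison.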

Hello @Abraham_Salazar,

Could you provide the code you used to produce the inference result in this picture?

I ran the ONNX model myself, and this is the result I got.

Hello @Louis-Cheng-Liu

To run inference using my trained best.pt model, I used the following command:

```shell
yolo task=detect mode=predict model=best.pt source=test.jpg
```

To convert the best.pt model to ONNX format, I used this Python code:

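```python
from ultralytics import YOLO

# Load the trained weights and export them to ONNX (best.onnx is written next to best.pt)
model = YOLO("./best.pt")
results = model.export(format="onnx")
```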

And I trained my model with this command:

```shell
yolo detect train model=yolov8n.pt data=data.yaml epochs=90 imgsz=640 batch=8 workers=3 device=cpu
```

data.yaml contains:

```yaml
train: ./train/images
val: ./valid/images
test: ./test/images

nc: 6
names: ['BROWNIE', 'CAMPECHANA', 'GALLLETA', 'MUFFIN', 'OJO DE BUEY', 'YOLANDA']
```
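Since the inference script was edited by hand for 6 classes, it may be worth confirming that its class list matches data.yaml in both length and order, because a shifted list mislabels every detection. A minimal sketch; `SCRIPT_CLASSES` is a hypothetical stand-in for whatever class tuple the modified yolov8n-cap.py defines, and `check_classes` is likewise illustrative:

```python
# Values copied from data.yaml above.
DATA_YAML = {
    "nc": 6,
    "names": ["BROWNIE", "CAMPECHANA", "GALLLETA", "MUFFIN", "OJO DE BUEY", "YOLANDA"],
}

# Hypothetical stand-in for the class tuple edited in yolov8n-cap.py.
SCRIPT_CLASSES = ("BROWNIE", "CAMPECHANA", "GALLLETA", "MUFFIN", "OJO DE BUEY", "YOLANDA")


def check_classes(data, script_classes):
    """Return a list of mismatches between data.yaml and the script's class list."""
    problems = []
    if data["nc"] != len(data["names"]):
        problems.append(f"nc={data['nc']} but {len(data['names'])} names listed")
    if list(script_classes) != list(data["names"]):
        problems.append("script class list differs from data.yaml (order matters)")
    return problems


if __name__ == "__main__":
    print(check_classes(DATA_YAML, SCRIPT_CLASSES) or "class lists match")
```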

Hello @Abraham_Salazar,

We have reproduced your problem: the detection results of the exported ONNX model are also worse than those of the .pt model. This is the first time we have encountered this; in general, exporting a model to ONNX does not affect its accuracy.
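To locate where the accuracy is lost (at ONNX export or at NB quantization), one common check is to feed the same preprocessed image to each model and compare the raw output tensors. A minimal sketch, assuming the outputs have already been flattened into Python lists of floats with the same layout; `compare_outputs` is a hypothetical helper:

```python
import math


def compare_outputs(reference, candidate):
    """Return (cosine similarity, max absolute difference) of two flat outputs."""
    if len(reference) != len(candidate):
        raise ValueError("outputs have different sizes")
    dot = sum(r * c for r, c in zip(reference, candidate))
    norm_r = math.sqrt(sum(r * r for r in reference))
    norm_c = math.sqrt(sum(c * c for c in candidate))
    cosine = dot / (norm_r * norm_c) if norm_r and norm_c else 0.0
    max_abs = max((abs(r - c) for r, c in zip(reference, candidate)), default=0.0)
    return cosine, max_abs


if __name__ == "__main__":
    pt_out = [0.1, 2.0, -0.5, 3.2]  # hypothetical flattened outputs
    onnx_out = [0.1, 1.9, -0.4, 3.2]
    print(compare_outputs(pt_out, onnx_out))
```

A cosine similarity well below 1.0 between the best.pt and best.onnx outputs would point at the export step rather than at quantization.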

You can try exporting the model to another format, such as pb or TFLite, then converting that to an NB model and running it on the VIM3 to check whether it gives correct results.