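Preface: By following the document "Getting Started from Scratch with darknet-YOLO3 and YOLOv3-Tiny", we now have our own trained YOLO3 or YOLOv3-Tiny model. This guide shows step by step how to convert that model and integrate it into khadas_android_npu_app on the VIM3 Android platform. For the VIM3 Ubuntu platform, see the separate document for that platform.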

I. Convert the model

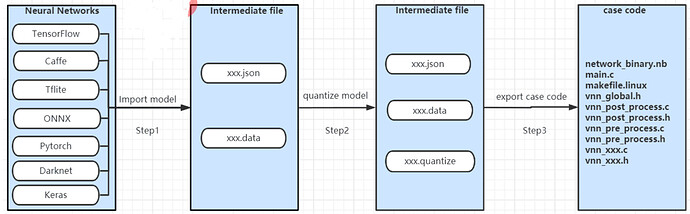

Model conversion flowchart:

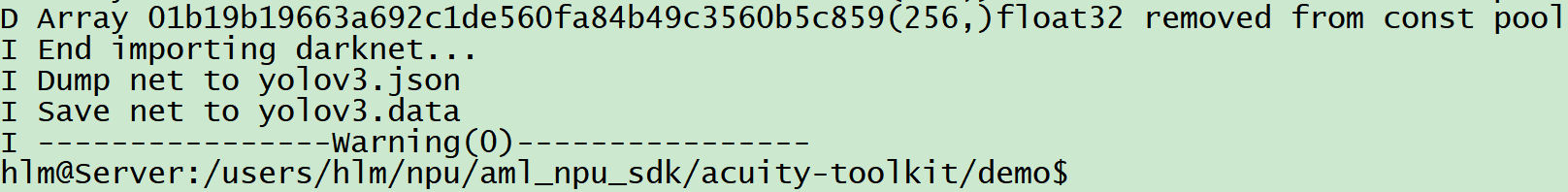

1. Import the model

The whole conversion is carried out in the acuity-toolkit directory:

cd {workspace}/aml_npu_sdk/acuity-toolkit

cp {workspace}/yolov3-khadas_ai.cfg_train demo/model/

cp {workspace}/yolov3-khadas_ai_last.weights demo/model/

cp {workspace}/test.jpg demo/model/

Modify the scripts as follows:

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/0_import_model.sh b/acuity-toolkit/demo/0_import_model.sh

index 3198810..4671c9f 100755

--- a/acuity-toolkit/demo/0_import_model.sh

+++ b/acuity-toolkit/demo/0_import_model.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolov3

ACUITY_PATH=../bin/

convert_caffe=${ACUITY_PATH}convertcaffe

@@ -11,13 +11,13 @@ convert_onnx=${ACUITY_PATH}convertonnx

convert_keras=${ACUITY_PATH}convertkeras

convert_pytorch=${ACUITY_PATH}convertpytorch

-$convert_tf \

- --tf-pb ./model/mobilenet_v1.pb \

- --inputs input \

- --input-size-list '224,224,3' \

- --outputs MobilenetV1/Predictions/Softmax \

- --net-output ${NAME}.json \

- --data-output ${NAME}.data

+#$convert_tf \

+# --tf-pb ./model/mobilenet_v1.pb \

+# --inputs input \

+# --input-size-list '224,224,3' \

+# --outputs MobilenetV1/Predictions/Softmax \

+# --net-output ${NAME}.json \

+# --data-output ${NAME}.data

#$convert_caffe \

# --caffe-model xx.prototxt \

@@ -30,11 +30,11 @@ $convert_tf \

# --net-output ${NAME}.json \

# --data-output ${NAME}.data

-#$convert_darknet \

-# --net-input xxx.cfg \

-# --weight-input xxx.weights \

-# --net-output ${NAME}.json \

-# --data-output ${NAME}.data

+$convert_darknet \

+ --net-input ./model/yolov3-khadas_ai.cfg_train \

+ --weight-input ./model/yolov3-khadas_ai_last.weights \

+ --net-output ${NAME}.json \

+ --data-output ${NAME}.data

--- a/acuity-toolkit/demo/data/validation_tf.txt

+++ b/acuity-toolkit/demo/data/validation_tf.txt

@@ -1 +1 @@

-./space_shuttle_224.jpg, 813

+./test.jpg

Run the script:

bash 0_import_model.sh

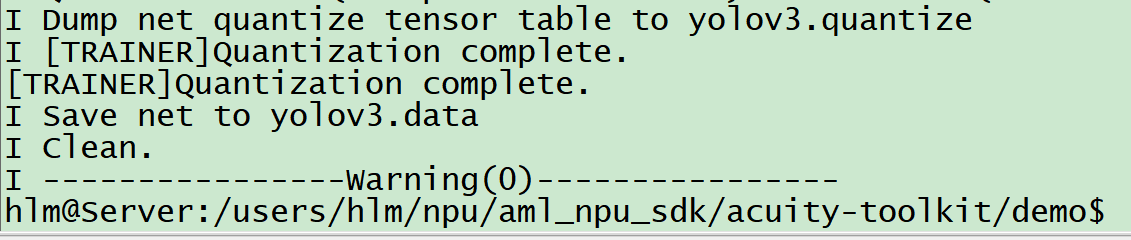

2. Quantize the model

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/1_quantize_model.sh b/acuity-toolkit/demo/1_quantize_model.sh

index 630ea7f..ee7bd00 100755

--- a/acuity-toolkit/demo/1_quantize_model.sh

+++ b/acuity-toolkit/demo/1_quantize_model.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolov3

ACUITY_PATH=../bin/

tensorzone=${ACUITY_PATH}tensorzonex

@@ -11,12 +11,12 @@ $tensorzone \

--dtype float32 \

--source text \

--source-file data/validation_tf.txt \

- --channel-mean-value '128 128 128 128' \

- --reorder-channel '0 1 2' \

+ --channel-mean-value '0 0 0 256' \

+ --reorder-channel '2 1 0' \

--model-input ${NAME}.json \

--model-data ${NAME}.data \

--model-quantize ${NAME}.quantize \

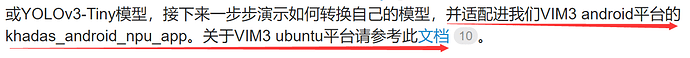

- --quantized-dtype asymmetric_affine-u8 \

+ --quantized-dtype dynamic_fixed_point-i8 \

注意,这里 --quantized-dtype 跟ubuntu平台不一样。执行对应脚本:

bash 1_quantize_model.sh
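To make the two changed options more concrete, here is a minimal Python sketch of the preprocessing they are assumed to describe. It reads --channel-mean-value as three per-channel means followed by one scale, applied as (pixel - mean) / scale, and --reorder-channel '2 1 0' as reversing the channel order. This reading is an assumption about the Acuity tools rather than something stated in this guide, and the function name `preprocess_pixel` is hypothetical.

```python
def preprocess_pixel(pixel, channel_mean_value="0 0 0 256", reorder_channel="2 1 0"):
    """Apply the assumed reorder + (value - mean) / scale step to one pixel."""
    # Assumption: the option holds three channel means followed by one scale.
    *means, scale = [float(v) for v in channel_mean_value.split()]
    order = [int(i) for i in reorder_channel.split()]
    # Output channel k takes its value from input channel order[k].
    reordered = [pixel[i] for i in order]
    # Subtract the per-channel mean, then divide by the shared scale.
    return [(v - m) / scale for v, m in zip(reordered, means)]


if __name__ == "__main__":
    # Values used in this guide: no mean shift, divide by 256, reversed channels.
    print(preprocess_pixel((255, 128, 0)))
    # Values from the original mobilenet script, for comparison.
    print(preprocess_pixel((255, 128, 0), "128 128 128 128", "0 1 2"))
```

Under this reading, '0 0 0 256' maps 8-bit pixel values into the range [0, 1), while the original '128 128 128 128' maps them into roughly [-1, 1).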

3. Generate the case code

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/2_export_case_code.sh b/acuity-toolkit/demo/2_export_case_code.sh

index 85b101b..867c5b9 100755

--- a/acuity-toolkit/demo/2_export_case_code.sh

+++ b/acuity-toolkit/demo/2_export_case_code.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolov3

ACUITY_PATH=../bin/

export_ovxlib=${ACUITY_PATH}ovxgenerator

@@ -8,8 +8,8 @@ export_ovxlib=${ACUITY_PATH}ovxgenerator

$export_ovxlib \

--model-input ${NAME}.json \

--data-input ${NAME}.data \

- --reorder-channel '0 1 2' \

- --channel-mean-value '128 128 128 128' \

+ --reorder-channel '2 1 0' \

+ --channel-mean-value '0 0 0 256' \

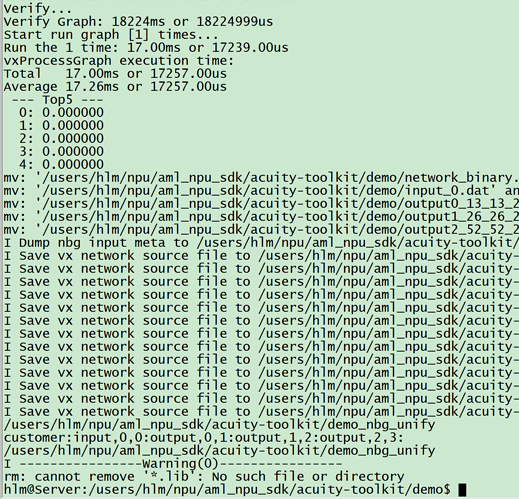

Run the script:

bash 2_export_case_code.sh

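Once the scripts are known to run without errors, you can make all of the modifications above first and then run the three scripts in one go: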

bash 0_import_model.sh && bash 1_quantize_model.sh && bash 2_export_case_code.sh

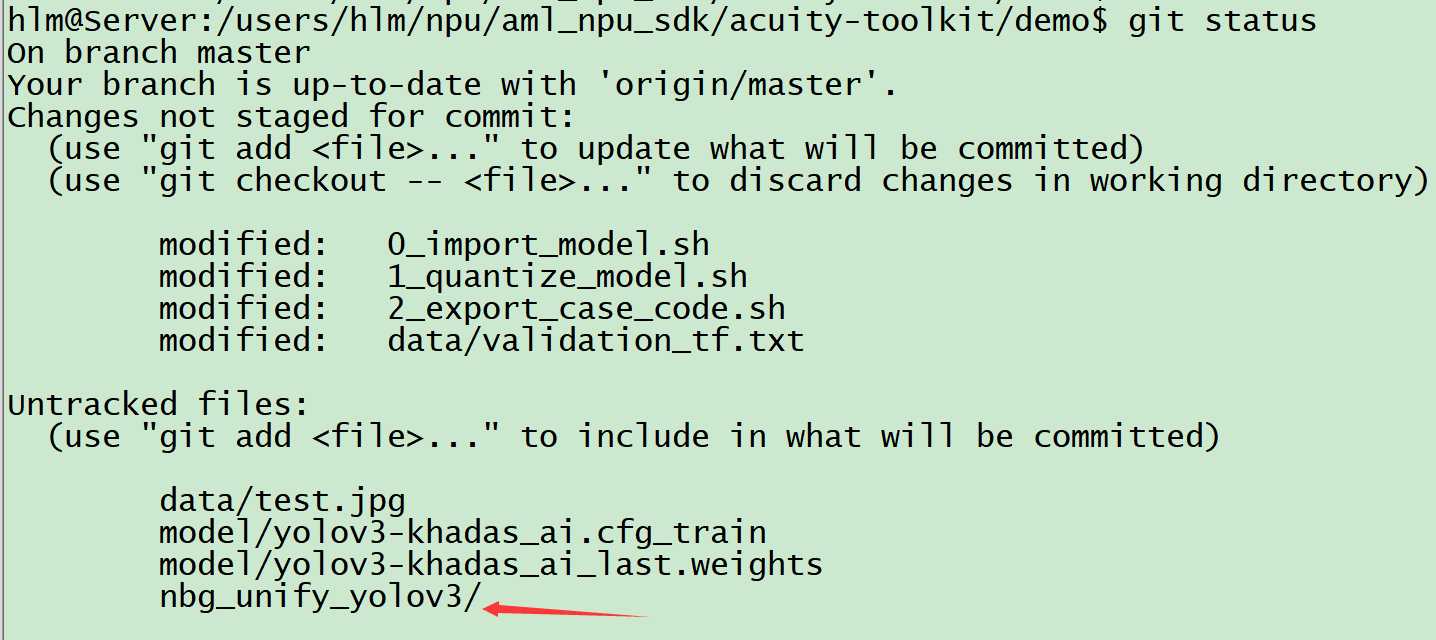

This produces an nbg_unify_yolov3 directory.

II. Import into the demo app on the VIM3 Android platform and run

1. Install the NDK build environment

wget https://dl.google.com/android/repository/android-ndk-r17-linux-x86_64.zip

unzip android-ndk-r17-linux-x86_64.zip

vim ~/.bashrc

## Append the following two lines (without the leading ##) to the end of the file

##export NDKROOT=/path/to/android-ndk-r17

##export PATH=$NDKROOT:$PATH

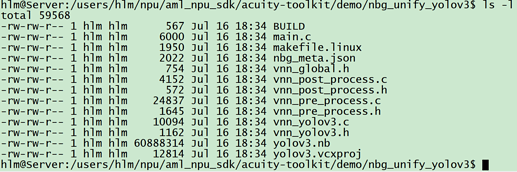

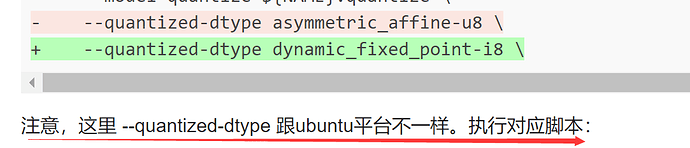

As shown in the figure.

Then run this command in the SSH session you are going to use:

source ~/.bashrc

2. Build the required .so libraries

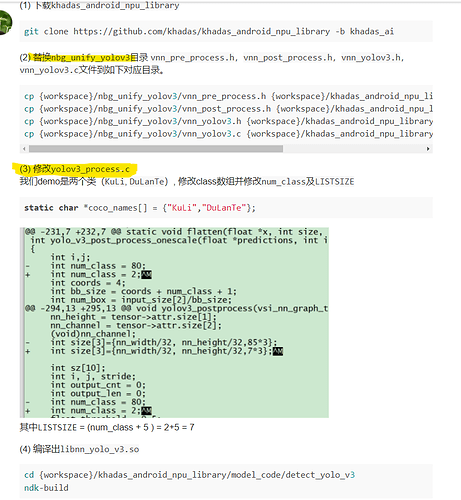

(1) Download khadas_android_npu_library

git clone https://gitlab.com/khadas/khadas_android_npu_library -b khadas_ai

(2) Copy vnn_pre_process.h, vnn_post_process.h, vnn_yolov3.h and vnn_yolov3.c from the generated nbg_unify_yolov3 directory into the corresponding directories below, replacing the existing files.

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolov3/vnn_pre_process.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolov3/vnn_post_process.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolov3/vnn_yolov3.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolov3/vnn_yolov3.c {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3/jni/

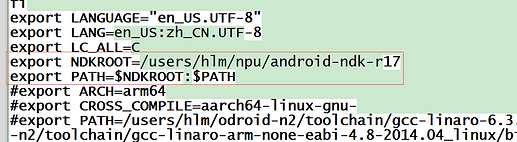

(3) Modify yolov3_process.c

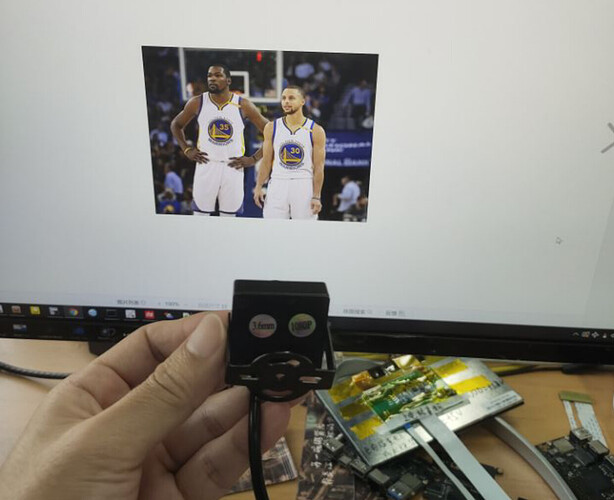

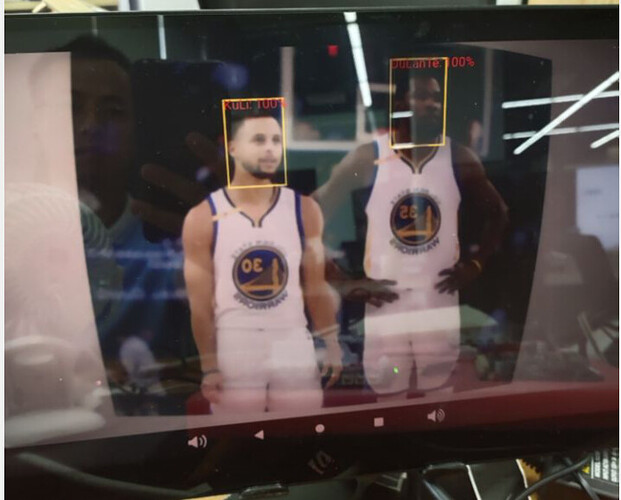

Our demo has two classes (KuLi and DuLanTe), so change the class-name array and update num_class and LISTSIZE to match:

static char *coco_names[] = {"KuLi","DuLanTe"};

where

LISTSIZE = num_class + 5 = 2 + 5 = 7 (the 5 covers the four box coordinates plus the objectness score)

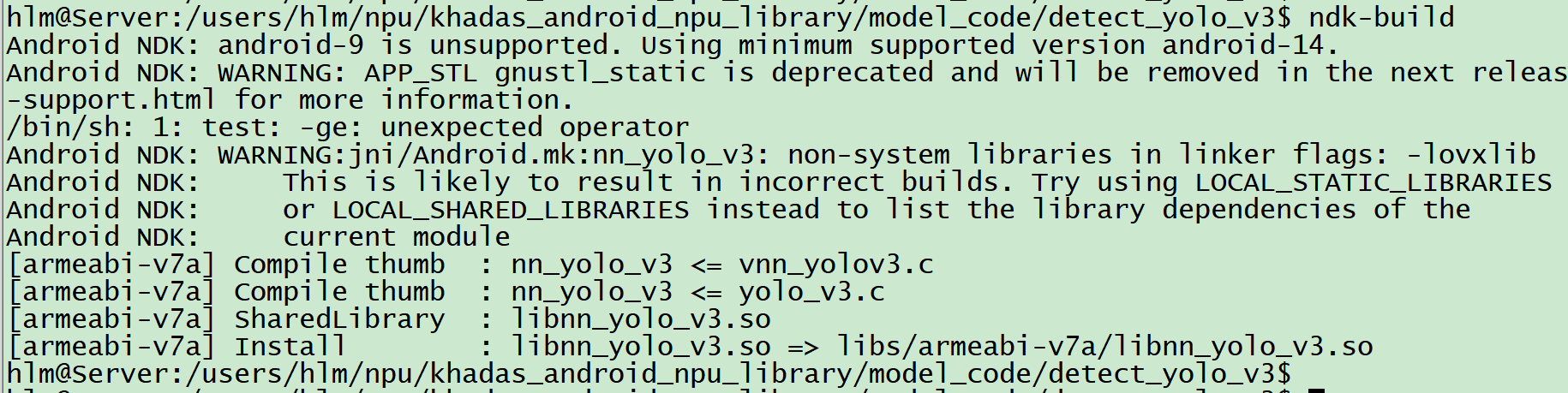
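The arithmetic behind LISTSIZE can be sketched in Python. Each predicted box carries 4 coordinates, 1 objectness score and one score per class. The sketch also assumes the standard YOLOv3 layout of 3 anchor boxes per grid cell, which is why the convolution in front of each yolo layer in a darknet cfg uses filters = 3 * (classes + 5). The function names are illustrative only and are not taken from yolov3_process.c.

```python
BOX_FIELDS = 5  # x, y, w, h and the objectness score


def list_size(num_class):
    """Number of values per predicted box (LISTSIZE in the text above)."""
    return num_class + BOX_FIELDS


def output_channels(num_class, anchors_per_cell=3):
    """Channels of one YOLO output tensor, assuming 3 anchors per grid cell."""
    return anchors_per_cell * list_size(num_class)


if __name__ == "__main__":
    print(list_size(2))         # two classes: KuLi and DuLanTe
    print(output_channels(2))   # channels per output scale for two classes
    print(list_size(80))        # the stock 80-class COCO model, for comparison
```

If the number of classes changes later, num_class and LISTSIZE need to change together, and the filters value in the training cfg follows the same formula.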

(4) Build libnn_yolo_v3.so

cd {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3

ndk-build

3. Import the libraries into the demo app and run

(1) Download the demo app

git clone https://github.com/khadas/khadas_android_npu_app -b khadas_ai

(2) Replace libnn_yolo_v3.so

cp {workspace}/khadas_android_npu_library/model_code/detect_yolo_v3/libs/armeabi-v7a/libnn_yolo_v3.so {workspace}/khadas_android_npu_app/app/libs/armeabi-v7a/

(3) Replace the nb file

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolov3/yolov3.nb {workspace}/khadas_android_npu_app/app/src/main/assets/yolov3_88.nb

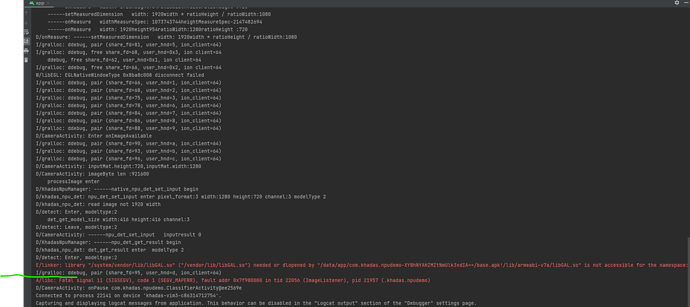

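(4) Done. Import the khadas_android_npu_app project into Android Studio, then build it and run it on the VIM3 board.

Supplement: YOLOv3-Tiny

1. Import the model

As before, the whole conversion is carried out in the acuity-toolkit directory: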

cd {workspace}/aml_npu_sdk/acuity-toolkit

cp {workspace}/yolov3-khadas_ai_tiny.cfg_train demo/model/

cp {workspace}/yolov3-khadas_ai_tiny_last.weights demo/model/

cp {workspace}/test.jpg demo/model/

Modify the scripts as follows:

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/0_import_model.sh b/acuity-toolkit/demo/0_import_model.sh

index 3198810..3b4efd3 100755

--- a/acuity-toolkit/demo/0_import_model.sh

+++ b/acuity-toolkit/demo/0_import_model.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolotiny

ACUITY_PATH=../bin/

convert_caffe=${ACUITY_PATH}convertcaffe

@@ -11,13 +11,13 @@ convert_onnx=${ACUITY_PATH}convertonnx

convert_keras=${ACUITY_PATH}convertkeras

convert_pytorch=${ACUITY_PATH}convertpytorch

-$convert_tf \

- --tf-pb ./model/mobilenet_v1.pb \

- --inputs input \

- --input-size-list '224,224,3' \

- --outputs MobilenetV1/Predictions/Softmax \

- --net-output ${NAME}.json \

- --data-output ${NAME}.data

+#$convert_tf \

+# --tf-pb ./model/mobilenet_v1.pb \

+# --inputs input \

+# --input-size-list '224,224,3' \

+# --outputs MobilenetV1/Predictions/Softmax \

+# --net-output ${NAME}.json \

+# --data-output ${NAME}.data

#$convert_caffe \

# --caffe-model xx.prototxt \

@@ -30,11 +30,11 @@ $convert_tf \

# --net-output ${NAME}.json \

# --data-output ${NAME}.data

-#$convert_darknet \

-# --net-input xxx.cfg \

-# --weight-input xxx.weights \

-# --net-output ${NAME}.json \

-# --data-output ${NAME}.data

+$convert_darknet \

+ --net-input ./model/yolov3-khadas_ai_tiny.cfg_train \

+ --weight-input ./model/yolov3-khadas_ai_tiny_last.weights \

+ --net-output ${NAME}.json \

+ --data-output ${NAME}.data

--- a/acuity-toolkit/demo/data/validation_tf.txt

+++ b/acuity-toolkit/demo/data/validation_tf.txt

@@ -1 +1 @@

-./space_shuttle_224.jpg, 813

+./test.jpg

2. Quantize the model

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/1_quantize_model.sh b/acuity-toolkit/demo/1_quantize_model.sh

index 630ea7f..ee7bd00 100755

--- a/acuity-toolkit/demo/1_quantize_model.sh

+++ b/acuity-toolkit/demo/1_quantize_model.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolotiny

ACUITY_PATH=../bin/

tensorzone=${ACUITY_PATH}tensorzonex

@@ -11,12 +11,12 @@ $tensorzone \

--dtype float32 \

--source text \

--source-file data/validation_tf.txt \

- --channel-mean-value '128 128 128 128' \

- --reorder-channel '0 1 2' \

+ --channel-mean-value '0 0 0 256' \

+ --reorder-channel '2 1 0' \

--model-input ${NAME}.json \

--model-data ${NAME}.data \

--model-quantize ${NAME}.quantize \

- --quantized-dtype asymmetric_affine-u8 \

+ --quantized-dtype dynamic_fixed_point-i8 \

Note that --quantized-dtype here differs from the one used on the Ubuntu platform.

3. Generate the case code

hlm@Server:/users/hlm/npu/aml_npu_sdk/acuity-toolkit/demo$ git diff

diff --git a/acuity-toolkit/demo/2_export_case_code.sh b/acuity-toolkit/demo/2_export_case_code.sh

index 85b101b..867c5b9 100755

--- a/acuity-toolkit/demo/2_export_case_code.sh

+++ b/acuity-toolkit/demo/2_export_case_code.sh

@@ -1,6 +1,6 @@

#!/bin/bash

-NAME=mobilenet_tf

+NAME=yolotiny

ACUITY_PATH=../bin/

export_ovxlib=${ACUITY_PATH}ovxgenerator

@@ -8,8 +8,8 @@ export_ovxlib=${ACUITY_PATH}ovxgenerator

$export_ovxlib \

--model-input ${NAME}.json \

--data-input ${NAME}.data \

- --reorder-channel '0 1 2' \

- --channel-mean-value '128 128 128 128' \

+ --reorder-channel '2 1 0' \

+ --channel-mean-value '0 0 0 256' \

After making the modifications above, run the three scripts in one go:

bash 0_import_model.sh && bash 1_quantize_model.sh && bash 2_export_case_code.sh

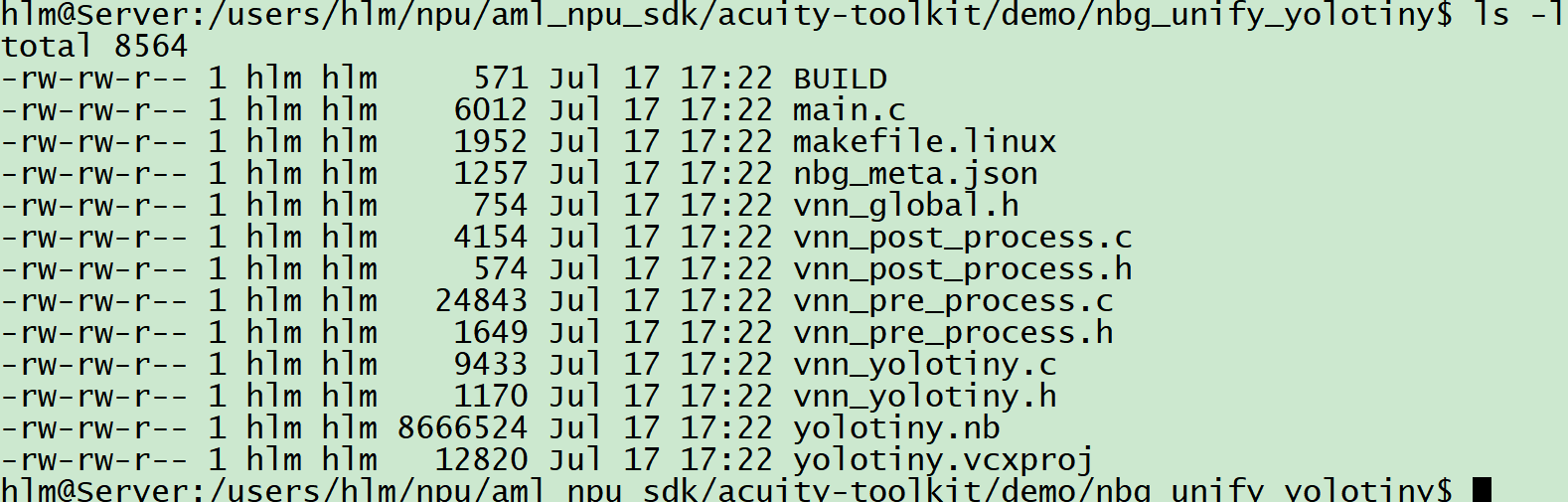

This produces an nbg_unify_yolotiny directory under the demo directory.

II. Import into the demo app on the VIM3 Android platform and run

1. Install the NDK build environment

wget https://dl.google.com/android/repository/android-ndk-r17-linux-x86_64.zip

unzip android-ndk-r17-linux-x86_64.zip

vim ~/.bashrc

## Append the following two lines (without the leading ##) to the end of the file

##export NDKROOT=/path/to/android-ndk-r17

##export PATH=$NDKROOT:$PATH

Then run this command in the SSH session you are going to use:

source ~/.bashrc

2. Build the required .so libraries

(1) Download khadas_android_npu_library

git clone https://gitlab.com/khadas/khadas_android_npu_library -b khadas_ai

(2) Add the khadas_android_npu_library/model_code/detect_yolo_tiny/ directory and its code

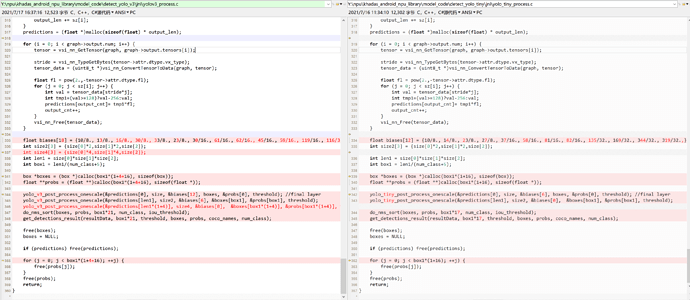

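The relevant code has already been prepared. Compare it with the yolov3 version to see the exact differences; they are mainly in the *_process.c files, as shown in the figure.

(3) Copy vnn_pre_process.h, vnn_post_process.h, vnn_yolotiny.h and vnn_yolotiny.c from the generated nbg_unify_yolotiny directory into the corresponding directories below, replacing the existing files.

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolotiny/vnn_pre_process.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolotiny/vnn_post_process.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolotiny/vnn_yolotiny.h {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny/jni/include/

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolotiny/vnn_yolotiny.c {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny/jni/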

(4) Modify yolo_tiny_process.c

Our demo has two classes (KuLi and DuLanTe), so change the class-name array and update num_class and LISTSIZE to match:

static char *coco_names[] = {"KuLi","DuLanTe"};

where

LISTSIZE = num_class + 5 = 2 + 5 = 7 (the 5 covers the four box coordinates plus the objectness score)

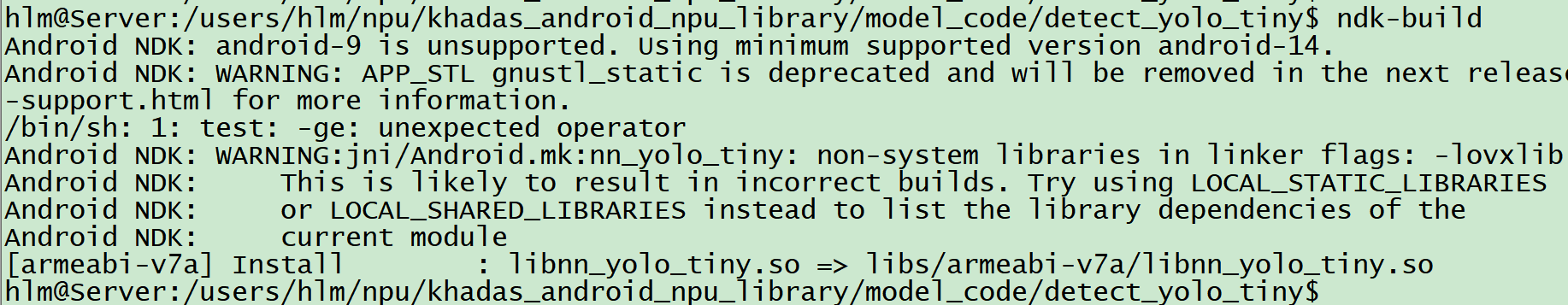

(5) Build libnn_yolo_tiny.so

cd {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny

ndk-build

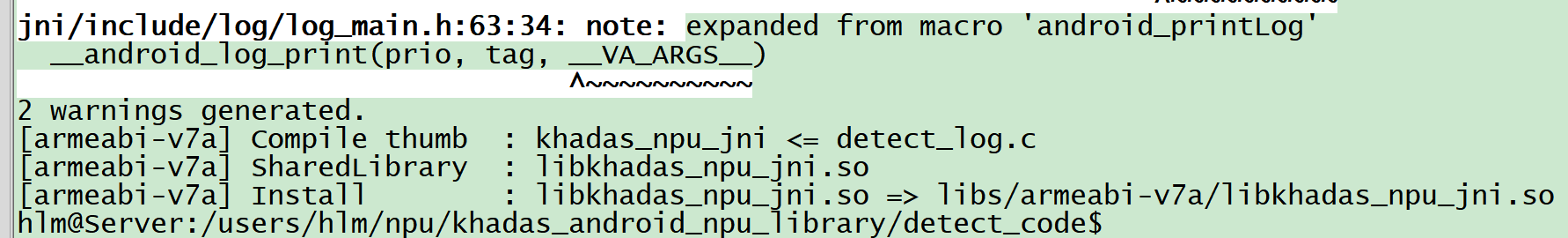

(6) Build libkhadas_npu_jni.so

First add the following code:

hlm@Server:/users/hlm/npu/khadas_android_npu_library/detect_code$ git diff

diff --git a/detect_code/jni/khadas_npu_det.cpp b/detect_code/jni/khadas_npu_det.cpp

index 7db2632..f90862e

--- a/detect_code/jni/khadas_npu_det.cpp

+++ b/detect_code/jni/khadas_npu_det.cpp

@@ -159,6 +159,9 @@ static jint npu_det_set_model(JNIEnv *env, jclass clazz __unused,jint modelType)

case 2:

type = DET_YOLO_V3;

break;

+ case 3:

+ type = DET_YOLO_TINY;

+ break;

default:

type = DET_FACENET;

break;

@@ -201,6 +204,9 @@ static jint npu_det_get_result(JNIEnv *env, jclass clazz __unused,jobject detres

case 2:

type = DET_YOLO_V3;

break;

+ case 3:

+ type = DET_YOLO_TINY;

+ break;

default:

type = DET_FACENET;

break;

@@ -311,6 +317,9 @@ static jint npu_det_set_input(JNIEnv *env, jclass clazz __unused,jbyteArray imgb

case 2:

type = DET_YOLO_V3;

break;

+ case 3:

+ type = DET_YOLO_TINY;

+ break;

Build:

hlm@Server:/users/hlm/npu/khadas_android_npu_library/detect_code$ ndk-build
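The new `case 3` has to line up with the app side: the app passes `mode_type.ordinal()` to `npu_det_set_model`, and the Java change in the next section appends DET_YOLO_TINY as the fourth enum entry, so its ordinal is 3. The Python sketch below only illustrates that mapping. It mirrors the Java enum order with explicit integers, and `JNI_CASES` is a hypothetical stand-in for the C++ switch that contains only the cases visible in the diff.

```python
from enum import IntEnum


class ModeType(IntEnum):
    """Mirror of the Java enum; each value equals Java's ordinal()."""
    DET_YOLOFACE_V2 = 0
    DET_YOLO_V2 = 1
    DET_YOLO_V3 = 2
    DET_YOLO_TINY = 3


# Only the switch cases visible in the diff; cases 0 and 1 are not shown there.
JNI_CASES = {2: "DET_YOLO_V3", 3: "DET_YOLO_TINY"}


def jni_type(model_type, cases=JNI_CASES, default="DET_FACENET"):
    """Look up the native type for an ordinal, falling back like `default:`."""
    return cases.get(int(model_type), default)


if __name__ == "__main__":
    # With the added case 3, the tiny model is selected.
    print(jni_type(ModeType.DET_YOLO_TINY))
    # Without it, ordinal 3 would fall through to the default branch.
    print(jni_type(ModeType.DET_YOLO_TINY, cases={2: "DET_YOLO_V3"}))
```

Because the mapping relies on position, a new model type should be appended at the end of the Java enum rather than inserted in the middle, otherwise the existing case numbers would no longer match.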

3. Import the libraries into the demo app and run

(1) Download the demo app

git clone https://github.com/khadas/khadas_android_npu_app -b khadas_ai

(2) Replace libnn_yolo_tiny.so

cp {workspace}/khadas_android_npu_library/model_code/detect_yolo_tiny/libs/armeabi-v7a/libnn_yolo_tiny.so {workspace}/khadas_android_npu_app/app/libs/armeabi-v7a/

(3) Replace libkhadas_npu_jni.so

cp {workspace}/khadas_android_npu_library/detect_code/libs/armeabi-v7a/libkhadas_npu_jni.so {workspace}/khadas_android_npu_app/app/libs/armeabi-v7a/

(4) Replace the nb file

cp {workspace}/aml_npu_sdk/acuity-toolkit/demo/nbg_unify_yolotiny/yolotiny.nb {workspace}/khadas_android_npu_app/app/src/main/assets/yolotiny_88.nb

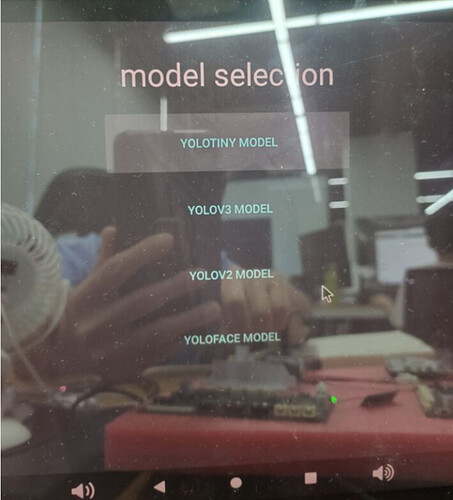
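The `_88` suffix in the target file name matters because the app chooses the nb asset by board name: in the CameraActivity.java change in step (5), the `_88.nb` file is opened when the board string equals `kvim3`, and the `_99.nb` file otherwise. A minimal Python sketch of that selection rule follows; the function name `nb_asset_name` is hypothetical.

```python
def nb_asset_name(model, board):
    """Return the asset file name the app would open for a model and board."""
    # Same rule as the Java code: "kvim3" selects _88, any other board _99.
    suffix = "88" if board == "kvim3" else "99"
    return f"{model}_{suffix}.nb"


if __name__ == "__main__":
    print(nb_asset_name("yolotiny", "kvim3"))
    print(nb_asset_name("yolotiny", "some_other_board"))
```

On a VIM3 the converted file therefore has to be stored as yolotiny_88.nb, which is what the cp command above does.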

(5) Add a yolo_tiny button and its handler to the app

Modify the code as follows:

hlm@Server:/users/hlm/npu/khadas_android_npu_app$ git diff app/src/

diff --git a/app/src/main/java/com/khadas/npudemo/CameraActivity.java b/app/src/main/java/com/khadas/npudemo/CameraActivity.java

index 20f3e8d..dc91f60

--- a/app/src/main/java/com/khadas/npudemo/CameraActivity.java

+++ b/app/src/main/java/com/khadas/npudemo/CameraActivity.java

@@ -111,7 +111,8 @@ public abstract class CameraActivity extends AppCompatActivity implements Camera

public enum ModeType {

DET_YOLOFACE_V2,

DET_YOLO_V2,

- DET_YOLO_V3

+ DET_YOLO_V3,

+ DET_YOLO_TINY

}

static ModeType mode_type;

@@ -162,6 +163,12 @@ public abstract class CameraActivity extends AppCompatActivity implements Camera

} else {

in = assmgr.open("yolov3_99.nb");

}

+ } if(mode_type == ModeType.DET_YOLO_TINY) {

+ if(mStrboard.equals("kvim3")) {

+ in = assmgr.open("yolotiny_88.nb");

+ } else {

+ in = assmgr.open("yolotiny_99.nb");

+ }

} if(mode_type == ModeType.DET_YOLOFACE_V2) {

if(mStrboard.equals("kvim3")) {

in = assmgr.open("yolo_face_88.nb");

@@ -278,7 +285,14 @@ public abstract class CameraActivity extends AppCompatActivity implements Camera

}

setmoderesult = inceptionv3.npu_det_set_model(mode_type.ordinal());

}

-

+ if(mode_type == ModeType.DET_YOLO_TINY) {

+ if(mStrboard.equals("kvim3")) {

+ copyNbFile(this, "yolotiny_88.nb");

+ } else {

+ copyNbFile(this, "yolotiny_99.nb");

+ }

+ setmoderesult = inceptionv3.npu_det_set_model(mode_type.ordinal());

+ }

if(mode_type == ModeType.DET_YOLOFACE_V2) {

if(mStrboard.equals("kvim3")) {

copyNbFile(this, "yolo_face_88.nb");

diff --git a/app/src/main/java/com/khadas/npudemo/MainActivity.java b/app/src/main/java/com/khadas/npudemo/MainActivity.java

index e858c2a..e85d6a9

--- a/app/src/main/java/com/khadas/npudemo/MainActivity.java

+++ b/app/src/main/java/com/khadas/npudemo/MainActivity.java

@@ -13,11 +13,13 @@ import android.app.AlertDialog;

import android.content.DialogInterface;

public class MainActivity extends AppCompatActivity implements View.OnClickListener{

+ private Button button_yolotiny;

private Button button_yolov3;

private Button button_yolov2;

private Button button_yoloface;

public static final String Intent_key="modetype";

private static final String TAG = "MainActivity";

+ AlertDialog.Builder alertDialog0;

AlertDialog.Builder alertDialog;

AlertDialog.Builder alertDialog2;

AlertDialog.Builder alertDialog3;

@@ -25,7 +27,8 @@ public class MainActivity extends AppCompatActivity implements View.OnClickListe

public enum ModeType {

DET_YOLOFACE_V2,

DET_YOLO_V2,

- DET_YOLO_V3

+ DET_YOLO_V3,

+ DET_YOLO_TINY

}

@@ -38,15 +41,35 @@ public class MainActivity extends AppCompatActivity implements View.OnClickListe

TextView textView = (TextView)findViewById(R.id.title);

textView.setText("model selection");

-

+ button_yolotiny = (Button) findViewById(R.id.button_yolotiny);

button_yolov3 = (Button) findViewById(R.id.button_yolov3);

button_yolov2 = (Button) findViewById(R.id.button_yolov2);

button_yoloface = (Button) findViewById(R.id.button_yoloface);

+ button_yolotiny.setOnClickListener(this);

button_yolov3.setOnClickListener(this);

button_yolov2.setOnClickListener(this);

button_yoloface.setOnClickListener(this);

+ alertDialog0 = new AlertDialog.Builder(MainActivity.this);

+ alertDialog0.setTitle("prompt");

+ alertDialog0.setMessage("yolotiny image recognition model will run");

+ alertDialog0.setNegativeButton("cancel", new DialogInterface.OnClickListener() {// add the cancel button

+ @Override

+ public void onClick(DialogInterface dialogInterface, int i) {

+ Log.e(TAG, "AlertDialog cancel");

+ //onClickNo();

+ }

+ })

+ .setPositiveButton("ok", new DialogInterface.OnClickListener() {// add the "Yes" button

+ @Override

+ public void onClick(DialogInterface dialogInterface, int i) {

+ Log.e(TAG, "AlertDialog ok");

+ onClickYolovTiny();

+ }

+ })

+ .create();

+

alertDialog = new AlertDialog.Builder(MainActivity.this);

alertDialog.setTitle("prompt");

alertDialog.setMessage("yolov3 image recognition model will run");

@@ -112,6 +135,13 @@ public class MainActivity extends AppCompatActivity implements View.OnClickListe

public void onClick(View v) {

//Log.e(TAG,"OnClickListener");

switch (v.getId()) {

+ case R.id.button_yolotiny:

+ Log.e(TAG, "button_yolotiny");

+ //onClickButton1(v);

+ alertDialog0.setCancelable(false);// the dialog does not close when the user taps outside it

+ alertDialog0.show();

+ buttonSetFocus(v);

+ break;

case R.id.button_yolov3:

Log.e(TAG, "button_yolov3");

//onClickButton1(v);

@@ -146,7 +176,14 @@ public class MainActivity extends AppCompatActivity implements View.OnClickListe

button.requestFocusFromTouch();

}

-

+

+ private void onClickYolovTiny() {

+ // handling logic

+ Log.e(TAG, "button_yolovtiny enter ");

+ Intent intent = new Intent(this,ClassifierActivity.class);

+ intent.putExtra(Intent_key,ModeType.DET_YOLO_TINY.ordinal());

+ startActivity(intent);

+ }

private void onClickYolov3() {

// handling logic

diff --git a/app/src/main/res/layout/activity_main.xml b/app/src/main/res/layout/activity_main.xml

index 41ba40c..e2216d2

--- a/app/src/main/res/layout/activity_main.xml

+++ b/app/src/main/res/layout/activity_main.xml

@@ -23,6 +23,12 @@

android:text="model select"/>

<Button

+ android:id="@+id/button_yolotiny"

+ android:layout_width="300dp"

+ android:layout_height="80dp"

+ style="?android:attr/buttonBarButtonStyle"

+ android:text="yolotiny model" />

+ <Button

android:id="@+id/button_yolov3"

android:layout_width="300dp"

android:layout_height="80dp"

(6) Done. Import the khadas_android_npu_app project into Android Studio, then build it and run it on the VIM3 board.