@OldNavi Looks interesting, this piece is amazing!

I’m impressed. Big respect!!!

Chapter VII - «Love and hate in C++»

I love and hate C++. That’s it.

After looking at how to control all the buttons, LEDs, encoders, the VU meter and so on, I made the hard decision to write the control software from scratch. Since the requirement was to keep resource consumption as low as possible, I decided to write it in C++ (and perhaps also just to train myself in it…). Python and other languages are fine, but they don't fit this scenario.

So, I wrote «Chief».

The idea was to use the concept of backends, where each backend is responsible for communicating with a different subsystem. So far I have implemented the following backends inside Chief:

1/ I2C backend - talks over the I2C bus to the VU meter, which in turn is an STM32 MCU running code that drives NeoPixel LEDs.

2/ Arduino backend - the core board, which handles all 19 buttons, 12 ordinary LEDs + 7 dual-color LEDs, 6 encoders, the PSU control pins and a UART to communicate with the Khadas SBC. That consumed all the pins available on the ATmega128A chip.

3/ System backend - fairly self-explanatory: a backend responsible for running any command or program on Linux.

4/ Sushi backend - handles communication with the main Sushi DAW program and is responsible for getting and sending information such as audio plugin parameter changes, setting the BPM and so on. It works over gRPC (with protobuf messages) and can control almost every aspect of the DAW.

5/ Variables backend - controls all variables inside Chief.

Every backend also provides a set of commands in a common command pool that you can run against it, such as turning an LED on/off, setting an encoder value, sending a parameter to the DAW or shutting down the SBC. There are plenty of commands available to cover almost every scenario.
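To make the backend/command-pool idea more concrete, here is a minimal C++17 sketch. It is not Chief's actual code (which isn't published); the names `Backend`, `CommandPool` and `LedBackend`, and the assumption that commands take a list of integers, are all hypothetical. The toy LED backend just records states in memory instead of sending them over UART.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical argument type: real commands would take mixed argument types.
using Args = std::vector<int>;
using Command = std::function<void(const Args&)>;

// Shared pool where every backend registers its commands by name.
class CommandPool {
public:
    void add(const std::string& name, Command cmd) { commands_[name] = std::move(cmd); }

    void run(const std::string& name, const Args& args) const {
        auto it = commands_.find(name);
        if (it == commands_.end()) throw std::runtime_error("unknown command: " + name);
        it->second(args);
    }

    bool has(const std::string& name) const { return commands_.count(name) > 0; }

private:
    std::map<std::string, Command> commands_;
};

// Each backend talks to one subsystem and exposes its commands to the pool.
class Backend {
public:
    virtual ~Backend() = default;
    virtual void registerCommands(CommandPool& pool) = 0;
};

// Toy stand-in for the board backend: stores LED states instead of using UART.
class LedBackend : public Backend {
public:
    std::map<int, int> leds;  // led id -> state

    void registerCommands(CommandPool& pool) override {
        pool.add("setled", [this](const Args& a) {
            if (a.size() != 2) throw std::invalid_argument("setled expects [id, state]");
            leds[a[0]] = a[1];
        });
    }
};
```

With this shape, a config line such as `- setled: [5,1]` only needs a name lookup in the pool, and the pool never has to know which backend owns the command.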

What is a variable inside Chief? It is a special data type (bool, int, float, string or reference) that I built on top of the std::variant class. In general, any backend entity can establish a variable and apply some transformation to it. For example, a peak meter plugin in Sushi sends its level in decibels as a float, but the VU meter just needs the number of LEDs to turn on/off. So I have a transformation function that automatically converts a float value on a 0…1 scale into the number of LEDs to activate, every time the Sushi backend receives it from the DAW (which happens 25 times per second).
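A minimal sketch of a `std::variant`-based variable with an attached transformation could look like this. The `Variable` class, the omission of the "reference" type, and the 0…1 to LED-count mapping with an assumed 16-LED bar are illustrative assumptions, not Chief's published code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <string>
#include <utility>
#include <variant>

// Value types listed in the post (the "reference" type is left out here).
using Value = std::variant<bool, int, float, std::string>;

// A named value with an optional transformation applied on every update.
class Variable {
public:
    using Transform = std::function<Value(const Value&)>;

    explicit Variable(std::string name, Value init = 0)
        : name_(std::move(name)), value_(std::move(init)) {}

    void setTransform(Transform t) { transform_ = std::move(t); }

    // Called by a backend whenever new data arrives (e.g. 25 times per second).
    void set(const Value& v) { value_ = transform_ ? transform_(v) : v; }

    const Value& get() const { return value_; }
    const std::string& name() const { return name_; }

private:
    std::string name_;
    Value value_;
    Transform transform_;
};

// Hypothetical transformation: map a 0..1 float to the number of LEDs to light.
Variable::Transform toLedCount(int numLeds) {
    return [numLeds](const Value& v) -> Value {
        float x = std::holds_alternative<float>(v) ? std::get<float>(v) : 0.0f;
        x = std::clamp(x, 0.0f, 1.0f);  // ignore out-of-range meter values
        return static_cast<int>(std::lround(x * numLeds));
    };
}
```

For example, with `toLedCount(16)` attached, setting the variable to `0.5f` stores the integer `8`, which the I2C backend could forward to the VU meter directly.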

I’ve also implemented a «sensor» entity, which comes in 5 types: generic, button, encoder, multi-state button and multi-state encoder. It's a pretty simple beast that just listens to variable value changes and acts accordingly… However, they aren't quite as simple as they could be :-)))

A generic sensor can listen to any number of variables and:

1/ Check a variable against some condition (equal to, less than, greater than, within some range and so on) and fire a command if the condition is met, or run a set of commands on any change of the variable, like this:

```yaml
- encoder_1:
    type: encoder
    id: 5
    where: board   # Identify backend
    enc_id: 0      # Must match encoder defined
    init: '{{master_gain}}'  # Can be encoded as variable name or {{variable name}}; in the second case the value is resolved at call time
    pow: 0.7
    on_change:
      # . Reserved words are '<', '>', '=', '<>', '>=', '<=', '<..>', '>..<'
      # . With their synonyms 'less', 'greater', 'equal', 'not equal', 'greater or equal', 'less or equal', 'not in range', 'in range'
      # . Only scalar numbers are allowed, with type deduction, i.e. floats, ints or chars
      #
      '>':
        value: 0.7
        act:
          - setdualled: [0, 2]
      '<':
        value: 0.7
        act:
          - setdualled: [0, 0]
      act:
        - sendparameter: [master_gain, '{{board_encoder_0}}']
```
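The comparison operators listed in the config comments could be evaluated roughly as follows. This sketch assumes the range operators take a `[low, high]` pair with inclusive bounds, and that `'>..<'` means "in range" and `'<..>'` means "not in range", following the order of the synonyms in the comment; Chief's real parsing and bound handling are not documented.

```cpp
#include <cassert>
#include <map>
#include <stdexcept>
#include <string>

enum class Op { Less, Greater, Equal, NotEqual, GreaterEq, LessEq, NotInRange, InRange };

// Maps both the symbolic and the word form of each operator to one enum value.
Op parseOp(const std::string& s) {
    static const std::map<std::string, Op> table = {
        {"<", Op::Less},          {"less", Op::Less},
        {">", Op::Greater},       {"greater", Op::Greater},
        {"=", Op::Equal},         {"equal", Op::Equal},
        {"<>", Op::NotEqual},     {"not equal", Op::NotEqual},
        {">=", Op::GreaterEq},    {"greater or equal", Op::GreaterEq},
        {"<=", Op::LessEq},       {"less or equal", Op::LessEq},
        {"<..>", Op::NotInRange}, {"not in range", Op::NotInRange},
        {">..<", Op::InRange},    {"in range", Op::InRange},
    };
    auto it = table.find(s);
    if (it == table.end()) throw std::invalid_argument("unknown operator: " + s);
    return it->second;
}

// `high` is only used by the two range operators (bounds assumed inclusive).
bool conditionMet(Op op, double x, double value, double high = 0.0) {
    switch (op) {
        case Op::Less:       return x < value;
        case Op::Greater:    return x > value;
        case Op::Equal:      return x == value;
        case Op::NotEqual:   return x != value;
        case Op::GreaterEq:  return x >= value;
        case Op::LessEq:     return x <= value;
        case Op::InRange:    return x >= value && x <= high;
        case Op::NotInRange: return x < value || x > high;
    }
    return false;
}
```

In the snippet above, an encoder value of 0.8 satisfies `'>'` with `value: 0.7`, so `setdualled: [0, 2]` would fire, while the plain `act` list runs on every change regardless of the conditions.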

Buttons and encoders are just extensions of the generic sensor, bound to a specific button or encoder entity in some backend, with code specific to that type of control. For example, a button can have up to 4 event handlers (on press, on release, on hold and on hold release). Encoders are a bit more complicated: you can set min and max values, normal and fast increment speeds, and a logarithmic or reverse-logarithmic response curve for rotation.
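Here is a sketch of the encoder behavior described above: a position clamped to a range, a normal and a fast step, and a response curve. Modeling the curve as a power law (exponent below 1 for a log-like response, above 1 for a reverse-log-like one) is an assumption suggested by the `pow: 0.7` key in the config, not a confirmed description of Chief; `EncoderModel` and its fields are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical encoder model: tracks a normalized 0..1 position and maps it
// through a power curve into the user's [minValue, maxValue] range.
struct EncoderModel {
    double minValue = 0.0;
    double maxValue = 1.0;
    double normalStep = 0.01;  // per detent at normal speed
    double fastStep = 0.05;    // per detent when turned quickly
    double power = 1.0;        // 1 = linear, <1 log-like, >1 reverse-log-like
    double position = 0.0;     // normalized 0..1

    // ticks > 0 turns clockwise, < 0 counter-clockwise.
    void rotate(int ticks, bool fast) {
        double step = fast ? fastStep : normalStep;
        position = std::clamp(position + ticks * step, 0.0, 1.0);
    }

    // Value that would be sent on, e.g. as a DAW parameter.
    double value() const {
        return minValue + (maxValue - minValue) * std::pow(position, power);
    }
};
```

Keeping the position linear and applying the curve only on output means the fast/slow steps feel the same everywhere on the knob, while the parameter itself follows the chosen response.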

Multi-state buttons and encoders are the same as the previous ones, but they can change their state. This means you can assign a single physical encoder or button to control any number of DAW parameters. For example, an encoder can control the EQ gain by default, but if you press the encoder button it starts controlling the EQ frequency, the next press makes it control the EQ width («Q») and so on.
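The state switching can be sketched as a small class that cycles through a list of states on each button press and routes rotation to the active state's parameter. The `MultiStateControl` name, keeping a stored value per state, and the parameter names are hypothetical, loosely following the EQ example.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// One state = which DAW parameter the physical control currently drives.
struct ControlState {
    std::string parameter;
    double value = 0.0;  // last value, so switching back resumes where we left off
};

class MultiStateControl {
public:
    explicit MultiStateControl(std::vector<ControlState> states)
        : states_(std::move(states)) {
        if (states_.empty()) throw std::invalid_argument("need at least one state");
    }

    // Encoder button pressed: advance to the next state, wrapping around.
    void press() { current_ = (current_ + 1) % states_.size(); }

    // Encoder turned: update the active state's value and return its parameter name.
    const std::string& turn(double delta) {
        ControlState& s = states_[current_];
        s.value += delta;
        return s.parameter;
    }

    const ControlState& active() const { return states_[current_]; }

private:
    std::vector<ControlState> states_;
    std::size_t current_ = 0;
};
```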

I’ve also added a Presets entity, which is just a set of backend commands executed on a preset change command.
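Applying a preset then amounts to running its action list, in order, through the backends' commands. A minimal self-contained sketch, with a hypothetical `Action`/`Preset` representation (arguments kept as strings) and a toy dispatcher that only records what it was asked to do:

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// One "- command: [args...]" entry from the config.
struct Action {
    std::string command;
    std::vector<std::string> args;
};

using Preset = std::vector<Action>;

// Toy dispatcher: a real one would forward to the owning backend's command.
struct RecordingDispatcher {
    std::vector<std::string> log;

    void run(const Action& a) {
        std::string line = a.command;
        for (const auto& arg : a.args) line += " " + arg;
        log.push_back(line);
    }
};

// Runs every action of the named preset in the order it was written.
bool applyPreset(const std::map<std::string, Preset>& presets,
                 const std::string& name, RecordingDispatcher& d) {
    auto it = presets.find(name);
    if (it == presets.end()) return false;
    for (const Action& a : it->second) d.run(a);
    return true;
}
```

Order matters here: an action like `initAllSensors` at the end of a preset only makes sense once all the parameters before it have been sent.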

Pretty damn complicated? Well, yep…

All of that can be configured via a config file, and I’m using YAML for that purpose. Here are a few snippets from it:

```yaml
- encoder_6:
    type: state-encoder
    id: 10
    enc_id: 1
    where: board  # Identify backend
    states:
      - first:
          init: '{{overdrive/HPF Freq}}'
          on_change:
            act:
              - sendparameter: [overdrive/HPF Freq, '{{board_encoder_1}}']
      - second:
          init: '{{overdrive/HPF Reso}}'
          on_change:
            act:
              - sendparameter: [overdrive/HPF Reso, '{{board_encoder_1}}']
```

Or a preset:

```yaml
presets:
  - 'Grunge':
      actions:
        - setled: [5,1]
        - setled: [9,0]
        - sendparameter: [overdrive/Drive, 0.2]
        - sendparameter: [overdrive/Model, 0.4]
        - sendparameter: [overdrive/HPF Freq, 0.2]
        - sendparameter: [overdrive/HPF Reso, 0.1]
        - sendparameter: [delay/Fb Mix, 0.15]
        - sendparameter: [delay/L Delay, 0.8]
        - sendparameter: [delay/L Delay, 0.35]
        - sendparameter: [delay/Fb Tone, 0.1]
        - sendparameter: [delay/Feedback, 0.07]
        - sendparameter: [sdelay/sample_delay_ch1, 0.01]
        - sendparameter: [sdelay/sample_delay_ch2, 0.03]
        - sendparameter: [master_gain, 0.85]
        - initAllSensors: []
  - 'Fire':
      actions:
        - setled: [5,0]
        - setled: [9,1]
        - sendparameter: [overdrive/Drive, 0.95]
        - sendparameter: [overdrive/Model, 0.7]
        - sendparameter: [overdrive/HPF Freq, 0.11]
        - sendparameter: [overdrive/HPF Reso, 0.1]
        - sendparameter: [delay/Fb Mix, 0.15]
        - sendparameter: [delay/L Delay, 0.8]
        - sendparameter: [delay/R Delay, 0.4]
        - sendparameter: [delay/Fb Tone, 0.1]
        - sendparameter: [delay/Feedback, 0.1]
        - sendparameter: [sdelay/sample_delay_ch1, 0.00455]
        - sendparameter: [sdelay/sample_delay_ch2, 0.00183]
        - sendparameter: [master_gain, 0.84]
        - initAllSensors: []
```
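The `'{{variable name}}'` placeholders used in these snippets are, per the comment in the encoder config, resolved to the variable's value at call time. A minimal sketch of such substitution against a variable table follows; the function name `resolveTemplate`, the string-valued table, and leaving unknown names untouched are assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Replaces every "{{name}}" in `text` with the current value of `name`.
// Unknown names are left as-is (assumption; Chief's behavior is not documented).
std::string resolveTemplate(const std::string& text,
                            const std::map<std::string, std::string>& vars) {
    std::string out;
    std::size_t pos = 0;
    while (true) {
        std::size_t open = text.find("{{", pos);
        if (open == std::string::npos) break;
        std::size_t close = text.find("}}", open + 2);
        if (close == std::string::npos) break;
        out.append(text, pos, open - pos);  // literal text before "{{"
        std::string name = text.substr(open + 2, close - open - 2);
        auto it = vars.find(name);
        out += (it != vars.end()) ? it->second : text.substr(open, close - open + 2);
        pos = close + 2;
    }
    out.append(text, pos, std::string::npos);  // remaining literal text
    return out;
}
```

A bare name such as `master_gain` contains no braces and passes through unchanged, which matches the "variable name or {{variable name}}" distinction in the config comment.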

Writing and debugging all that (it could only be done on the SBC) took a huge amount of work, but now it works like a charm and uses no more than 1% of the CPU. Future plans include adding more backends, like a pedal board over USB.

Here is a short video with an example:

In any case, the next chapter will be the final one, with some demos, as the work for this competition is almost done…

Both my projects together…

PS: By the way, I successfully ran the NeuralPi guitar plugin, which uses neural network LSTM models to emulate the sound of some guitar rigs. Taken from here: GitHub - GuitarML/NeuralPi: Raspberry Pi guitar pedal using neural networks to emulate real amps and effects. Unfortunately, for now it runs on the CPU, as I don't yet have any clue how to use the Amlogic NN unit for it…

Final Chapter - «This is not the end.»

The development phase is over, and the time has come to show what has been developed. Before doing that, I decided to sum up what has been done over these months:

1/ Dual kernel (ordinary/realtime) from the EVL project (aka Xenomai 4) successfully ported to Amlogic SoCs

2/ Developed a realtime audio driver for Amlogic SoCs

3/ Forward-ported the userland library RASPA to work with the new realtime driver and new kernel

4/ Forward-ported the realtime multithreaded library TWINE to the new kernel

5/ Forward-ported the Sushi DAW to the new kernel

6/ Wrote several audio plugins for the DAW

7/ Developed all the electronics for this project: VU meter, control board, PSU, ADC, DAC, amplifiers and so on

8/ Designed and 3D-printed a body for this F.A.W.N project

9/ Soldered and built it all together

10/ Wrote «Chief», which controls every aspect of the project (buttons, LEDs, encoders)

11/ Wrote firmware for both the STM32 (VU meter) and the ATmega128A (main board)

12/ Ported the rtpmidid (MIDI over Ethernet) daemon

13/ Fixed almost all bugs

14/ Many other small things, plus keeping you updated on the Khadas forum

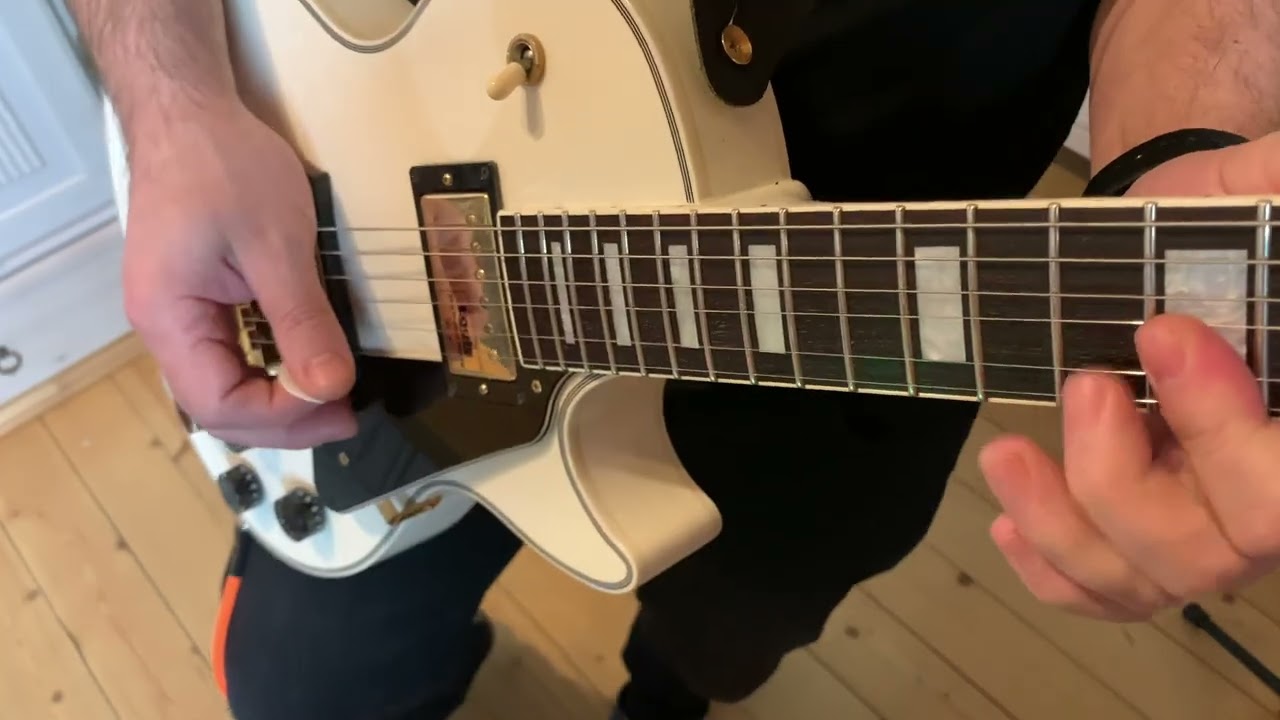

Disclaimer: I’m a better engineer than guitar player, so please don’t judge too harshly.

I have a kind of hobby: making covers of some great old songs. So I got a CD copy of the original 24-track multitrack recording of Queen's famous Bohemian Rhapsody. I’m remixing it and have replaced all of Brian May's guitar parts with ones performed by myself. Oh… and all those parts were recorded with F.A.W.N powered by the Khadas SBC :-). The set of plugins in F.A.W.N used to recreate the sound was: guitar amp, cab emulator using convolution with an impulse response, compressor, slight phaser, EQ, delay and room reverb. Needless to say, handling all that in realtime is absolutely no problem for the Khadas SBC. For copyright reasons I’m not able to put the full composition on YouTube (otherwise it would be banned), so I’ve put the first Brian May solo cover here. Please use good speakers or headphones for listening.
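For readers unfamiliar with convolution-based cab emulation: the guitar signal is convolved with a recorded impulse response (IR) of a speaker cabinet, y[n] = Σ ir[k]·x[n−k]. Below is a direct-form, block-streaming sketch of that idea; it says nothing about the specific plugin used in F.A.W.N, and real-time plugins typically switch to FFT-based partitioned convolution for long IRs.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Direct-form FIR convolution that keeps state between blocks:
// y[n] = sum_k ir[k] * x[n - k]
class ConvolutionCab {
public:
    explicit ConvolutionCab(std::vector<float> ir)
        : ir_(std::move(ir)), history_(ir_.size(), 0.0f) {
        assert(!ir_.empty() && "impulse response must not be empty");
    }

    void process(const float* in, float* out, std::size_t frames) {
        for (std::size_t n = 0; n < frames; ++n) {
            history_[pos_] = in[n];  // newest sample into the circular history
            float acc = 0.0f;
            std::size_t idx = pos_;
            for (std::size_t k = 0; k < ir_.size(); ++k) {
                acc += ir_[k] * history_[idx];
                idx = (idx == 0) ? history_.size() - 1 : idx - 1;  // step back in time
            }
            out[n] = acc;
            pos_ = (pos_ + 1) % history_.size();
        }
    }

private:
    std::vector<float> ir_;
    std::vector<float> history_;
    std::size_t pos_ = 0;
};
```

The cost is one multiply-add per IR tap per sample, which is why long cabinet IRs are usually handled in the frequency domain instead.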

PS: This is not the end…

You're like Superman! Amazing job! And I already want to get your guitar processor!

Last but not least: I decided to record yet another demo using this project. This time I took a free backing track from Elevated Jam Tracks on YouTube as the base of the composition and wrote a small melody/solo part on top of it for this demo in just a couple of hours, so don't judge too harshly. The signal chain in F.A.W.N was: amp emulator, cab emulator, slight chorus, compressor, delay and a Haas effect (where one channel is delayed by a few milliseconds) to get a kind of stereo spread.
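The Haas effect is simple enough to sketch: one channel passes through unchanged while the other is delayed by a few milliseconds through a ring buffer. The `HaasDelay` class, delaying the right channel, and the sample rates used are assumptions for the example, not the plugin used in the demo.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Delays the right channel by a fixed number of samples (Haas / precedence effect).
class HaasDelay {
public:
    HaasDelay(double sampleRate, double delayMs)
        : buffer_(static_cast<std::size_t>(sampleRate * delayMs / 1000.0 + 0.5), 0.0f) {}

    std::size_t delaySamples() const { return buffer_.size(); }

    // Processes one block of non-interleaved stereo in place.
    void process(float* left, float* right, std::size_t frames) {
        (void)left;                   // left channel passes through unchanged
        if (buffer_.empty()) return;  // zero delay: nothing to do
        for (std::size_t n = 0; n < frames; ++n) {
            float delayed = buffer_[pos_];  // sample written delaySamples() ago
            buffer_[pos_] = right[n];
            right[n] = delayed;
            pos_ = (pos_ + 1) % buffer_.size();
        }
    }

private:
    std::vector<float> buffer_;
    std::size_t pos_ = 0;
};
```

At 48 kHz, a 10 ms delay is 480 samples; delays in roughly this range are short enough that the ear fuses both channels into one wider sound rather than hearing an echo.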

The End.

Epilogue.

It was a very interesting road I traveled while implementing this project, and I want to say a big thank you to the Khadas team for helping me with my questions: @Frank, @hyphop, thank you for your answers during this project. Special thanks go to @sunny: getting a VIM3 shipped to me was mission impossible, and honestly I doubted it would reach me, but you went absolutely beyond all expectations to sort out all the VIM3 shipping issues!

And of course, the biggest credit goes to my family for their support and patience.

An almost forgotten part:

Here is a list of repos I’ve created for this project.

Branch mainline-evl - contains the forked Fenix build system with my changes to build the realtime kernel in the linux-mainline-evl package, with patches I added for realtime capabilities, including the RT audio driver.

Branch next - userland library to communicate with the RT driver

Branch next - forward port of the Sushi DAW to work with the new kernel and RT audio driver

Branch next - wrapper library for the realtime threads needed by Sushi

There is also a set of other public repos used, but those are just forward-compiled;

most of them are here: Elk Audio OS · GitHub

Audio plugins are here; some of them even run in binary-only form.

The body design is also open-sourced at:

Body design STL files for printing

Some things, like the MCU firmware and Chief, aren’t open-sourced yet, because I still need to “beautify” the code for publishing, which needs some time, but I think I’ll publish it later. Anyway, these aren’t critical parts, as the main components can be used directly and controlled via OSC.
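Since the main components can be driven over OSC, here is a minimal sketch of encoding an OSC message that carries a single float, following the OSC 1.0 encoding rules: a NUL-terminated address padded to a multiple of 4 bytes, a `,f` type-tag string padded the same way, then a big-endian 32-bit float. The example address is hypothetical; check Sushi's OSC documentation for its real address scheme. Sending the bytes over UDP is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Appends an OSC string: bytes + NUL terminator, zero-padded to a multiple of 4.
static void appendOscString(std::vector<std::uint8_t>& buf, const std::string& s) {
    buf.insert(buf.end(), s.begin(), s.end());
    buf.push_back(0);
    while (buf.size() % 4 != 0) buf.push_back(0);
}

// Builds an OSC 1.0 message with one float32 argument.
std::vector<std::uint8_t> oscFloatMessage(const std::string& address, float value) {
    std::vector<std::uint8_t> buf;
    appendOscString(buf, address);
    appendOscString(buf, ",f");
    std::uint32_t bits;
    std::memcpy(&bits, &value, sizeof bits);      // IEEE-754 bit pattern of the float
    for (int shift = 24; shift >= 0; shift -= 8)  // big-endian byte order
        buf.push_back(static_cast<std::uint8_t>(bits >> shift));
    return buf;
}
```

Usage would be something like `oscFloatMessage("/parameter/overdrive/Drive", 0.2f)` (hypothetical address), with the resulting bytes sent as one UDP datagram.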

As a brief summary of what the Khadas SBC is doing here: this board plays the main role in this project; it is the core of everything. It runs a dual normal/realtime kernel and the realtime audio driver. It runs the headless digital audio workstation code (modified Sushi, RASPA, Twine) along with numerous audio plugins and synths inside it. The DAW itself supports the most common audio plugin formats and technologies, like Steinberg VST, Ableton Link 2, Faust and so on. Currently, up to 400 audio plugins and synths are supported.

The Khadas SBC works with the ADC and DAC I used for this project: they are attached directly to the SBC and work over I2S lines with the RT audio driver, with additional features like a shared audio buffer (simultaneous output to the DAC, HDMI and S/PDIF). The most important thing is that the Khadas VIM3 board is truly capable realtime hardware with a good audio codec inside, and with the software I made it is rock-solid stable and able to work under extreme conditions without any glitches. And there is a lot of power inside: for example, the neural guitar amplifier/cabinet emulator eats up only around 14% of a single CPU core. So in general, the Khadas SBC has plenty of power to handle lots of audio effects and synths in realtime with excellent quality, up to 24-bit / 96000 Hz sample rate (a limitation of the ADC I used), so it can very easily process analog signals from the ADC and run several MIDI synths in realtime.
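For a rough sense of scale behind the 24-bit / 96 kHz figure: the I2S bit clock for a stereo stream is sample rate × slot width × 2 channels, and each processing buffer adds a latency of (buffer size ÷ sample rate). The sketch below just does that arithmetic; the 32-bit slot width and the buffer sizes are illustrative assumptions, not measured F.A.W.N settings.

```cpp
#include <cassert>
#include <cstdint>

// I2S bit clock: one slot per channel per frame.
constexpr std::uint64_t i2sBitClockHz(std::uint64_t sampleRate, std::uint64_t slotBits,
                                      std::uint64_t channels = 2) {
    return sampleRate * slotBits * channels;
}

// Latency contributed by one buffer of `frames` samples, in microseconds.
constexpr double bufferLatencyUs(std::uint64_t frames, std::uint64_t sampleRate) {
    return 1e6 * static_cast<double>(frames) / static_cast<double>(sampleRate);
}

// 24-bit samples carried in 32-bit slots (a common I2S layout, assumed here).
static_assert(i2sBitClockHz(96000, 32) == 6144000, "6.144 MHz BCLK");
// Tightly packed 24-bit slots would need 4.608 MHz instead.
static_assert(i2sBitClockHz(96000, 24) == 4608000, "4.608 MHz BCLK");
```

For example, a 64-frame buffer at 96 kHz corresponds to about 667 µs, which is the time budget the whole plugin chain must fit into on every cycle.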

Wow, I will check it soon, because this area is interesting for me too!