Warning: Long read!!!

Chapter I. «The Kernel and latencies»

Preamble: This is an experimental project with an unknown outcome - it may succeed or it may fail. Who knows :-). In any case it will take some time to research and implement, so I’ve started a bit earlier than the planned February timeline.

To get a really working solution with «almost» realtime audio DSP on a Khadas board, the stock kernel isn’t good enough. As everybody knows, signal processing requires timing guarantees. It means that hardware and software events, like IRQs from audio codecs, should reach the user process as quickly as possible and in an absolutely reliable manner. A standard kernel isn’t good at that (this is not a problem of Linux alone - it is a general issue with most modern OSes).

So what to do here? We need a kernel with timing guarantees and the lowest possible interrupt-to-process latency. That means we need two things implemented in the kernel -

1/ We need realtime scheduling for all pieces of work (user threads, kernel threads and so on) - the PREEMPT_RT kernel option could be helpful here, but it is not enough on its own.

2/ We also need another way to handle IRQs, with the ability to deliver them as quickly as possible - nothing like that can be found in the standard kernel.

Luckily, a lot of work has been done in the past in the area of realtime kernels. The Xenomai project (xenomai.org) creates an almost true realtime kernel, however it is a bit outdated and doesn’t support newer kernels. Its successor, the EVL project (also known as Xenomai 4), http://evlproject.org, comes to the rescue.

So, at first I was going to create a Xenomai RT kernel based on the Amlogic vendor kernel 4.9, as it has everything for the Amlogic SoC, including a working TS050 panel. However, after spending 3 weeks trying to adapt the Xenomai kernel to the Amlogic kernel version, I gave up: Amlogic made so many changes to the Linux kernel that it would take a really huge amount of work.

So I decided to go with the mainline kernel. I understood that I’ll lose some functionality like the TS050 (that hurts badly), but in any case mainline is the future :-). Merging the Xenomai codebase into a mainline kernel is much easier (as they already have a mainline kernel source with their changes incorporated).

So I took the Fenix build script and slightly modified it, adding yet another configuration. I also created another package in the Fenix directory pointing to the Xenomai RT kernel and incorporating the latest Khadas patches. After a week or so I finally got a dual realtime kernel based on Xenomai and the Amlogic/Khadas patches.

A dual kernel configuration means that two kernels run together on the same SoC: a realtime one (the so-called out-of-band kernel) and the non-realtime legacy one (the in-band kernel), and they share a common so-called IRQ pipeline (the Dovetail project). To familiarize yourself, please have a look at the http://evlproject.org wiki pages. In my setup I’ve assigned 2 CPUs to the in-band kernel and 2 CPUs to the out-of-band kernel. I hope that when Khadas gives me a VIM3 sample, I’ll use the small cores for the in-band kernel and the big cores for the realtime kernel.
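The CPU split is visible in the boot command line recorded in the test logs further down (`isolcpus=2,3 evl.oob_cpus=2`). Here is a minimal Python sketch for pulling those two parameters out of a command line string. The helper names `parse_cpu_list` and `cpu_params` are made up for illustration, and I am assuming that `evl.oob_cpus` uses the same comma/range list syntax as `isolcpus`.

```python
def parse_cpu_list(spec):
    """Expand a kernel CPU list such as "0,2-3" into [0, 2, 3].

    Simplification: non-numeric entries (for example isolcpus flags
    like "domain") are skipped rather than interpreted.
    """
    cpus = set()
    for part in spec.split(","):
        first, sep, last = part.partition("-")
        if not first.isdigit():
            continue
        if sep:
            if not last.isdigit():
                continue
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(first))
    return sorted(cpus)


def cpu_params(cmdline, keys=("isolcpus", "evl.oob_cpus")):
    """Return {parameter: [cpu, ...]} for the requested key=value tokens."""
    found = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if sep and key in keys:
            found[key] = parse_cpu_list(value)
    return found


if __name__ == "__main__":
    # Excerpt of the command line from the latmus logs in this post.
    sample = "splash quiet vt.handoff=7 isolcpus=2,3 evl.oob_cpus=2"
    for name, cpus in cpu_params(sample).items():
        print(name, cpus)
```

On a running board the same function could be fed the contents of `/proc/cmdline` instead of the sample string.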

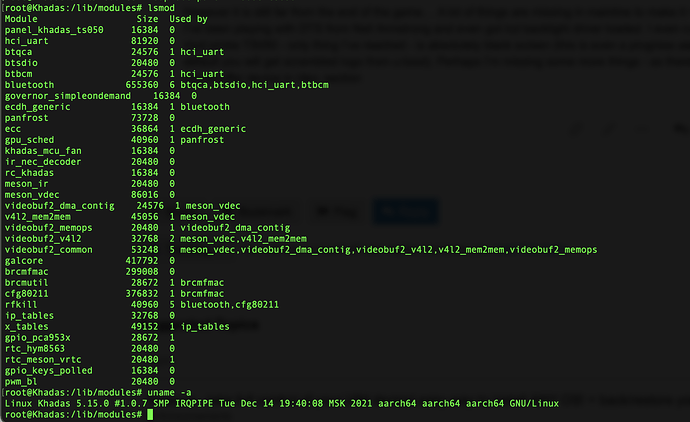

What is interesting is that switching to the RT kernel exposed misbehavior in some legacy drivers (as in the RT kernel no IRQs can be masked out). Booting with the default drivers showed an unpleasant picture of CPU usage on the CPUs assigned to the in-band kernel. Most of the CPU consumption was generated by the Broadcom firmware driver (sic), so as a result I’ve blacklisted that module (no plans for WiFi at the moment).

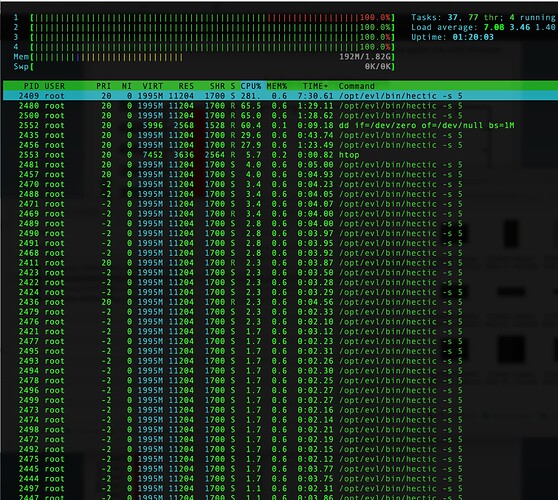

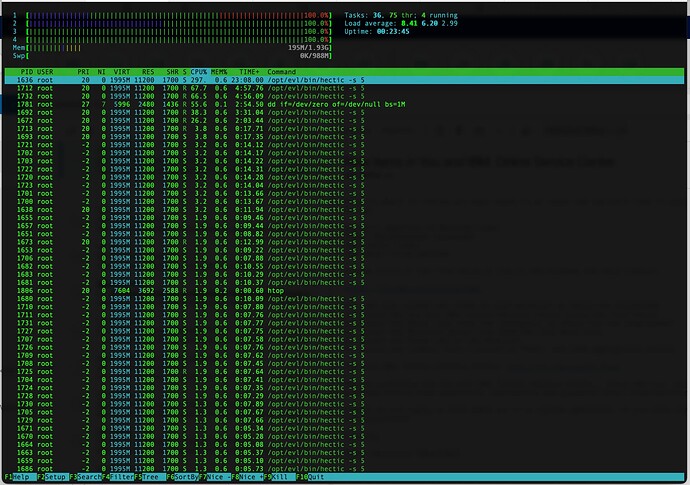

Htop results with all drivers loaded

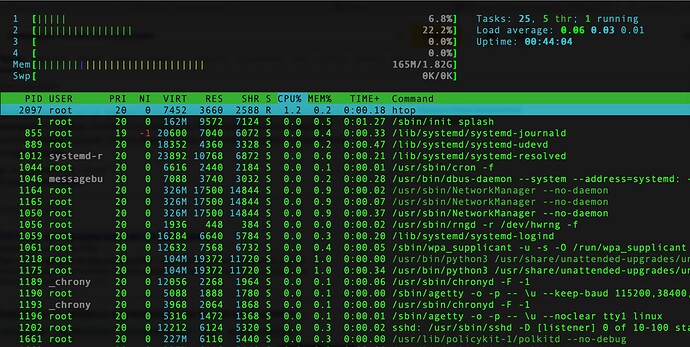

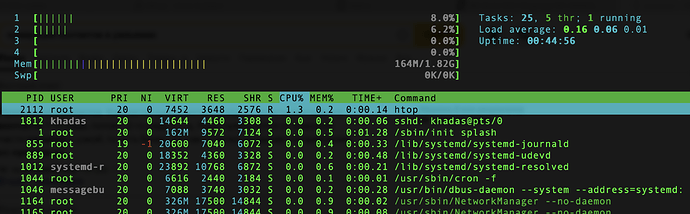

Broadcom drivers unloaded

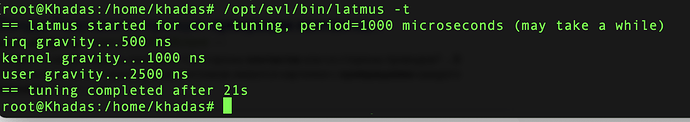

EVL (aka Xenomai 4) has built-in tools to measure how well the system handles interrupt-to-process latencies. So let’s measure them using the built-in latmus utility, but first run the calibration tasks.

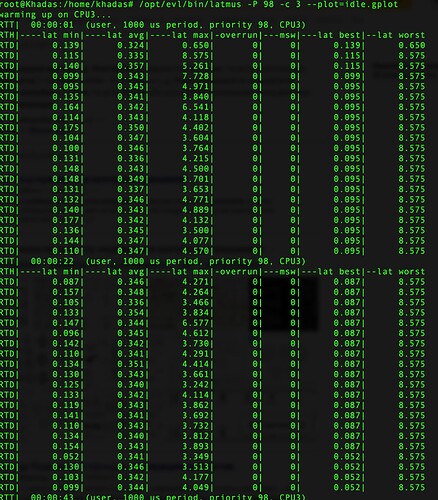

Idle mode: No apps running - CPU usage idle

Running latency test

# test started on: Wed Dec 22 10:55:45 2021

# Linux version 5.15.0 (root@OldNavi) (aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 9.2.1 20191025, GNU ld (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 2.33.1.20191209) #1.0.7 SMP IRQPIPE Thu Dec 16 10:55:48 MSK 2021

# root=UUID=29631686-a319-4357-b990-9234333a47a2 rootfstype=ext4 rootflags=data=writeback rw ubootpart=715e90c6-01 console=ttyAML0,115200n8 no_console_suspend consoleblank=0 loglevel=0 logo=osd0,loaded,0x3d800000,panel vout=panel,enable hdmimode=1080p60hz fbcon=rotate:90 fsck.repair=yes net.ifnames=0 wol_enable=0 jtag=disable mac=c8:63:14:71:2b:98 fan=auto khadas_board=VIM3L hwver=VIM3.V12 coherent_pool=2M pci=pcie_bus_perf reboot_mode=cold_boot imagetype=SD-USB uboottype=vendor splash quiet plymouth.ignore-serial-consoles vt.handoff=7 isolcpus=2,3 evl.oob_cpus=2

# libevl version: evl.0 -- #41dc88a (2021-08-21 18:13:57 +0200)

# sampling period: 1000 microseconds

# clock gravity: 500i 1000k 2500u

# clocksource: arch_sys_counter

# vDSO access: architected

# context: user

# thread priority: 98

# thread affinity: CPU3

# C-state restricted

# duration (hhmmss): 00:00:49

# peak (hhmmss): 00:00:02

# min latency: 0.052

# avg latency: 0.344

# max latency: 8.575

# sample count: 49468

0 49364

1 52

2 0

3 26

4 21

5 1

6 2

7 1

8 1
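To read the histogram above a bit more precisely, here is a small Python sketch that sums the buckets and finds the bucket in which a given percentile falls. It assumes that each histogram line is `<bucket> <count>` and that bucket N holds the samples with a latency between N and N+1 microseconds; the function names are my own, not part of latmus.

```python
IDLE_HISTOGRAM = """
0 49364
1 52
2 0
3 26
4 21
5 1
6 2
7 1
8 1
"""


def parse_histogram(text):
    """Turn '<bucket> <count>' lines into {bucket: count}, skipping '#' lines."""
    hist = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        bucket, count = line.split()
        hist[int(bucket)] = int(count)
    return hist


def percentile_bucket(hist, pct):
    """Return the first bucket at which the cumulative count reaches pct percent."""
    total = sum(hist.values())
    if total == 0:
        raise ValueError("empty histogram")
    threshold = total * pct / 100
    running = 0
    for bucket in sorted(hist):
        running += hist[bucket]
        if running >= threshold:
            return bucket
    return max(hist)


if __name__ == "__main__":
    hist = parse_histogram(IDLE_HISTOGRAM)
    print("samples:", sum(hist.values()))
    for pct in (99, 99.9):
        print(f"p{pct} falls in bucket {percentile_bucket(hist, pct)}")
```

The bucket counts add up to the 49468 samples reported in the log header, so nothing fell outside the histogram range in this run.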

No apps are running here, and the results are astonishing - in the best case the latency from interrupt to user process is well under 100 nanoseconds (the minimum in this run is 0.052 microseconds).

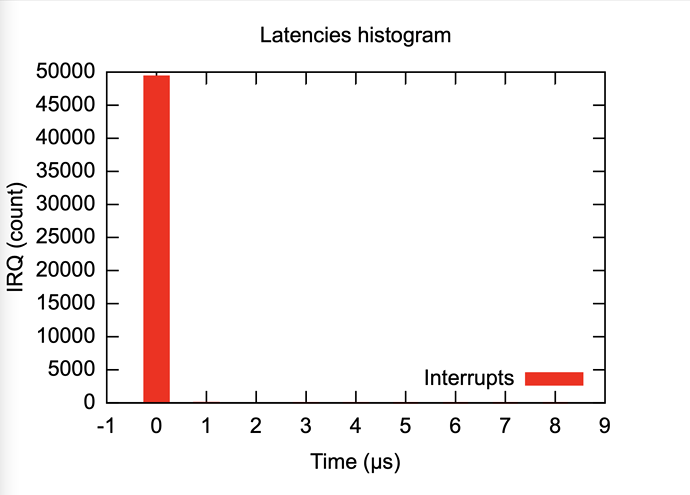

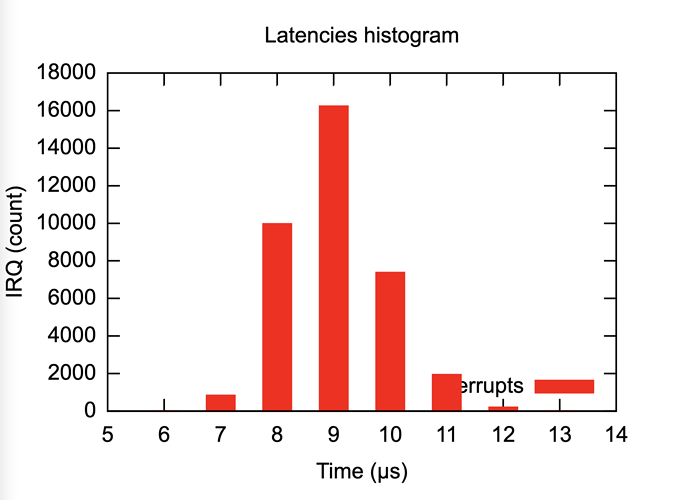

Histogram data

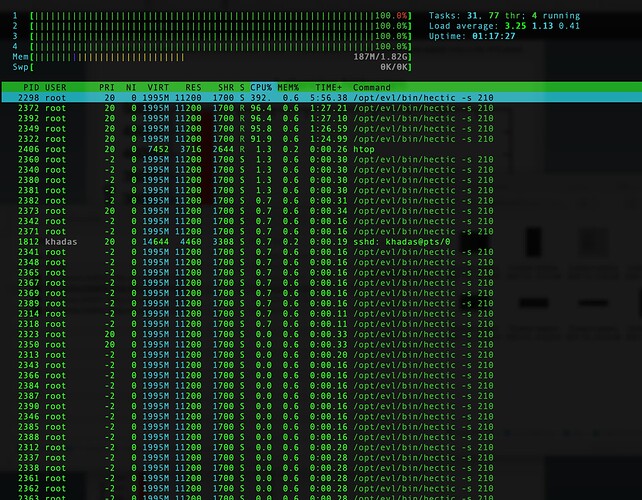

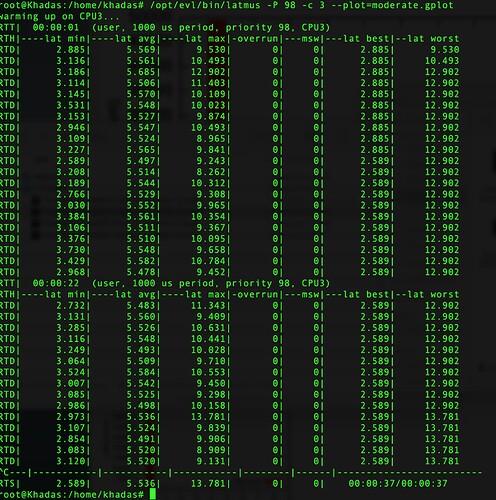

High load - run stress test with hectic forcing 200 microsecond context switching…

Turning on some stress load using the hectic utility with 200 microseconds between context switches - 100% CPU utilization

# test started on: Wed Dec 22 11:26:36 2021

# Linux version 5.15.0 (root@OldNavi) (aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 9.2.1 20191025, GNU ld (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 2.33.1.20191209) #1.0.7 SMP IRQPIPE Thu Dec 16 10:55:48 MSK 2021

# root=UUID=29631686-a319-4357-b990-9234333a47a2 rootfstype=ext4 rootflags=data=writeback rw ubootpart=715e90c6-01 console=ttyAML0,115200n8 no_console_suspend consoleblank=0 loglevel=0 logo=osd0,loaded,0x3d800000,panel vout=panel,enable hdmimode=1080p60hz fbcon=rotate:90 fsck.repair=yes net.ifnames=0 wol_enable=0 jtag=disable mac=c8:63:14:71:2b:98 fan=auto khadas_board=VIM3L hwver=VIM3.V12 coherent_pool=2M pci=pcie_bus_perf reboot_mode=cold_boot imagetype=SD-USB uboottype=vendor splash quiet plymouth.ignore-serial-consoles vt.handoff=7 isolcpus=2,3 evl.oob_cpus=2

# libevl version: evl.0 -- #41dc88a (2021-08-21 18:13:57 +0200)

# sampling period: 1000 microseconds

# clock gravity: 500i 1000k 2500u

# clocksource: arch_sys_counter

# vDSO access: architected

# context: user

# thread priority: 98

# thread affinity: CPU3

# C-state restricted

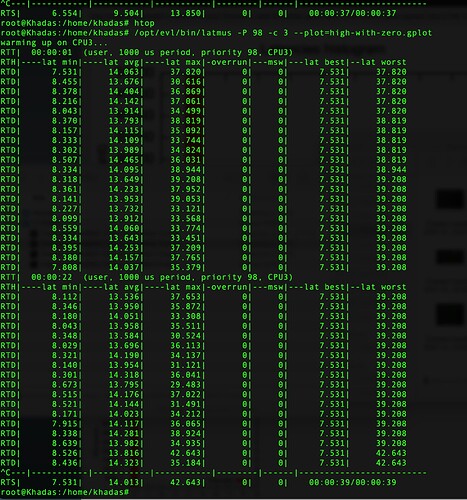

# duration (hhmmss): 00:00:37

# peak (hhmmss): 00:00:36

# min latency: 6.554

# avg latency: 9.504

# max latency: 13.850

# sample count: 36570

6 8

7 836

8 9973

9 16235

10 7374

11 1936

12 196

13 12

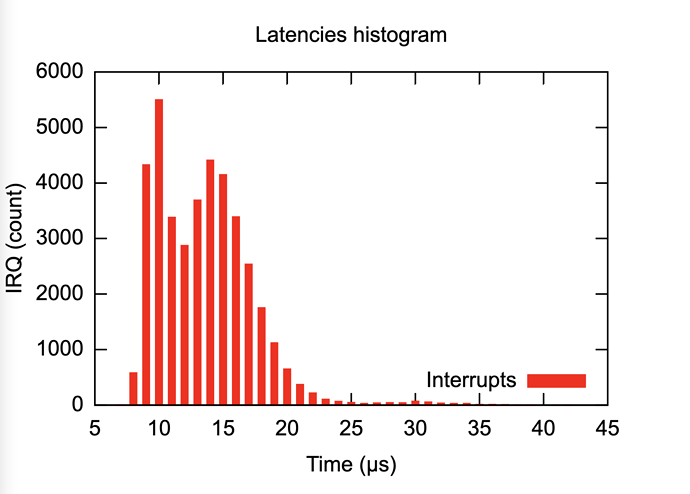

Histogram of IRQs timing

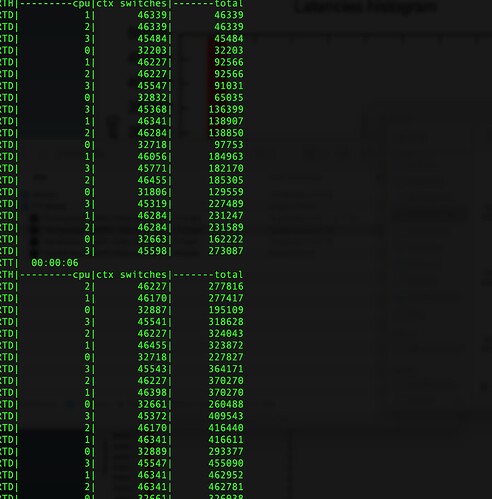

High load (all CPUs at 100%) - run stress test with hectic forcing 10 microsecond context switching…

Same as the previous test, but now context switching is at maximum, with 10 microseconds between context switches, plus CPU cache wiping with the dd utility

Context switching per CPU - 1 second sampling period

Test details

# test started on: Wed Dec 22 11:28:14 2021

# Linux version 5.15.0 (root@OldNavi) (aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 9.2.1 20191025, GNU ld (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 2.33.1.20191209) #1.0.7 SMP IRQPIPE Thu Dec 16 10:55:48 MSK 2021

# root=UUID=29631686-a319-4357-b990-9234333a47a2 rootfstype=ext4 rootflags=data=writeback rw ubootpart=715e90c6-01 console=ttyAML0,115200n8 no_console_suspend consoleblank=0 loglevel=0 logo=osd0,loaded,0x3d800000,panel vout=panel,enable hdmimode=1080p60hz fbcon=rotate:90 fsck.repair=yes net.ifnames=0 wol_enable=0 jtag=disable mac=c8:63:14:71:2b:98 fan=auto khadas_board=VIM3L hwver=VIM3.V12 coherent_pool=2M pci=pcie_bus_perf reboot_mode=cold_boot imagetype=SD-USB uboottype=vendor splash quiet plymouth.ignore-serial-consoles vt.handoff=7 isolcpus=2,3 evl.oob_cpus=2

# libevl version: evl.0 -- #41dc88a (2021-08-21 18:13:57 +0200)

# sampling period: 1000 microseconds

# clock gravity: 500i 1000k 2500u

# clocksource: arch_sys_counter

# vDSO access: architected

# context: user

# thread priority: 98

# thread affinity: CPU3

# C-state restricted

# duration (hhmmss): 00:00:39

# peak (hhmmss): 00:00:38

# min latency: 7.531

# avg latency: 14.013

# max latency: 42.643

# sample count: 39489

7 3

8 578

9 4323

10 5495

11 3379

12 2870

13 3689

14 4409

15 4146

16 3386

17 2534

18 1748

19 1116

20 648

21 367

22 214

23 102

24 63

25 44

26 27

27 36

28 42

29 37

30 67

31 52

32 32

33 27

34 25

35 8

36 8

37 7

38 3

39 2

40 0

41 1

42 1

Histogram

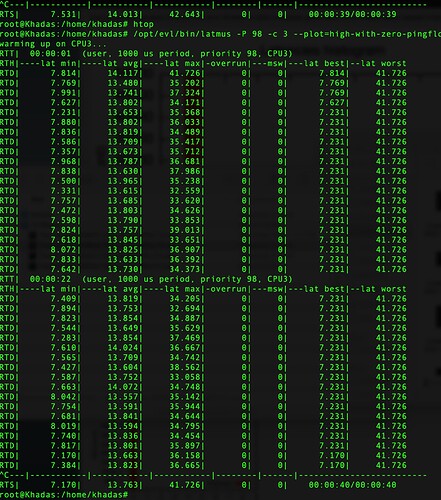

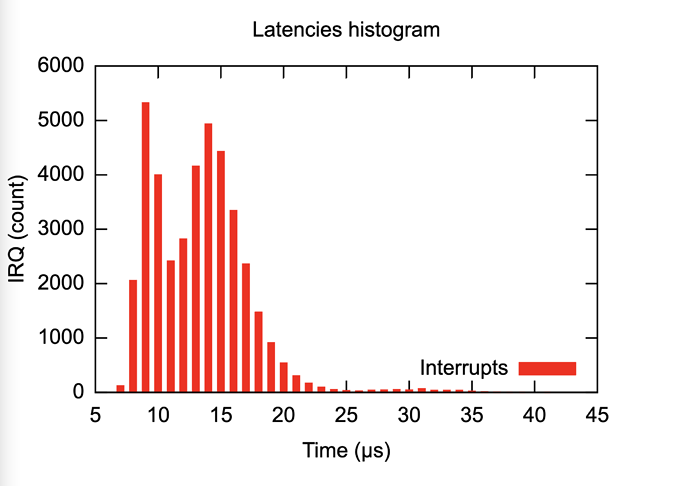

High load extreme. Previous test plus ping flood (ping at maximum possible rate) via Ethernet

Everything as in the previous test, plus a ping flood (pinging the Khadas board at the maximum possible rate)

Test details

# test started on: Wed Dec 22 11:30:03 2021

# Linux version 5.15.0 (root@OldNavi) (aarch64-none-linux-gnu-gcc (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 9.2.1 20191025, GNU ld (GNU Toolchain for the A-profile Architecture 9.2-2019.12 (arm-9.10)) 2.33.1.20191209) #1.0.7 SMP IRQPIPE Thu Dec 16 10:55:48 MSK 2021

# root=UUID=29631686-a319-4357-b990-9234333a47a2 rootfstype=ext4 rootflags=data=writeback rw ubootpart=715e90c6-01 console=ttyAML0,115200n8 no_console_suspend consoleblank=0 loglevel=0 logo=osd0,loaded,0x3d800000,panel vout=panel,enable hdmimode=1080p60hz fbcon=rotate:90 fsck.repair=yes net.ifnames=0 wol_enable=0 jtag=disable mac=c8:63:14:71:2b:98 fan=auto khadas_board=VIM3L hwver=VIM3.V12 coherent_pool=2M pci=pcie_bus_perf reboot_mode=cold_boot imagetype=SD-USB uboottype=vendor splash quiet plymouth.ignore-serial-consoles vt.handoff=7 isolcpus=2,3 evl.oob_cpus=2

# libevl version: evl.0 -- #41dc88a (2021-08-21 18:13:57 +0200)

# sampling period: 1000 microseconds

# clock gravity: 500i 1000k 2500u

# clocksource: arch_sys_counter

# vDSO access: architected

# context: user

# thread priority: 98

# thread affinity: CPU3

# C-state restricted

# duration (hhmmss): 00:00:40

# peak (hhmmss): 00:00:01

# min latency: 7.170

# avg latency: 13.763

# max latency: 41.726

# sample count: 39882

7 119

8 2053

9 5322

10 3995

11 2414

12 2815

13 4155

14 4933

15 4426

16 3342

17 2358

18 1473

19 911

20 536

21 303

22 166

23 90

24 48

25 30

26 21

27 37

28 41

29 50

30 41

31 64

32 36

33 36

34 34

35 18

36 8

37 4

38 1

39 1

40 0

41 1
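To compare the four runs side by side, here is a short Python sketch that collects the min/avg/max figures from the latmus logs above and picks the worst case. The `Run` record and the run labels are my own shorthand; the numbers are copied from the logs and are in microseconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    name: str
    min_us: float
    avg_us: float
    max_us: float
    samples: int


# Values taken from the four latmus logs in this post.
RUNS = [
    Run("idle", 0.052, 0.344, 8.575, 49468),
    Run("hectic, 200 us switching", 6.554, 9.504, 13.850, 36570),
    Run("hectic, 10 us switching + dd", 7.531, 14.013, 42.643, 39489),
    Run("previous + ping flood", 7.170, 13.763, 41.726, 39882),
]


def worst_case(runs):
    """Return the run with the highest maximum latency."""
    return max(runs, key=lambda run: run.max_us)


def format_table(runs):
    """Render the runs as fixed-width text rows."""
    rows = [f"{'run':<30}{'min':>8}{'avg':>8}{'max':>8}"]
    for run in runs:
        rows.append(
            f"{run.name:<30}{run.min_us:>8.3f}{run.avg_us:>8.3f}{run.max_us:>8.3f}"
        )
    return "\n".join(rows)


if __name__ == "__main__":
    print(format_table(RUNS))
    worst = worst_case(RUNS)
    print(f"worst case: {worst.max_us} us ({worst.name})")
```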

Are these numbers good? They are excellent!!! There were no overruns at all (the realtime IRQ requirements were met) and we never had a latency greater than 50 microseconds!!!
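To put the worst case in proportion, here is a rough Python calculation of how much of one period it eats. The 1000 microsecond period is the latmus sampling period from the logs. The audio figures (64 frames per buffer at 48 kHz) are purely my assumption for illustration and are not measured anywhere in this post.

```python
def buffer_period_us(frames, sample_rate_hz):
    """Time covered by one audio buffer, in microseconds."""
    return frames * 1_000_000 / sample_rate_hz


def latency_share(max_latency_us, period_us):
    """Fraction of a period consumed by the worst-case wake-up latency."""
    return max_latency_us / period_us


if __name__ == "__main__":
    worst_us = 42.643  # highest max latency across the runs above

    # Against the 1000 us sampling period used by latmus in these tests.
    print(f"share of latmus period: {latency_share(worst_us, 1000.0):.1%}")

    # Against a hypothetical audio buffer: 64 frames at 48 kHz (assumed).
    audio_period = buffer_period_us(64, 48_000)
    print(f"assumed audio period: {audio_period:.1f} us")
    print(f"share of audio period: {latency_share(worst_us, audio_period):.1%}")
```

Whatever is left of the period after the wake-up latency is the time available for the actual DSP work, so a smaller share means more headroom.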

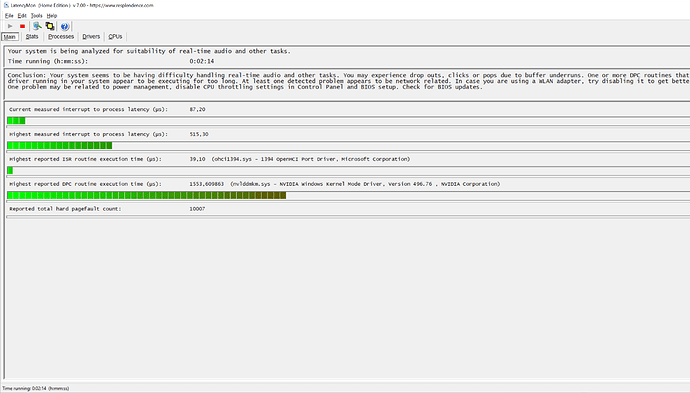

Just for comparison, here is a latency measurement on a very well tuned Windows 10 machine with a 12-core AMD Ryzen CPU, 32 GB RAM and extreme tweaking of everything (MSI-X priority, driver-to-CPU affinity setup, raising driver thread priorities to RT and so on).

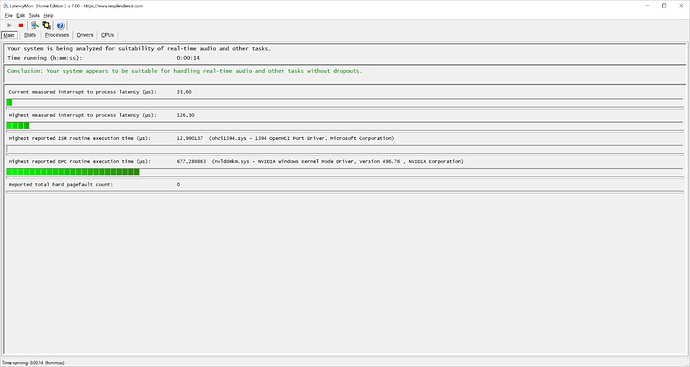

Windows IDLE

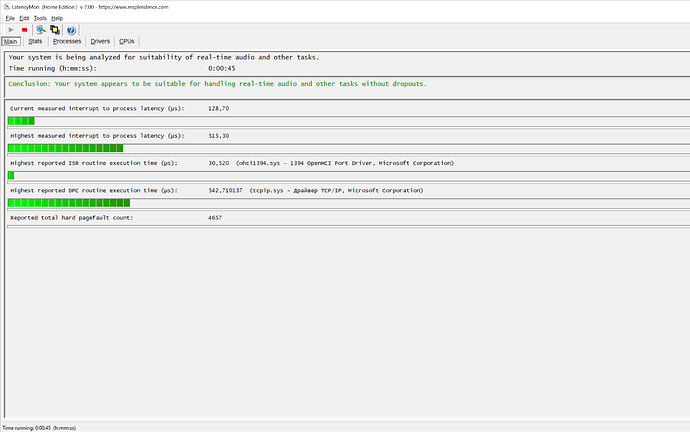

Windows 70% LOAD

Windows 100% LOAD

The Khadas VIM3L board with its Amlogic SoC and mainline Linux seems to be the absolute winner here.

As the next task I’ll have to rewrite the Amlogic SoC sound drivers in a realtime manner, throwing away the whole ALSA layer. Stay tuned!