Dear all,

I am trying to run the pre-trained YOLOv4 model (.weights and .cfg) on the VIM3. The conversion from (.weights, .cfg) to .nb (and the associated .h files) succeeds. I am able to compile a libnn_yolo_v4.so. I am able to run the demo with my newly compiled model, but the model does not detect anything.

The steps I took were the following:

Convert the pre-trained (.weights & .cfg) YOLOv4 to a Khadas-ready model, and run it on the Khadas board

1. Convert pre-trained YOLOv4 to a Khadas-ready model (folder with .nb, .c, …)

- Download the pre-trained .weights and .cfg from the official repo

wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights

wget https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg

- Follow this guide to modify the three .sh scripts in acuity-toolkit/demo/

- Convert the (.weights, .cfg) pair into a Khadas-ready model by running

bash 0_import_model.sh && bash 1_quantize_model.sh && bash 2_export_case_code.sh

This creates a folder called nbg_unify_yolov4:

(soundmap) ➜ demo git:(master) ✗ ls nbg_unify_yolov4
BUILD  makefile.linux  vnn_global.h  vnn_post_process.h  vnn_pre_process.h  vnn_yolov4.h  yolov4.vcxproj
main.c  nbg_meta.json  vnn_post_process.c  vnn_pre_process.c  vnn_yolov4.c  yolov4.nb
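Before moving on to step 2, a quick check that the export produced the files used later can rule out an incomplete conversion. The sketch below is a hypothetical helper (check_export_dir is not part of acuity-toolkit); the file names are taken from the folder listing, and it only checks that each file exists and is non-empty, not that the model inside is correct.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of acuity-toolkit.
# Verifies that the files copied to the board in steps 2 and 3 exist
# and are non-empty in the export folder (default: nbg_unify_yolov4).
check_export_dir() {
  local dir="${1:-nbg_unify_yolov4}"
  local missing=0
  local f
  for f in yolov4.nb vnn_yolov4.c vnn_yolov4.h vnn_pre_process.h vnn_post_process.h; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f" >&2
      missing=1
    fi
  done
  # 0 if every file is present, 1 otherwise
  return "$missing"
}
```

Usage, from acuity-toolkit/demo/: check_export_dir nbg_unify_yolov4 && echo "export looks complete".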

2. Compile the newly converted model (on the board)

- Move the header files (.h) and the vnn_yolov4.c file from nbg_unify_yolov4 onto the board, into aml_npu_app/detect_library/model_code/detect_yolo_v4 (the headers go into its include/ subfolder)

scp vnn_post_process.h vnn_pre_process.h vnn_yolov4.h <BOARD>:path/to/aml_npu_app/detect_library/model_code/detect_yolo_v4/include/

scp vnn_yolov4.c <BOARD>:path/to/aml_npu_app/detect_library/model_code/detect_yolo_v4

- Compile the model by running (on the board)

cd path/to/aml_npu_app/detect_library/model_code/detect_yolo_v4

./build_vx.sh

The compilation seems successful: the bin_r folder has been created, i.e.

khadas@biped1:~/aml_npu_app/detect_library/model_code/detect_yolo_v4$ ls bin_r/
libnn_yolo_v4.so  vnn_yolov4.o  yolo_v4.o  yolov4_process.o

- Move the generated libnn_yolo_v4.so and the yolov4.nb (created at the beginning when converting the model) to the right locations on the board

cp bin_r/libnn_yolo_v4.so ~/aml_npu_demo_binaries/detect_demo_picture/lib # ran on the board

scp yolov4.nb <BOARD>:path/to/aml_npu_demo_binaries/detect_demo_picture/nn_data/yolov4_88.nb # ran on the host computer
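A similar check can be run on the board once the files are copied, to make sure the demo is not picking up a stale model from an earlier run. This is a hypothetical helper (check_deploy is not part of aml_npu_demo_binaries); it assumes the demo reads the library from lib/libnn_yolo_v4.so and the model from nn_data/yolov4_88.nb, as in the two commands above. The optional second argument is a copy of the freshly exported yolov4.nb, compared byte-for-byte with the deployed one.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of aml_npu_demo_binaries.
# $1: demo directory, e.g. ~/aml_npu_demo_binaries/detect_demo_picture
# $2: optional reference copy of the freshly exported yolov4.nb
check_deploy() {
  local demo_dir="$1"
  local src_nb="${2:-}"
  local status=0
  if [ ! -s "$demo_dir/lib/libnn_yolo_v4.so" ]; then
    echo "missing or empty: lib/libnn_yolo_v4.so" >&2
    status=1
  fi
  if [ ! -s "$demo_dir/nn_data/yolov4_88.nb" ]; then
    echo "missing or empty: nn_data/yolov4_88.nb" >&2
    status=1
  fi
  # When a reference .nb is given, the deployed model must be identical to it
  if [ -n "$src_nb" ] && ! cmp -s "$src_nb" "$demo_dir/nn_data/yolov4_88.nb"; then
    echo "nn_data/yolov4_88.nb differs from $src_nb" >&2
    status=1
  fi
  return "$status"
}
```

Usage on the board: check_deploy ~/aml_npu_demo_binaries/detect_demo_picture /path/to/yolov4.nb, where the second path points wherever the exported file was copied.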

3. Test the newly compiled model

- On the board, in aml_npu_demo_binaries/detect_demo_picture, run

sudo ./INSTALL

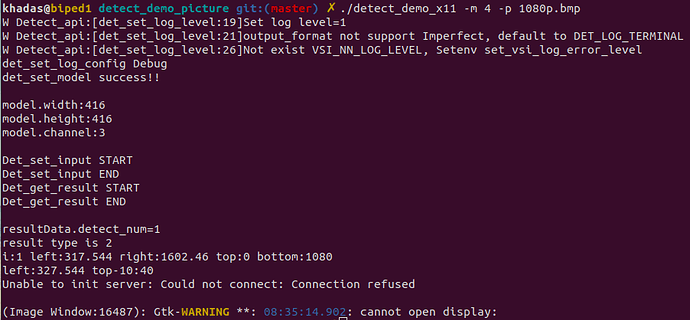

./detect_demo_x11 -m 4 -p 1080p.bmp

The above command runs without errors, but nothing is detected:

khadas@biped1:~/aml_npu_demo_binaries/detect_demo_picture$ ./detect_demo_x11 -m 4 -p 1080p.bmp

W Detect_api:[det_set_log_level:19]Set log level=1

W Detect_api:[det_set_log_level:21]output_format not support Imperfect, default to DET_LOG_TERMINAL

W Detect_api:[det_set_log_level:26]Not exist VSI_NN_LOG_LEVEL, Setenv set_vsi_log_error_level

det_set_log_config Debug

det_set_model success!!

model.width:608

model.height:608

model.channel:3

Det_set_input START

Det_set_input END

Det_get_result START

Det_get_result END

resultData.detect_num=0

result type is 0
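To compare runs over several test images without reading the whole log each time, the detection count can be extracted from the demo output. This sketch assumes the log format shown above (a line of the form resultData.detect_num=N); detect_count is a hypothetical helper name, and it prints the value from the last such line, or nothing if no such line appears.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads a detect_demo log on stdin and prints
# N from the last "resultData.detect_num=N" line (empty if none).
detect_count() {
  sed -n 's/.*resultData\.detect_num=\([0-9][0-9]*\).*/\1/p' | tail -n 1
}
```

For the run above, ./detect_demo_x11 -m 4 -p 1080p.bmp 2>&1 | detect_count should print 0; running it in a loop over a few other .bmp images shows whether the zero count is specific to this picture.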

Note that before the conversion, the model was able to detect the person in the image. Below is an inference run with the yolov4 (darknet) repo, using:

./darknet detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights -ext_output ../1080p.bmp

Does anyone have an idea of what I could be doing wrong?

Thank you, and have a great end of the week!

Arthur