I am trying to run a non-image network through the NPU. I know you are meant to use the conversion scripts (e.g. 0_import, 1_quantize, 2_…) to convert a TensorFlow .pb model.

However, the parameters are image-specific, as is the documentation.

My input is a binary vector, for example of shape (, 128). I don’t have channels, so the assumed channel ordering, quantization, and preprocessing are off. I am also unsure how to handle multiple inputs, or what to use for the source file if I am not feeding in saved images…
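To make the "no saved images" problem concrete, here is a minimal sketch of how I could dump sample inputs to disk instead. Everything in it is an assumption on my side: that both inputs are binary vectors of length 128 and 20, that `.npy` files are an acceptable stand-in for images, and that the list file takes one line per sample with one path per input. The helper name `write_sample_inputs` is hypothetical, and I have not confirmed that the conversion tool accepts this layout.

```python
from pathlib import Path

import numpy as np


def write_sample_inputs(out_dir, n_samples=10, sizes=(128, 20), seed=0):
    """Save random binary vectors as .npy files and write a list file.

    ASSUMPTION: one line per sample, one space-separated path per model
    input. This layout is a guess, not a documented format.
    """
    rng = np.random.default_rng(seed)
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    lines = []
    for i in range(n_samples):
        paths = []
        for j, size in enumerate(sizes):
            # Shape (1, size): a batch of one 1-D binary vector, no channels.
            vec = rng.integers(0, 2, size=(1, size)).astype(np.float32)
            path = out / f"sample{i}_input{j}.npy"
            np.save(path, vec)
            paths.append(str(path))
        lines.append(" ".join(paths))

    list_file = out / "validation_tf.txt"
    list_file.write_text("\n".join(lines) + "\n")
    return list_file


# Example call (writes 10 samples for the two inputs):
# write_sample_inputs("./data")
```

In practice the random vectors would be replaced with real validation samples, since quantization ranges are calibrated from whatever data is fed in.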

Can anyone help with setting the params for 1D, non-image inputs/models? My guesses are below, but they aren’t correct.

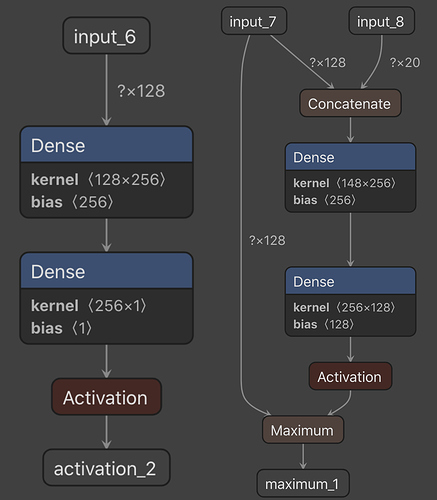

These are my two TensorFlow model architectures.

$convert_tf \

--tf-pb ./model/mymodel.pb \

--inputs input \

--input-size-list ',128' ', 20' \

--outputs maximum_1 activation_2 \

--net-output ${NAME}.json \

--data-output ${NAME}.data

$tensorzone \

--action quantization \

--source text \

--source-file ./data/validation_tf.txt \

--channel-mean-value '128 0 0 1' \

--model-input ${NAME}.json \

--model-data ${NAME}.data \

--quantized-dtype asymmetric_quantized-u8 \

--quantized-rebuild

$tensorzone \

--action inference \

--source text \

--source-file ./data/validation_tf.txt \

--channel-mean-value '128 0 0 1' \

--model-input ${NAME}.json \

--model-data ${NAME}.data \

--dtype quantized

$export_ovxlib \

--model-input ${NAME}.json \

--data-input ${NAME}.data \

--reorder-channel '0 1 2' \

--channel-mean-value '128 0 0 1' \

--export-dtype quantized \

--model-quantize ${NAME}.quantize \

--optimize VIPNANOQI_PID0X88 \

--viv-sdk ../bin/vcmdtools \

--pack-nbg-unify
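To show why I think the `--channel-mean-value '128 0 0 1'` guess is off for binary inputs, here is a minimal sketch of the arithmetic. It assumes the four values mean "three per-channel means, then a scale", applied as `(x - mean) / scale`, and it uses a generic affine u8 scheme whose range always includes zero. Both are my assumptions, not confirmed behaviour of the tool, and all function names are hypothetical.

```python
def preprocess(values, mean, scale):
    # ASSUMED meaning of --channel-mean-value: (x - mean) / scale.
    return [(v - mean) / scale for v in values]


def affine_u8_params(lo, hi):
    # Generic asymmetric u8 quantization; the range is widened to
    # include 0.0 so that zero is exactly representable.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # avoid a zero scale for flat ranges
    zero_point = int(round(-lo / scale))
    return scale, zero_point


def quantize_u8(values, scale, zero_point):
    # Round to the nearest code and clamp to the u8 range [0, 255].
    return [max(0, min(255, int(round(v / scale)) + zero_point)) for v in values]


def dequantize_u8(codes, scale, zero_point):
    return [(c - zero_point) * scale for c in codes]


bits = [0, 1, 1, 0]

# My guess from the commands: mean 128, scale 1.
shifted = preprocess(bits, mean=128, scale=1)
s1, z1 = affine_u8_params(min(shifted), max(shifted))
codes_guess = quantize_u8(shifted, s1, z1)

# Identity preprocessing: mean 0, scale 1.
plain = preprocess(bits, mean=0, scale=1)
s2, z2 = affine_u8_params(min(plain), max(plain))
codes_plain = quantize_u8(plain, s2, z2)

print(codes_guess, codes_plain)
```

Under these assumptions, the mean-128 setting shifts the 0/1 values to large negative numbers, so the two bit values end up on codes that sit close together at one end of the u8 range, while identity preprocessing spreads them across the full range.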