Hello @Arjun_Gupta,

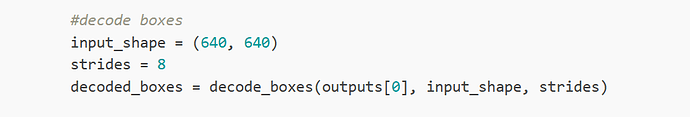

First, there are three strides: [8, 16, 32].
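As a quick sanity check on how the three strides relate to the 8400 in your reshape, here is a small sketch. It assumes a 640×640 model input, which is not stated in this thread but is consistent with the 80, 40 and 20 grid sizes of the demo output.

```python
# Assumption: the model input is 640x640 (not stated in this thread).
INPUT_SIZE = 640
STRIDES = [8, 16, 32]

# Each stride produces one square grid of predictions.
grid_sizes = [INPUT_SIZE // s for s in STRIDES]
cells_per_level = [g * g for g in grid_sizes]
total_cells = sum(cells_per_level)

print(grid_sizes)       # [80, 40, 20]
print(cells_per_level)  # [6400, 1600, 400]
print(total_cells)      # 8400
```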

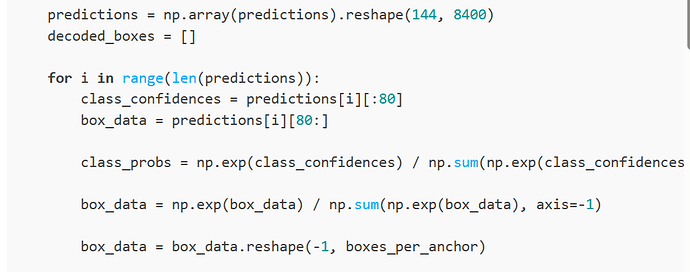

Second, you reshaped the predictions into [144, 8400], so len(predictions) is 144 and the shape of predictions[i][80:] is [1, 8320].

Third, you need to apply softmax to the four parts separately. Split [64, :] into four parts of [16, :] and apply softmax to each part.
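A minimal NumPy sketch of that split, assuming the 64 box channels have already been separated from the class channels. The names `box_logits` and `softmax` are only for illustration, and the random input is placeholder data rather than real model output.

```python
import numpy as np

def softmax(x, axis):
    # Subtract the max first for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

num_cells = 8400
rng = np.random.default_rng(0)
# Placeholder for the 64 box channels, shape [64, 8400].
box_logits = rng.standard_normal((64, num_cells)).astype(np.float32)

# [64, N] -> [4, 16, N]: rows 0-15, 16-31, 32-47 and 48-63 become the four parts.
parts = box_logits.reshape(4, 16, num_cells)

# Softmax over the 16 values of each part, independently per part and per cell.
probs = softmax(parts, axis=1)
print(probs.shape)  # (4, 16, 8400)
```

Applying a single softmax over all 64 rows would give a different result, because the four parts would then share one normalization.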

Last, you missed this step.


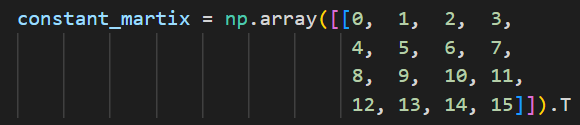

Then apply softmax to each part and multiply the result by a constant matrix. The constant matrix holds the values 0 to 15 and has shape (1, 16).
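Put together, the softmax and the multiplication by the constant matrix could look like the sketch below. The function name `dfl_decode` is hypothetical and this is not the demo's actual code. It only illustrates the arithmetic, again assuming the input is the [64, N] block of box channels.

```python
import numpy as np

def dfl_decode(box_logits):
    """box_logits: [64, N] array -> [4, N] array, one value per part and cell."""
    n = box_logits.shape[1]
    parts = box_logits.reshape(4, 16, n)

    # Numerically stable softmax over the 16 values of each part.
    parts = parts - parts.max(axis=1, keepdims=True)
    e = np.exp(parts)
    probs = e / e.sum(axis=1, keepdims=True)

    # The constant matrix: values 0..15, shape (1, 16).
    const = np.arange(16, dtype=np.float32).reshape(1, 16)

    # (1, 16) @ (4, 16, N) -> (4, 1, N): weighted sum of 0..15 for each part.
    return np.matmul(const, probs).squeeze(1)

# All-zero logits give a uniform softmax, so every output is mean(0..15) = 7.5.
out = dfl_decode(np.zeros((64, 5), dtype=np.float32))
print(out.shape)  # (4, 5)
print(out[0, 0])  # 7.5
```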

A YOLOv8 demo was released last Friday. You can refer to it.

khadas/ksnn: Khadas Software Neural Network (github.com)

Docs

YOLOv8n KSNN Demo - 2 [Khadas Docs]

The model in this demo outputs three tensors: [1, 144, 80, 80], [1, 144, 40, 40] and [1, 144, 20, 20]. You should split your output and reshape it to match these shapes.
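If your model gives you one flat [144, 8400] array, the split could be sketched as follows. This assumes the 8400 columns are the three levels concatenated in stride order (8, 16, 32), each stored row by row. Please verify that against your own converted model, since the layout is not confirmed in this thread.

```python
import numpy as np

# Placeholder for a flat model output of shape [144, 8400].
flat = np.zeros((144, 8400), dtype=np.float32)

grid_sizes = [80, 40, 20]  # one grid per stride 8, 16, 32

# Column offsets where one level ends and the next begins: [6400, 8000].
split_points = np.cumsum([g * g for g in grid_sizes])[:-1]

# Assumption: levels are concatenated along the last axis in stride order.
heads = [
    part.reshape(1, 144, g, g)
    for part, g in zip(np.split(flat, split_points, axis=1), grid_sizes)
]

for h in heads:
    print(h.shape)
# (1, 144, 80, 80)
# (1, 144, 40, 40)
# (1, 144, 20, 20)
```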