Hi all,

I tried to run an INT16 model using the output_format=OUT_FORMAT_INT16 setting, but it didn’t work as expected.

Here’s the code snippet I used:

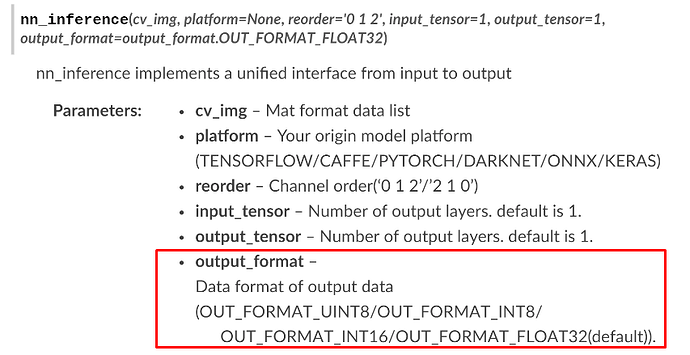

outputs = ssd.nn_inference(cv_img, platform='TFLITE', output_tensor=4, reorder='0 1 2', output_format=output_format.OUT_FORMAT_INT16)

input0_data = outputs[0]

input1_data = outputs[2]

input2_data = outputs[1]

input3_data = outputs[3]

print(outputs)

input0_data = input0_data.reshape(GRID0, GRID0, SPAN, LISTSIZE).transpose(2, 3, 0, 1)

input1_data = input1_data.reshape(GRID1, GRID1, SPAN, LISTSIZE).transpose(2, 3, 0, 1)

input2_data = input2_data.reshape(GRID2, GRID2, SPAN, LISTSIZE).transpose(2, 3, 0, 1)

input3_data = input3_data.reshape(GRID3, GRID3, SPAN, LISTSIZE).transpose(2, 3, 0, 1)

#input_data = [input0_data, input1_data, input2_data, input3_data]

input_data = list()

input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))

input_data.append(np.transpose(input3_data, (2, 3, 0, 1)))
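To make the shape handling above easier to follow, here is a minimal NumPy-only sketch of what the reshape and the two transposes do to one tensor. The sizes used for GRID0, SPAN and LISTSIZE are placeholders chosen for illustration, not the values from the actual model, and `flat` is a stand-in for one flat output array.

```python
import numpy as np

# Placeholder sizes for illustration only; NOT the values from the real model.
GRID0, SPAN, LISTSIZE = 4, 3, 6

# Stand-in for one flat int16 output tensor.
flat = np.arange(GRID0 * GRID0 * SPAN * LISTSIZE, dtype=np.int16)

# Same three steps as in the snippet above.
step1 = flat.reshape(GRID0, GRID0, SPAN, LISTSIZE)   # (GRID0, GRID0, SPAN, LISTSIZE)
step2 = step1.transpose(2, 3, 0, 1)                  # (SPAN, LISTSIZE, GRID0, GRID0)
step3 = np.transpose(step2, (2, 3, 0, 1))            # (GRID0, GRID0, SPAN, LISTSIZE) again

print(step1.shape, step2.shape, step3.shape)

# Applying the permutation (2, 3, 0, 1) twice restores the original axis order.
roundtrip_is_identity = np.array_equal(step1, step3)
print(roundtrip_is_identity)
```

In other words, the arrays appended to input_data have the same layout as the result of the plain reshape, so the two transposes cancel each other out. Whether that layout is what the post-processing expects depends on the model and is not something this sketch can confirm.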

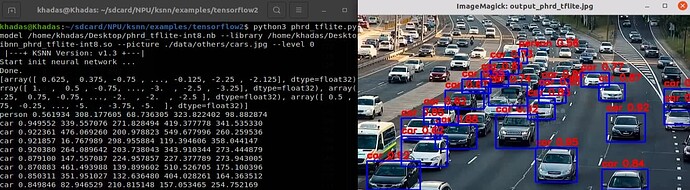

However, the console output shows that all four output tensors contain only zeros, so of course nothing is detected:

|---+ KSNN Version: v1.3 +---|

Start init neural network ...

Done.

[array([0, 0, 0, ..., 0, 0, 0], dtype=int16), array([0, 0, 0, ..., 0, 0, 0], dtype=int16), array([0, 0, 0, ..., 0, 0, 0], dtype=int16), array([0, 0, 0, ..., 0, 0, 0], dtype=int16)]
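Note that NumPy abbreviates large arrays with "..." when printing, so the output above only shows the first and last few elements of each tensor. A small sketch like the following could confirm whether every element is really zero. The helper name `summarize_outputs` and the `fake_outputs` data are made up for illustration; in the real script the list returned by nn_inference would be passed in instead.

```python
import numpy as np

def summarize_outputs(outputs):
    """Return (dtype, size, min, max, nonzero count) for each output array."""
    rows = []
    for arr in outputs:
        arr = np.asarray(arr)
        rows.append((str(arr.dtype), arr.size,
                     int(arr.min()), int(arr.max()),
                     int(np.count_nonzero(arr))))
    return rows

# Stand-in data: one all-zero tensor and one tensor with some non-zero values.
fake_outputs = [
    np.zeros(8, dtype=np.int16),
    np.array([0, -3, 7, 0], dtype=np.int16),
]

summary = summarize_outputs(fake_outputs)
for i, row in enumerate(summary):
    print(i, row)

# True for every tensor that contains no non-zero element at all.
all_zero = [row[4] == 0 for row in summary]
print(all_zero)
```

If every entry of `all_zero` is True for the real outputs, the problem lies before the post-processing (model conversion or inference settings) rather than in the reshape code.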

I have already attempted to convert the model to INT16 using the following command:

./convert \
--model-name phrd_tflite-int16 \
--platform tflite \
--model phrd.tflite \
--mean-values '0 0 0 0.003921569' \
--quantized-dtype dynamic_fixed_point \
--qtype int16 \
--source-files ./data/dataset/dataset0.txt \
--kboard VIM3 \
--print-level 1
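For context on the dynamic_fixed_point setting: in a dynamic fixed-point scheme, an integer q with fractional length fl represents the real value q * 2^(-fl). The sketch below illustrates that arithmetic in plain NumPy. The fractional length FL = 8 and both helper functions are hypothetical; the actual per-tensor fractional lengths are chosen by the converter, and whether KSNN returns raw or already-scaled values with OUT_FORMAT_INT16 is an open question here.

```python
import numpy as np

def dfp_to_float(q, fl):
    """Convert dynamic fixed-point integers to floats: real = q * 2**(-fl)."""
    return np.asarray(q, dtype=np.float32) * np.float32(2.0 ** -fl)

def float_to_dfp_int16(x, fl):
    """Quantize floats to int16 with fractional length fl, saturating at the int16 range."""
    scaled = np.round(np.asarray(x, dtype=np.float64) * (2.0 ** fl))
    return np.clip(scaled, -32768, 32767).astype(np.int16)

FL = 8  # hypothetical fractional length, for illustration only

q = float_to_dfp_int16([0.5, -1.25, 200.0], FL)
x = dfp_to_float(q, FL)

print(q)  # 200.0 does not fit with FL = 8 and saturates at the int16 maximum
print(x)
```

Under this scheme an all-zero int16 tensor dequantizes to all zeros regardless of the fractional length, so scaling alone would not explain the output shown above.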

Can anyone please help me resolve this issue? Thank you.