Hi all,

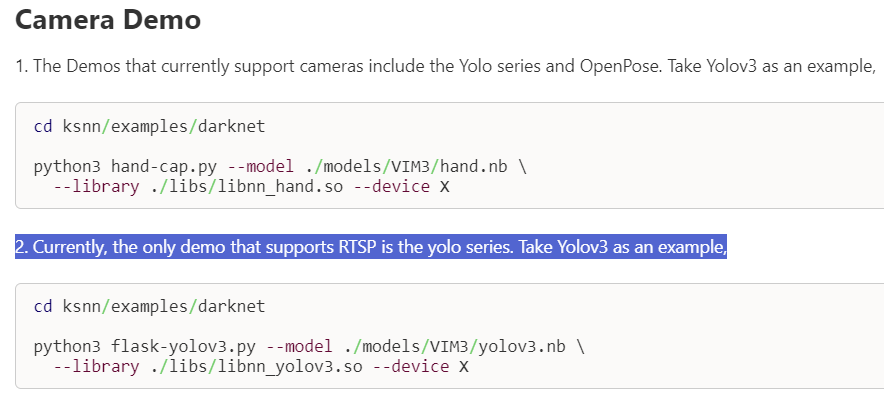

The KSNN Usage page says that the only demo supporting RTSP is the YOLO series. However, the command shown there is the same as the one above for the MIPI camera demo, which made me wonder whether it should include an RTSP link instead.

Can you clarify whether KSNN can work with video or RTSP streams? As far as I can tell, it currently works with images only. Thanks.

@keikeigd The RTSP stream is handled only by the video reader section. KSNN will process any set of frames given as input, regardless of whether they come from a camera or an RTSP stream.

Only that example was written to accept RTSP as an input video stream as well. You can check its code and modify your example to support RTSP input too.

Cheers
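To illustrate the point that only the video reader needs to change, here is a minimal sketch of a reader that accepts either a camera index or an RTSP URL. It assumes the demo opens its input with OpenCV's `cv2.VideoCapture`, which the thread does not confirm; `resolve_source`, `open_capture` and `parse_args` are hypothetical helper names, not part of KSNN. Whether OpenCV can actually open an RTSP URL depends on how it was built (FFmpeg or GStreamer backend).

```python
import argparse


def resolve_source(device: str):
    """Return an int for a V4L2 index such as "1", otherwise the string unchanged."""
    return int(device) if device.isdigit() else device


def open_capture(device: str):
    """Open a camera index or a stream URL (assumes OpenCV is installed)."""
    # Imported lazily so the argument handling works without OpenCV present.
    import cv2

    cap = cv2.VideoCapture(resolve_source(device))
    if not cap.isOpened():
        raise RuntimeError(f"could not open video source: {device}")
    return cap


def parse_args(argv=None):
    # Hypothetical --device option that takes an index or an RTSP URL.
    parser = argparse.ArgumentParser()
    parser.add_argument("--device", required=True,
                        help="camera index (e.g. 1) or RTSP URL")
    return parser.parse_args(argv)
```

With something like this, the frames read from `open_capture(args.device)` would be handed to the model exactly as the camera frames are, so the inference side stays untouched.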

@Electr1 Thank you so much. I installed ffmpeg and v4l2loopback-dkms and fed the RTSP stream into /dev/video1 using these commands:

sudo modprobe v4l2loopback

ffmpeg -i rtsp://admin:1234@192.168.0.164/stream1 -fflags nobuffer -vf format=yuv420p -r 60 -f v4l2 /dev/video1

Note: check first whether /dev/video1 is the dummy (loopback) video device:

$ v4l2-ctl --list-devices

Dummy video device (0x0000) (platform:v4l2loopback-000):

/dev/video1

and then run

python3 flask-yolov3.py --model ./models/VIM3/yolov3.nb --library ./libs/libnn_yolov3.so --device 1

and it worked!
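The check that /dev/video1 really is the loopback device can also be scripted. The sketch below parses the `v4l2-ctl --list-devices` output quoted above; it assumes the loopback entry's name contains the string `v4l2loopback`, as it does in that output, and the function names are made up for illustration.

```python
import subprocess


def parse_v4l2_devices(listing: str) -> dict:
    """Map each device name to its /dev nodes from `v4l2-ctl --list-devices` output."""
    devices = {}
    current = None
    for line in listing.splitlines():
        text = line.strip()
        if not text:
            continue
        if text.startswith("/dev/"):
            # A node line belongs to the most recent device name line.
            if current is not None:
                devices[current].append(text)
        else:
            current = text.rstrip(":")
            devices[current] = []
    return devices


def is_loopback(node: str, devices: dict) -> bool:
    """True if `node` is listed under a device whose name mentions v4l2loopback."""
    return any("v4l2loopback" in name and node in nodes
               for name, nodes in devices.items())


def list_devices() -> dict:
    """Run v4l2-ctl (must be installed) and parse what it prints."""
    out = subprocess.run(["v4l2-ctl", "--list-devices"],
                         capture_output=True, text=True).stdout
    return parse_v4l2_devices(out)
```

Calling `is_loopback("/dev/video1", list_devices())` before starting ffmpeg guards against writing into a real camera node by mistake.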


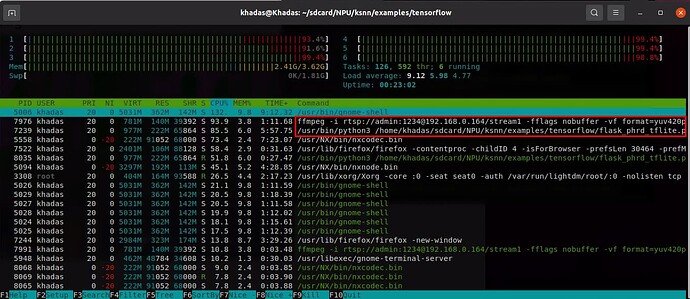

@Electr1 Hi, when testing with an RTSP stream, I have to run two tasks in parallel on the Khadas board:

Step 1: Feed the RTSP stream into /dev/video1:

ffmpeg -re -i /home/khadas/Desktop/flask_video.mp4 -f v4l2 -pix_fmt yuyv422 /dev/video1

Step 2: Run NPU inference for the RTSP stream using my own model and library:

python3 flask_phrd_tflite.py --model /home/khadas/Desktop/phrd.nb --library /home/khadas/Desktop/libnn_phrd.so --device 1 --level 0

As the picture shows, these two tasks almost fully utilize the CPU, so both the overall pipeline and the NPU inference run roughly half as fast as when I process images.

Performance comparison on images and RTSP stream

I tested with the same model and library at 1920x1080 frame resolution.

500 images: total inference time 22.9483 seconds; FPS: 500/22.9483 ≈ 21.79.

500 frames from RTSP: total inference time 38.1780 seconds; FPS: 500/38.1780 ≈ 13.10.
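For anyone repeating the comparison, the arithmetic behind these figures is just frames divided by total inference time. A small sketch using the numbers reported above (the `fps` helper is only for illustration):

```python
def fps(frames: int, seconds: float) -> float:
    """Frames per second over a measured run."""
    return frames / seconds


images_fps = fps(500, 22.9483)
rtsp_fps = fps(500, 38.1780)
ratio = rtsp_fps / images_fps

print(f"images: {images_fps:.2f} FPS")
print(f"stream: {rtsp_fps:.2f} FPS")
print(f"stream throughput is {ratio:.0%} of the image throughput")
```

The ratio makes the slowdown easier to compare across runs, for example before and after changing how the tasks are scheduled.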

Are these results normal for RTSP? Is there a way to make RTSP processing faster?

@keikeigd ffmpeg is a very CPU-intensive application, and the NPU thread will also max out one CPU thread for tasks such as moving data between the system and NPU domains.

You could try using taskset to limit the ffmpeg pipeline to cores 2-5 (the A73 cores), so that the NPU thread can run on cores 0-1 (the A53 cores).

This may improve the performance a fair bit.
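One way to try this from Python is sketched below, assuming a Linux board with `taskset` installed and the core numbering given above. The ffmpeg arguments are copied from the earlier post; `pinned_command`, `pin_current_process` and `start_pipeline` are hypothetical helpers, and none of this has been measured on the board.

```python
import os
import subprocess


def pinned_command(cmd, cores: str):
    """Prefix a command with `taskset -c <cores>` so it runs only on those cores."""
    return ["taskset", "-c", cores, *cmd]


def pin_current_process(cores):
    """Restrict the calling thread to `cores` (Linux only); return the resulting affinity."""
    if not hasattr(os, "sched_setaffinity"):
        return None
    # Only request cores this process is actually allowed to use.
    wanted = set(cores) & os.sched_getaffinity(0)
    if wanted:
        os.sched_setaffinity(0, wanted)
    return os.sched_getaffinity(0)


# ffmpeg arguments as posted earlier in the thread.
FFMPEG_CMD = ["ffmpeg", "-re", "-i", "/home/khadas/Desktop/flask_video.mp4",
              "-f", "v4l2", "-pix_fmt", "yuyv422", "/dev/video1"]


def start_pipeline():
    """Start ffmpeg on cores 2-5, then keep this (inference) process on cores 0-1."""
    feeder = subprocess.Popen(pinned_command(FFMPEG_CMD, "2-5"))
    pin_current_process({0, 1})
    return feeder
```

Calling `pin_current_process` early in the inference script, before any worker threads are created, lets those threads inherit the same affinity. The equivalent from a shell would be to prefix the ffmpeg command with `taskset -c 2-5` and the python3 command with `taskset -c 0-1`.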