In this post, we walk through the complete workflow of deploying the large language model on Khadas Edge2, a high-performance SBC powered by the RK3588S SoC. This setup leverages the on-board NPU to enable efficient inference at the edge, making it a solid choice for running LLMs in low-power or offline environments.

You’ll learn how to:

- Set up the environment and dependencies

- Use the

khadas_llm.shscript to download and build models - Run the demo with NPU acceleration

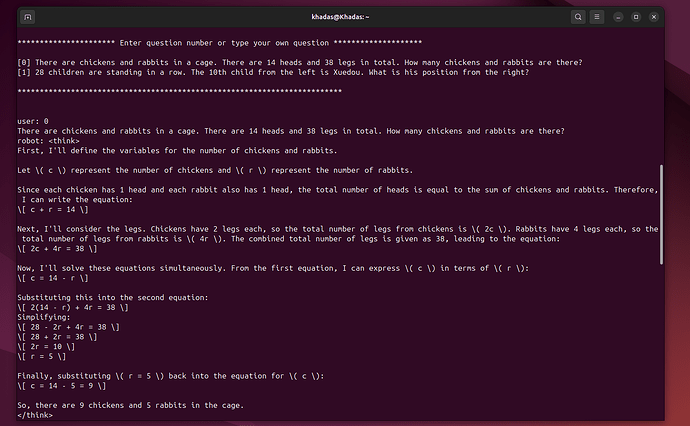

- Explore example outputs and performance