Which system do you use? Android, Ubuntu, OOWOW or others?

Ubuntu

Which version of the system do you use? Please provide the version here:

Ubuntu 22.04

Please describe your issue below:

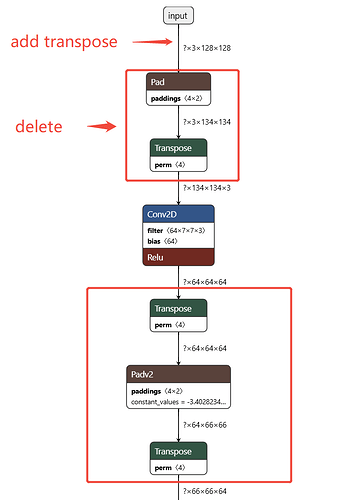

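I am trying to convert a TFLite model to ADLA format and run it.

In Python, the model works fine.

The model input is (3,128,128), i.e. [1, 3, 128, 128] with the batch dimension, as shown in the tensor info below.

So I passed the same shape, "3,128,128", to `--input-shapes` in the conversion script.

The conversion fails with an error saying that the calibration image's channel count does not match the model input's channel count.

How can we solve this problem?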
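For reference, a minimal sketch of getting from a decoded image to the model's [1, 3, 128, 128] channels-first input in Python. It assumes the images are decoded as height x width x channels, which is the usual layout from JPEG decoders such as PIL or OpenCV. `hwc_image_to_nchw` is a hypothetical helper, and any pixel scaling (for example dividing by 255) is left out because the Python preprocessing is not shown here:

```python
import numpy as np


def hwc_image_to_nchw(img: np.ndarray) -> np.ndarray:
    """Turn one H x W x C image into a 1 x C x H x W float32 batch."""
    if img.ndim != 3:
        raise ValueError(f"expected an H x W x C image, got shape {img.shape}")
    chw = np.transpose(img, (2, 0, 1))  # HWC -> CHW: move channels to the front
    return chw[np.newaxis, ...].astype(np.float32)  # add batch dim -> NCHW


# Stand-in for a decoded 128x128 RGB JPEG (random pixels, illustration only).
dummy = np.random.default_rng(0).integers(0, 256, size=(128, 128, 3), dtype=np.uint8)
batch = hwc_image_to_nchw(dummy)
print(batch.shape)  # (1, 3, 128, 128)
```

The transpose only reorders axes, so `batch[0, c, h, w]` equals `dummy[h, w, c]`.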

Post a console log of your issue below:

TFLITE In/Out Tensor info

```
=== Input Tensor ===
name : serving_default_input:0
index : 0
shape : [ 1 3 128 128]
shape_signature : [ -1 3 128 128]
dtype : <class 'numpy.float32'>
quantization : (0.0, 0)
quantization_parameters: {'scales': array([], dtype=float32), 'zero_points': array([], dtype=int32), 'quantized_dimension': 0}
sparsity_parameters : {}

=== Output Tensor ===
name : PartitionedCall:0
index : 147
shape : [1 8]
shape_signature : [-1 8]
dtype : <class 'numpy.float32'>
quantization : (0.0, 0)
quantization_parameters: {'scales': array([], dtype=float32), 'zero_points': array([], dtype=int32), 'quantized_dimension': 0}
sparsity_parameters : {}
```
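The input `shape` field, [1 3 128 128], places the 3 directly after the batch dimension, which is a channels-first (NCHW) layout. A small heuristic sketch that labels a 4-D image shape this way; `guess_layout` is a hypothetical helper, and it assumes channel counts are 1, 3 or 4 while spatial dimensions are larger:

```python
def guess_layout(shape):
    """Heuristically label a 4-D image tensor shape as NCHW or NHWC.

    Assumes channel counts are small (1, 3 or 4) and spatial dims are not.
    """
    if len(shape) != 4:
        raise ValueError(f"expected a 4-D shape, got {shape}")
    small = (1, 3, 4)
    _, d1, _, d3 = shape
    if d1 in small and d3 not in small:
        return "NCHW"  # channels right after the batch dimension
    if d3 in small and d1 not in small:
        return "NHWC"  # channels in the last dimension
    return "ambiguous"


print(guess_layout([1, 3, 128, 128]))  # NCHW
print(guess_layout([1, 128, 128, 3]))  # NHWC
```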

Conversion script

```shell
$adla_convert --model-type tflite \
    --model ./my_model.tflite \
    --inputs "serving_default_input:0" \
    --input-shapes "3,128,128" \
    --dtypes "float32" \
    --quantize-dtype int8 \
    --outdir tflite \
    --channel-mean-value "0,0,0,255" \
    --inference-input-type "float32" \
    --inference-output-type "float32" \
    --source-file "dataset_128_128.txt" \
    --batch-size 1 --target-platform PRODUCT_PID0XA003
```
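The quantization log shows that `--source-file` points at a text file whose entries are calibration image paths such as `./data_128_128/1.jpg`. Assuming the file simply lists one image path per line (an assumption, not checked against the ADLA toolkit documentation), a list like `dataset_128_128.txt` could be generated with a sketch such as this; `write_calibration_list` is a hypothetical helper:

```python
from pathlib import Path


def write_calibration_list(image_dir: str, list_file: str, pattern: str = "*.jpg") -> int:
    """Write one image path per line (assumed --source-file format).

    Returns the number of image paths written.
    """
    paths = sorted(Path(image_dir).glob(pattern))  # lexical order, e.g. 1.jpg, 10.jpg, 2.jpg
    Path(list_file).write_text(
        "".join(f"{p.as_posix()}\n" for p in paths), encoding="utf-8"
    )
    return len(paths)
```

For example, `write_calibration_list("data_128_128", "dataset_128_128.txt")` would write lines such as `data_128_128/1.jpg`.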

Conversion error message

```
I Quantize_info: input_type:<dtype: 'float32'>, quant_type:int8, output_type:<dtype: 'float32'>, disable_per_channel:True, quantizer:True
weights_quantize:0,opt_algo:1,thres_size:4,scale:0.999,iterations:500,iterations_num:10
I Quantize_info: rep_data_gen shape:[[1, 3, 128, 128]], source_file:dataset_128_128.txt,g_channel_mean_value(Only valid for pictures):[[0.0, 0.0, 0.0, 255.0]]
E image[./data_128_128/1.jpg] channel not equal to model input channel,img_shape:(3, 128, 3),shape:[1, 3, 128, 128]
I ----------------Warning(0)----------------
E image[./data_128_128/1.jpg] channel not equal to model input channel,img_shape:(3, 128, 3),shape:[1, 3, 128, 128]
I ----------------Warning(0)----------------
...
Traceback (most recent call last):
  File "tvm/adla_convert.py", line 185, in <module>
  File "tvm/adla_convert.py", line 180, in main
  File "tvm/contrib/target/adla_interface.py", line 87, in convert
  File "tvm/adlalog.py", line 209, in e
ValueError: image[./data_128_128/1.jpg] channel not equal to model input channel,img_shape:(3, 128, 3),shape:[1, 3, 128, 128]
[6224] Failed to execute script 'adla_convert' due to unhandled exception!
```
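The numbers in the error line are consistent with one hypothesis, inferred only from the logged values and not from the converter's source: the tool reads `--input-shapes "3,128,128"` as H,W,C, resizes each calibration image to (H, W) = (3, 128) while keeping the image's own 3 channels (giving `img_shape:(3, 128, 3)`), and then compares those 3 channels with the last dimension of the model input, 128. A sketch of that assumed check; `simulate_calibration_check` is a hypothetical name and is not part of the ADLA toolkit:

```python
def simulate_calibration_check(input_shapes, model_shape, image_channels=3):
    """Hypothetical model of the calibration check (NOT the converter's real code).

    Assumes --input-shapes is parsed as H,W,C, the image is resized to (H, W)
    keeping its own channel count, and that count is compared with the last
    dimension of the model input shape.
    """
    h, w, _ = (int(v) for v in input_shapes.split(","))
    img_shape = (h, w, image_channels)
    return img_shape, img_shape[-1] == model_shape[-1]


print(simulate_calibration_check("3,128,128", [1, 3, 128, 128]))
# ((3, 128, 3), False)  <- matches the logged img_shape
print(simulate_calibration_check("128,128,3", [1, 128, 128, 3]))
# ((128, 128, 3), True)
```

If this reading is right, image-based calibration would expect a channels-last (NHWC) model with `--input-shapes "128,128,3"`, but that is an inference from the log rather than documented behavior.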