Also, how can we run the same example on a video sequence? Should we follow a similar procedure, copying the files to detect_demo instead of detect_demo_picture? If you could provide some instructions on that, it would be really helpful for us to move forward with the development of our product. Thanks in advance.

@Dhruv_Gaba Hello

When I train yolov4, I use 416x416. The cfg file from the darknet GitHub repository uses 608x608, and 608x608 puts too much pressure on the NPU. I do not recommend using 608x608. I have tried many versions, and when balancing recognition accuracy against frame rate, 416x416 is the most suitable.

So, I suggest you train a 416x416 model yourself. When a 608x608 model runs on VIM3 and many classes are recognized, the frame rate may drop to 0. If you need to use 608, you need to edit the source code and recompile:

yolo_demo_fb_mipi/main.cpp:67:#define MODEL_WIDTH 416

yolo_demo_fb_mipi/main.cpp:68:#define MODEL_HEIGHT 416

sample_demo_fb/main.cpp:110: amlge2d.ge2dinfo.dst_info.canvas_w = 416;

sample_demo_fb/main.cpp:111: amlge2d.ge2dinfo.dst_info.canvas_h = 416;

yolo_demo_x11_mipi/main.cpp:67:#define MODEL_WIDTH 416

yolo_demo_x11_mipi/main.cpp:68:#define MODEL_HEIGHT 416

yolo_demo_x11_usb/main.cpp:64:#define MODEL_WIDTH 416

yolo_demo_x11_usb/main.cpp:65:#define MODEL_HEIGHT 416

yolo_demo_fb_usb/main.cpp:65:#define MODEL_WIDTH 416

yolo_demo_fb_usb/main.cpp:66:#define MODEL_HEIGHT 416

sample_demo_x11/main.cpp:107: amlge2d.ge2dinfo.dst_info.canvas_w = 416;

sample_demo_x11/main.cpp:108: amlge2d.ge2dinfo.dst_info.canvas_h = 416;

All of these need to be changed to 608.
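
As a rough illustration of why the input size matters and why width and height must change together, here is a minimal C++ sketch. The names `kModelWidth`, `kModelHeight`, `grid_sizes` and `pixel_ratio` are hypothetical and do not appear in the demo sources, which use the `MODEL_WIDTH` / `MODEL_HEIGHT` macros listed above. It assumes the standard YOLOv3/v4 layout with output strides of 8, 16 and 32.

```cpp
#include <array>

// Hypothetical constants standing in for MODEL_WIDTH / MODEL_HEIGHT.
constexpr int kModelWidth = 608;
constexpr int kModelHeight = 608;

// With output strides of 8, 16 and 32, both input dimensions
// must be multiples of 32 (416 = 13 * 32, 608 = 19 * 32).
static_assert(kModelWidth % 32 == 0, "width must be a multiple of 32");
static_assert(kModelHeight % 32 == 0, "height must be a multiple of 32");

// Grid width of each of the three detection heads for a square input.
constexpr std::array<int, 3> grid_sizes(int input_size) {
    return {input_size / 8, input_size / 16, input_size / 32};
}

// Ratio of input pixels between two square sizes.
// This is only a rough proxy for the extra work per frame.
constexpr double pixel_ratio(int new_size, int old_size) {
    return (static_cast<double>(new_size) * new_size) /
           (static_cast<double>(old_size) * old_size);
}
```

For 608 the three heads are 76, 38 and 19 cells wide, against 52, 26 and 13 for 416, and the network sees about 2.1 times as many input pixels, which is consistent with the lower frame rate described above.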

You can follow our docs. They contain detailed operating steps.

https://docs.khadas.com/vim3/HowToRunNPUDemo.html

https://docs.khadas.com/vim3/HowToUseNPUSDK.html

Our demos cover both MIPI cameras and USB cameras. I have explained this in the docs.

Thanks for the answer, this was the mistake I was making. The compilation worked when I compiled on the VIM3 board instead of the host PC.

@Frank I wanted to run this model, but it gives me errors during the conversion. Can you please shed some light on why it is not converting to .nb format? Thanks.

@Frank I was able to convert this model using the SDK you provided. Then I followed the instructions on this page, copied the libraries and header files, and made the necessary changes in the yolov3_process.c file. But when I compile the model on the VIM3 board using the /build_vx.sh script, it fails. Can you show what changes we need to make to the scripts and code to get it to work?

@Dhruv_Gaba Can the original demo be compiled without any modification?

@Frank Yes, the original demo application (aml_npu_app) compiles perfectly with build_vx.sh, and when I transfer the .so and .nb files to the demo binaries and run them, it works seamlessly.

Hey @Frank, I followed this process to convert my_own.tflite model and run it on the board:

- I converted my .tflite model with the SDK

- Then I moved the files vnn_my_model.h, vnn_pre_process.h, vnn_post_process.h to the include folder of aml_npu_demo_binaries in the yolo_v3

- Then I compiled it, and it works! (I am not sure whether I need to rename the files for the compilation to work)

- Then I moved the .so file generated in the bin_r folder and copied the .nb file to the demo binaries

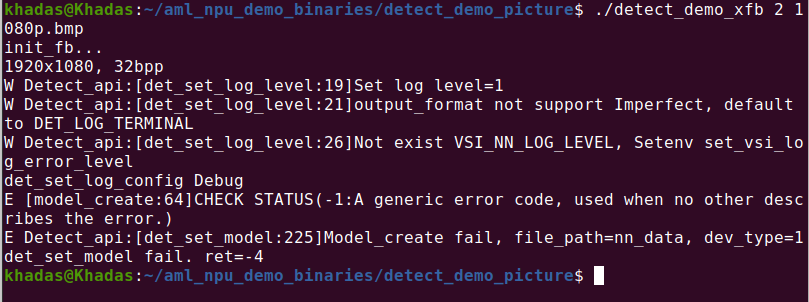

- When I try to run ./detect_demo_xfb 2 1080.bmp, it throws an error

@Dhruv_Gaba What model is your tflite? Is it yolov3?

If yes, how many classes does your yolov3 model have?

If not, you can’t put it into the yolov3 demo. Even if it compiles, it will not run.

@Frank The models are not yolo models. They are hand detection/tracking .tflite models. Is there any way to get them to run on the VIM3 board? We urgently need to deploy these models for the next production line!

Please guide me so that I can at least run these models on the board somehow.

@Dhruv_Gaba You need to design and implement the pre-processing and post-processing yourself. You can’t use yolo’s pre- and post-processing methods.
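
For the post-processing half, a minimal C++ sketch of the first step might look like this. It assumes the converted model produces uint8 outputs with affine quantization and that each output value is a confidence score. Neither assumption is confirmed in this thread, and `dequantize`, `Candidate` and `filter_scores` are hypothetical names, not part of the SDK. The real scale and zero point have to come from your own converted model.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Affine dequantization: real = scale * (q - zero_point).
// scale and zero_point are placeholders for your model's values.
inline float dequantize(std::uint8_t q, float scale, int zero_point) {
    return scale * static_cast<float>(static_cast<int>(q) - zero_point);
}

struct Candidate {
    std::size_t index;  // position in the raw output tensor
    float score;        // dequantized confidence
};

// Keep only the outputs whose dequantized score reaches the threshold.
std::vector<Candidate> filter_scores(const std::vector<std::uint8_t>& raw,
                                     float scale, int zero_point,
                                     float threshold) {
    std::vector<Candidate> kept;
    for (std::size_t i = 0; i < raw.size(); ++i) {
        const float score = dequantize(raw[i], scale, zero_point);
        if (score >= threshold) {
            kept.push_back({i, score});
        }
    }
    return kept;
}
```

What comes after this step (decoding boxes or keypoints, non-maximum suppression) depends entirely on the output layout of the model, which is why the yolo code cannot be reused.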

Got it! thanks for the clarification.

If I change the pre/post-processing methods in the pre/post-processing files and update them in the demo folder, should it work when I run the vnn_process.c file?

Or will I need to do some other compilation on the board to get it to work?

It would be great if you could briefly mention the necessary modifications and how I could run the model from the terminal. That would be really helpful. Thanks in advance.

@Dhruv_Gaba If your pre- and post-processing is correct, it will run.

I think your problem is that the data passed into the model is incorrect. This is what pre-processing needs to do. You need to convert the data into the data your model needs.
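
To make "convert the data into the data your model needs" concrete, here is a minimal C++ sketch of one possible pre-processing step. Everything in it is an assumption to check against your own model: that the camera frame is interleaved RGB bytes, that the model wants planar (channel-first) uint8 input with affine quantization, and the mean and scale values. `PreprocessParams`, `quantize` and `preprocess` are hypothetical names, not SDK functions.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Illustrative parameters; use the values your model was trained
// and converted with.
struct PreprocessParams {
    float mean;        // subtracted from every pixel value
    float norm_scale;  // multiplied after mean subtraction
    float q_scale;     // quantization scale of the input tensor
    int zero_point;    // quantization zero point of the input tensor
};

// Affine quantization: q = round(real / q_scale) + zero_point, clamped to 0..255.
inline std::uint8_t quantize(float real, float q_scale, int zero_point) {
    const long q = std::lround(real / q_scale) + zero_point;
    return static_cast<std::uint8_t>(std::min(255L, std::max(0L, q)));
}

// Convert interleaved RGB (HWC) bytes into planar (CHW) quantized bytes.
// Returns an empty vector if the input size does not match width * height * 3.
std::vector<std::uint8_t> preprocess(const std::vector<std::uint8_t>& hwc,
                                     int width, int height,
                                     const PreprocessParams& p) {
    const std::size_t pixels =
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    if (hwc.size() != pixels * 3) {
        return {};
    }
    std::vector<std::uint8_t> chw(pixels * 3);
    for (std::size_t i = 0; i < pixels; ++i) {
        for (std::size_t c = 0; c < 3; ++c) {
            const float real = (hwc[i * 3 + c] - p.mean) * p.norm_scale;
            chw[c * pixels + i] = quantize(real, p.q_scale, p.zero_point);
        }
    }
    return chw;
}
```

The size check at the top is deliberate: feeding a buffer of the wrong size or layout is an easy way to get wrong results or a crash, even when everything compiles.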

@Frank Thanks for the advice. I will develop my own pre-processing and post-processing code and try again. Thanks for providing the direction.

@Frank I want to compile and run my own model on the VIM3 board.

Could you please tell me how to run my code (pre-processing/post-processing)? aml_npu_app only creates the .so and .o files in the bin_r directory; it does not create an application to run them.

When I transfer the shared object (.so) to aml_npu_demo_binaries and run it, it throws an error saying the buffer is overloaded.

Can you please describe in a bit more detail how to compile and run my own code in aml_npu_app (/detect_library/model_code/detect_yolo_v3)?

@Dhruv_Gaba aml_npu_app includes both applications and libraries. You can check the source code of that repository.

Yeah, I am trying to make sense of the repository so that I can see how to compile my own code, generate the application, and which paths to use.

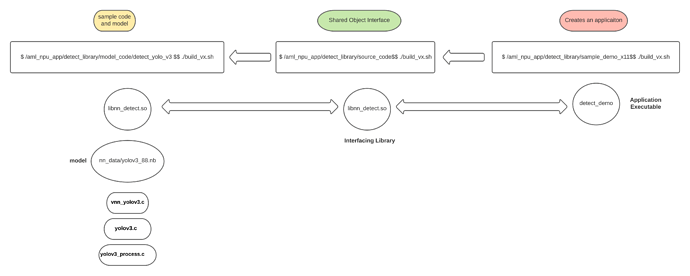

I got some idea from https://docs.khadas.com/vim3/HowToUseAmlNPUApp.html but it still doesn’t clarify how the repositories are linked.

I understood the following:

But there are still a few questions that remain unanswered.

- Can you please verify whether the linking diagram that I have made above is correct? And if anything is missing from my understanding, please let me know.

- Can we run the converted model (.nb format) on a local Ubuntu system (not the VIM3 board)?

- Is the generated ./detect_demo the application that will run my model and code?

- So, do I need to make sure all the code in these 3 folders is correct?

- What is the difference between the fb and x11 modes?

- Do I need to compile the case code produced by the SDK conversion (tflite -> .nb)? If so, do I compile it here, and will the generated application run?

Please let me know your thoughts. Thanks in advance.