You can check the source code.

I updated the kernel and it works with the WD 570; no serious testing, just a functionality check. Your test kernel is configured differently, so it broke nftables/UFW, and I went back to my custom kernel config. Where is the kernel config file in Fenix?

Really? There was no change to the config.

Willy Tarreau maintained the 3.10 LTS kernel, so he qualifies as an ‘official’ kernel hacker. I do not at all. In fact, I learned just a few weeks ago how shitty the situation with those Android vendor kernels is, since for many years I believed Google required SoC vendors to rebase all their code on the upstream kernel version required for a given Android release.

But that’s not happening: they have been forward-porting their crappy internal code base forever, hiding every merge conflict that ever happened along the way. As a result, even with a 5.4 version number, the kernel running on any VIM4 is far from the official 5.4 LTS.

How far? Measuring it would take real time and effort, but it is easily hundreds of thousands of differences. Anyone trusting this crap is lost. But since people have no idea that neither SoC vendors nor board vendors give a shit, they rely on this kernel even for security-relevant stuff.

@foxsquirrel mentioned nftables, so I just checked out `net/netfilter/nf_tables_api.c` from the official 5.4 LTS and from the Amlogic/Khadas mess:

https://www.diffchecker.com/frCrkIrU

That’s just a single file, and one that has zero relationship to Amlogic adaptations (in such cases it would be understandable for the official LTS and vendor kernels to differ).
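For anyone who wants to put a number on the difference for one file instead of eyeballing a web diff, a minimal sketch using Python's standard `difflib` could count removed and added lines. The helper names and the two input paths (say, one copy of the file from each tree) are hypothetical, and a line count is only a rough measure of divergence, not of semantic change.

```python
import difflib
import sys
from pathlib import Path


def count_changed_lines(a_lines, b_lines):
    """Return (removed, added): lines dropped from a_lines and lines new in b_lines."""
    # autojunk=False lets very frequent lines such as "}" or blank lines be used as matches
    matcher = difflib.SequenceMatcher(None, a_lines, b_lines, autojunk=False)
    removed = added = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            removed += i2 - i1
        if tag in ("replace", "insert"):
            added += j2 - j1
    return removed, added


def count_changed_lines_in_files(a_path, b_path):
    # errors="replace" keeps going if a file contains stray non-UTF-8 bytes
    a = Path(a_path).read_text(errors="replace").splitlines()
    b = Path(b_path).read_text(errors="replace").splitlines()
    return count_changed_lines(a, b)


if __name__ == "__main__" and len(sys.argv) == 3:
    removed, added = count_changed_lines_in_files(sys.argv[1], sys.argv[2])
    print(f"-{removed} +{added} lines")
```

Run it as `python3 count_diff.py <lts copy> <vendor copy>` (script name hypothetical). The counts come from the same opcode stream `difflib` uses for unified diffs, so they should line up with what a regular `diff` shows, give or take alignment choices.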

Yes, that is correct. I had to change the “official release” kernel to get nftables and UFW to work.

Thank you for the link.

I tried to post the diff, but the file is too large for the forum.

So far I have not had any I/O issues under load. The VIM4 has been building RetroPie for about 12 hours now. Thanks to you and the dev team for all your efforts; it seems this is a very messy situation.

The only thing that may be missing is the kernel headers, unless I’ve missed them.
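One quick way to check whether headers for the running kernel are installed is sketched below in Python. It assumes the common Debian convention that the build tree is reachable at `/lib/modules/<release>/build` (often a symlink into `/usr/src`); Fenix images may package headers differently, and the helper names are hypothetical.

```python
import os
import platform


def headers_dir(release=None):
    """Conventional build-tree location for a kernel release (Debian-style assumption)."""
    release = release or platform.release()
    return os.path.join("/lib/modules", release, "build")


def has_kernel_headers(release=None):
    # isdir() follows symlinks, so a dangling "build" link (headers not installed) gives False
    return os.path.isdir(headers_dir(release))


if __name__ == "__main__":
    print(headers_dir(), "present" if has_kernel_headers() else "missing")
```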

I’m having problems with a Silicon Power SU001TBP34A60M28CA (1 TB, PCIe Gen3 x4 NVMe). It shows up as unclaimed in `sudo lshw` and appears in `sudo lspci`, but not in `sudo lsblk`.

I’m also getting system errors:

`sudo dmesg | grep nvme`

```
[ 0.686377] nvme nvme0: pci function 0000:01:00.0
[ 0.686618] nvme 0000:01:00.0: enabling device (0000 -> 0002)
[ 128.773790] nvme nvme0: Device not ready; aborting initialisation
[ 128.773893] nvme nvme0: Removing after probe failure status: -19
```
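The two timestamps in that output are about 128 s apart, and the probe status is -19. A small Python sketch can make both numbers concrete. It assumes the driver waits (CAP.TO + 1) × 500 ms for the controller to report ready, as upstream Linux does (CAP.TO is an 8-bit field in 500 ms units per the NVMe spec), and that errno 19 means the same as on Linux. Under those assumptions the gap matches the longest possible wait, which would suggest the drive never signalled ready at all. That is an inference, not something read from the drive's registers; the helper names are hypothetical.

```python
import errno
import os


def ready_timeout_s(cap_to):
    """Ready-wait in seconds for a given CAP.TO, assuming the upstream (CAP.TO + 1) * 500 ms rule."""
    if not 0 <= cap_to <= 0xFF:
        raise ValueError("CAP.TO is an 8-bit field")
    return (cap_to + 1) * 0.5


def describe_status(status):
    """Turn a negative kernel status such as -19 into its errno name and message."""
    code = -status
    return f"{errno.errorcode.get(code, 'unknown')}: {os.strerror(code)}"


# Timestamps copied from the dmesg output above
enable_ts = 0.686618   # "enabling device"
abort_ts = 128.773790  # "Device not ready; aborting initialisation"

elapsed = abort_ts - enable_ts
print(f"elapsed before abort: {elapsed:.1f} s")
print(f"longest possible ready wait: {ready_timeout_s(0xFF):.1f} s")
print(f"status -19 -> {describe_status(-19)}")
```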

Dear All,

Do you have any solution?

Nice board, but without NVMe it is useless…

Thank you

Hello @aplsms

What issues do you have? The current image should fix this issue.

Oh, sorry, my bad.

It works on 5.15.78.

Is it possible to build 6.1.x or 6.2.x?

It would be interesting to use ZFS on this box, but building it fails with an error.

A Debian 12 image would also be very much appreciated. I tried to build one with Fenix and got a broken image (without a partition table)…
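To check whether a built image really lacks a partition table, a minimal Python sketch can look for the two standard signatures: `EFI PART` at the start of LBA 1 for GPT, and the `0x55 0xAA` boot signature at bytes 510-511 for MBR. It assumes 512-byte sectors and an uncompressed image (decompress any `.xz` first). It is only a signature check: an image in some vendor-specific container format would also come back as `None`, so treat `None` as "no standard MBR/GPT found", not as proof of corruption. The helper names are hypothetical.

```python
import sys

SECTOR = 512  # assumption: 512-byte logical sectors


def partition_table_kind(head):
    """Classify the first 1 KiB of a disk image as 'gpt', 'mbr' or None."""
    # Check GPT first: a GPT disk also carries a protective MBR with 0x55AA.
    if head[SECTOR:SECTOR + 8] == b"EFI PART":
        return "gpt"
    # Classic MBR boot signature at bytes 510-511.
    if head[510:512] == b"\x55\xaa":
        return "mbr"
    return None


def image_partition_table(path):
    with open(path, "rb") as f:
        return partition_table_kind(f.read(2 * SECTOR))


if __name__ == "__main__" and len(sys.argv) > 1:
    print(image_partition_table(sys.argv[1]) or "no MBR/GPT signature found")
```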

Okay, so your SSD works, right?

6.x kernels are not supported.

What issues do you have? Can you provide more details?

Do you mean the image you built can’t boot? Did you build the eMMC or the SD image? I checked the SD image on my side and used OOWOW to write it to eMMC; it boots fine.

I was wrong: it does not work on the latest kernel.

Multiple SSDs, same issue:

```
Call trace:
nvme_queue_rq+0x7ac/0x864
__blk_mq_try_issue_directly+0x188/0x22c
blk_mq_try_issue_list_directly+0xa8/0x314
blk_mq_sched_insert_requests+0x1d4/0x1e4
blk_mq_flush_plug_list+0x120/0x200
blk_flush_plug_list+0xe4/0x120
blk_mq_submit_bio+0x248/0x600
__submit_bio+0x1dc/0x1f0
submit_bio_noacct+0x20c/0x244
submit_bio+0x48/0x18c
iomap_dio_submit_bio+0xac/0xc0
iomap_dio_bio_iter+0x2d4/0x504
__iomap_dio_rw+0x524/0x780
iomap_dio_rw+0x10/0x130
ext4_file_read_iter+0xac/0x144
new_sync_read+0xe8/0x180
vfs_read+0x14c/0x1e4
ksys_pread64+0x78/0xc0
__arm64_sys_pread64+0x20/0x30
invoke_syscall+0x48/0x114
el0_svc_common.constprop.0+0x44/0xfc
do_el0_svc+0x28/0x90
el0_svc+0x28/0x80
el0t_64_sync_handler+0xa4/0x130
el0t_64_sync+0x1a4/0x1a8
---[ end trace 9e07f80a0297f121 ]---
blk_update_request: I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) flags 0x4000 phys_seg 34 prio class 0
blk_update_request: I/O error, dev nvme0n1, sector 145959153 op 0x0:(READ) flags 0x4000 phys_seg 2 prio class 0
blk_update_request: I/O error, dev nvme0n1, sector 145963761 op 0x0:(READ) flags 0x4000 phys_seg 43 prio class 0
blk_update_request: I/O error, dev nvme0n1, sector 145964273 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
blk_update_request: I/O error, dev nvme0n1, sector 145964785 op 0x0:(READ) flags 0x4000 phys_seg 64 prio class 0
blk_update_request: I/O error, dev nvme0n1, sector 145965297 op 0x0:(READ) flags 0x0 phys_seg 53 prio class 0
```
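Because the same few sectors keep coming back in these logs, a small Python filter can tally the `I/O error` lines per device, operation and starting sector, which makes repeats easy to spot. The regex assumes the exact message format shown in this thread; the script and helper names are hypothetical.

```python
import re
import sys
from collections import Counter

# Matches e.g. "I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) ..."
IO_ERROR = re.compile(
    r"I/O error, dev (?P<dev>[^,\s]+), sector (?P<sector>\d+) "
    r"op 0x[0-9a-f]+:\((?P<op>\w+)\)"
)


def summarize_io_errors(lines):
    """Count I/O errors per (device, operation, starting sector)."""
    counts = Counter()
    for line in lines:
        m = IO_ERROR.search(line)
        if m:
            counts[(m["dev"], m["op"], int(m["sector"]))] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. sudo dmesg > dmesg.txt && python3 io_errors.py dmesg.txt
    with open(sys.argv[1], errors="replace") as f:
        for (dev, op, sector), n in summarize_io_errors(f).most_common():
            # the kernel reports request positions in 512-byte sectors
            print(f"{dev} {op} sector {sector} (byte offset {sector * 512}): {n}x")
```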

```
khadas@Khadas:~$ uname -a
Linux Khadas 5.15.78 #1.5.2 SMP PREEMPT Thu Nov 2 11:26:43 CST 2023 aarch64 GNU/Linux
khadas@Khadas:~$ lsb_release -a
No LSB modules are available.
Distributor ID: Debian
Description: Debian GNU/Linux 12 (bookworm)
Release: 12
Codename: bookworm
```

I will create a separate thread about ZFS.

That is not the latest kernel. Please check with the latest image, 1.6-231229; you can install it with OOWOW online.

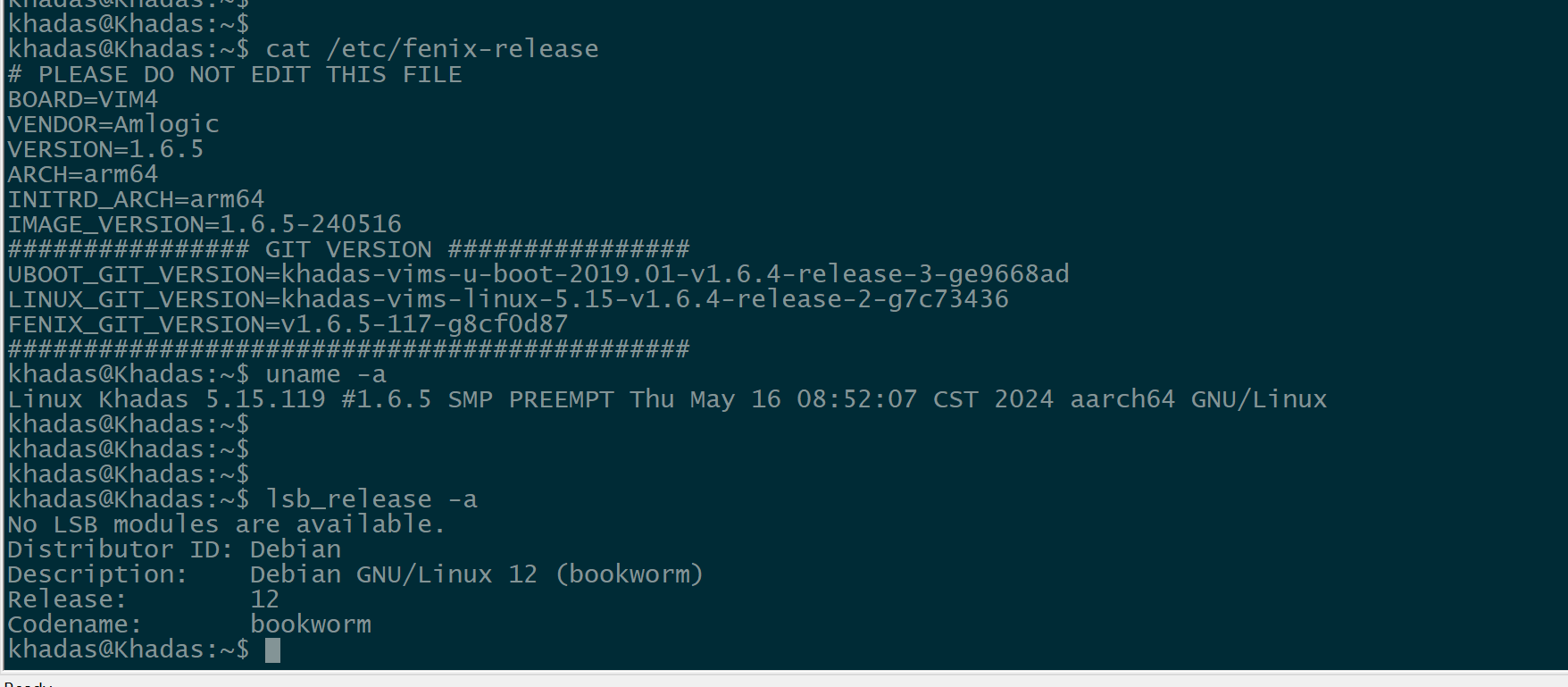

The latest kernel version should be 5.15.119.
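When checking whether a board already runs at least 5.15.119, compare versions numerically: as plain strings, "5.15.78" sorts after "5.15.119". A minimal Python sketch (helper names hypothetical; only the leading numeric part of the release string is parsed, so suffixes like `-arm64` are ignored):

```python
import platform
import re


def kernel_version(release):
    """Parse the numeric part of a kernel release string, e.g. '5.15.78' -> (5, 15, 78)."""
    m = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if m is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return tuple(int(part or 0) for part in m.groups())


def is_at_least(release, minimum):
    # Integer tuples compare component by component, unlike plain strings.
    return kernel_version(release) >= kernel_version(minimum)


if __name__ == "__main__":
    running = platform.release()
    print(running, "OK" if is_at_least(running, "5.15.119") else "older than 5.15.119")
```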

The image build is stuck at fdisk:

`Using /home/apl/GIT/fenix/build/images/vim4-debian-12-minimal-linux-5.15-fenix-1.6.5-240515-develop.raw.img`

fdisk is taking 100% CPU.

I only see vim4-debian-11-server-linux-5.15-fenix-1.5.2-231102-develop-test-only.img.xz in the dl.khadas.com index of /.images/vim4/.

Am I looking in the wrong place?

Please don’t use the RAW image; the SD image should be fine.

You can’t see other images in OOWOW?

I see a lot of images, but only one Debian image.

Hello @aplsms

Yes, we have not uploaded Debian images; you can try the other images or build one with Fenix yourself.

Hi again. Same problem with the latest kernel:

```
root@hassio:~# lsb_release -a
No LSB modules are available.
Distributor ID: Debian
Description: Debian GNU/Linux 12 (bookworm)
Release: 12
Codename: bookworm
root@hassio:~# uname -a
Linux hassio.petrenko.me 5.15.119 #1.6.5 SMP PREEMPT Thu May 16 15:32:56 UTC 2024 aarch64 GNU/Linux
```

Part of the dmesg output:

```
[Thu May 16 16:19:28 2024] sg[0] phys_addr:0x000000016f0b4400 offset:1024 length:80896 dma_address:0x00000000c1500000 dma_length:80896
[Thu May 16 16:19:28 2024] sg[1] phys_addr:0x000000016f232000 offset:0 length:181248 dma_address:0x00000000c1514000 dma_length:181248
[Thu May 16 16:19:28 2024] ------------[ cut here ]------------
[Thu May 16 16:19:28 2024] Invalid SGL for payload:262144 nents:2
[Thu May 16 16:19:28 2024] WARNING: CPU: 3 PID: 4811 at drivers/nvme/host/pci.c:713 nvme_queue_rq+0x760/0x820
[Thu May 16 16:19:28 2024] Modules linked in: nf_conntrack_netlink(E) br_netfilter(E) bridge(E) stp(E) llc(E) iv009_isp(E) overlay(E) snd_seq(E) dhd(E) xt_addrtype(E) xt_MASQUERADE(E) xt_conntrack(E) nft_compat(E) nft_counter(E) nft_chain_nat(E) nf_tables(E) nfnetlink(E) nf_nat(E) nf_conntrack(E) nf_defrag_ipv6(E) nf_defrag_ipv4(E) amlogic_multienc(E) amlogic_jpegenc(E) media_sync(E) amvdec_avs2_fb_v4l(E) amvdec_mavs_v4l(E) amvdec_avs2_v4l(E) amvdec_avs3_v4l(E) amvdec_av1_v4l(E) amvdec_av1(E) amvdec_mavs(E) amvdec_avs2(E) amvdec_vp9_v4l(E) amvdec_vp9(E) zram(E) amvdec_vc1(E) amvdec_mmpeg4_v4l(E) amvdec_mmpeg4(E) usbkbd(E) amvdec_mmpeg12_v4l(E) amvdec_mmpeg12(E) amvdec_mmjpeg_v4l(E) amvdec_mmjpeg(E) amvdec_h265_v4l(E) amvdec_h265(E) amvdec_h264mvc(E) amvdec_mh264_v4l(E) usbhid(E) amvdec_mh264(E) apple_mfi_fastcharge(E) amvdec_ports(E) stream_input(E) pts_server(E) video_framerate_adapter(E) khadas_mcu(E) decoder_common(E) iv009_isp_sensor(E) iv009_isp_lens(E) mali_kbase(E) firmware(E)
[Thu May 16 16:19:28 2024] media_clock(E) iv009_isp_iq(E) amlcam(E) v4l2_fwnode(E) amlogic_seckey(E) amlogic_host(E) v4l2_async(E) mac80211(E) joydev(E) cfg80211(E) amlogic_snd_codec_ad82128(E) amlogic_snd_codec_tas5805(E) amlogic_snd_codec_tas5707(E) amlogic_snd_codec_tl1(E) amlogic_snd_codec_t9015(E) amlogic_snd_soc(E) amlogic_snd_codec_dummy(E) amlogic_jtag(E) amlogic_led(E) amlogic_socinfo(E) amlogic_rtc(E) dwc_otg(E) amlogic_dvb_ci(E) amlogic_dvb_demux(E) amlogic_audio_utils(E) i2c_dev(E) dwmac_dwc_qos_eth(E) dwmac_meson(E) system_heap(E) ntfs3(E) zsmalloc(E) sha1_ce(E) amlogic_wireless(E) amlogic_crypto_dma(E) ip_tables(E) x_tables(E) autofs4(E) amlogic_pcie_v2_host(E) dwmac_meson8b(E) stmmac_platform(E) stmmac(E) aml_drm(E) amlogic_mdio_g12a(E) amlogic_inphy(E) mdio_mux(E) amlogic_phy_debug(E) amlogic_irblaster(E) amlogic_usb(E) amlogic_thermal(E) amlogic_adc(E) amlogic_camera(E) aml_media(E) amlogic_dvb(E) amlogic_watchdog(E) amlogic_input(E) amlogic_pm(E) dvb_core(E)
[Thu May 16 16:19:28 2024] videobuf_core(E) aml_smmu(E) optee(E) amlogic_tee(E) tee(E) amlogic_rng(E) amlogic_spi(E) amlogic_i2c(E) amlogic_mmc(E) cqhci(E) amlogic_efuse_unifykey(E) amlogic_cpufreq(E) amlogic_cpuinfo(E) amlogic_power(E) amlogic_reset(E) pwm_regulator(E) amlogic_pwm(E) amlogic_mailbox(E) amlogic_pinctrl_soc_t7(E) amlogic_gpio(E) amlogic_aoclk_g12a(E) amlogic_clk_soc_t7(E) amlogic_clk(E) amlogic_secmon(E) amlogic_memory_debug(E) amlogic_debug(E) page_trace(E) pcs_xpcs(E) amlogic_hwspinlock(E) gpio_regulator(E) amlogic_debug_iotrace(E) amlogic_gkitool(E)
[Thu May 16 16:19:28 2024] CPU: 3 PID: 4811 Comm: mysqld Tainted: G E 5.15.119 #1.6.5
[Thu May 16 16:19:28 2024] Hardware name: Khadas VIM4 (DT)
[Thu May 16 16:19:28 2024] pstate: 60400005 (nZCv daif +PAN -UAO -TCO -DIT -SSBS BTYPE=--)
[Thu May 16 16:19:28 2024] pc : nvme_queue_rq+0x760/0x820
[Thu May 16 16:19:28 2024] lr : nvme_queue_rq+0x760/0x820
[Thu May 16 16:19:28 2024] sp : ffffffc00cb73630
[Thu May 16 16:19:28 2024] x29: ffffffc00cb73630 x28: 0000000000000013 x27: ffffffc00a008000
[Thu May 16 16:19:28 2024] x26: 0000000000000002 x25: ffffff8130a2a000 x24: ffffff81071e9300
[Thu May 16 16:19:28 2024] x23: ffffff812c923330 x22: 0000000000000002 x21: 0000000000000000
[Thu May 16 16:19:28 2024] x20: ffffffd60a2cbb18 x19: ffffff812c923200 x18: 0000000000000000
[Thu May 16 16:19:28 2024] x17: 64615f616d642038 x16: 34323138313a6874 x15: 0f310f320f360f32
[Thu May 16 16:19:28 2024] x14: 0f3a0f640f610f6f x13: ffffffd609ca39d0 x12: 0000000000002175
[Thu May 16 16:19:28 2024] x11: 0000000000000b27 x10: ffffffd609f639d0 x9 : ffffffd609ca39d0
[Thu May 16 16:19:28 2024] x8 : 00000000ffff7fff x7 : ffffffd609f639d0 x6 : 80000000ffff8000
[Thu May 16 16:19:28 2024] x5 : ffffff8229ea0b08 x4 : 0000000000000000 x3 : 0000000000000027
[Thu May 16 16:19:28 2024] x2 : 0000000000000000 x1 : 0000000000000000 x0 : ffffff810ab31240
[Thu May 16 16:19:28 2024] Call trace:
[Thu May 16 16:19:28 2024] nvme_queue_rq+0x760/0x820
[Thu May 16 16:19:28 2024] __blk_mq_try_issue_directly+0x188/0x230
[Thu May 16 16:19:28 2024] blk_mq_try_issue_list_directly+0x78/0x2b4
[Thu May 16 16:19:28 2024] blk_mq_sched_insert_requests+0x210/0x220
[Thu May 16 16:19:28 2024] blk_mq_flush_plug_list+0x110/0x1f0
[Thu May 16 16:19:28 2024] blk_flush_plug_list+0xfc/0x134
[Thu May 16 16:19:28 2024] blk_mq_submit_bio+0x25c/0x60c
[Thu May 16 16:19:28 2024] __submit_bio+0x1e8/0x1fc
[Thu May 16 16:19:28 2024] submit_bio_noacct+0x224/0x260
[Thu May 16 16:19:28 2024] submit_bio+0x54/0x180
[Thu May 16 16:19:28 2024] iomap_dio_submit_bio+0xbc/0xd0
[Thu May 16 16:19:28 2024] iomap_dio_bio_iter+0x2c4/0x500
[Thu May 16 16:19:28 2024] __iomap_dio_rw+0x55c/0x7e0
[Thu May 16 16:19:28 2024] iomap_dio_rw+0x10/0x50
[Thu May 16 16:19:28 2024] ext4_file_read_iter+0xb8/0x1f0
[Thu May 16 16:19:28 2024] new_sync_read+0xfc/0x1a0
[Thu May 16 16:19:28 2024] vfs_read+0x18c/0x1e0
[Thu May 16 16:19:28 2024] ksys_pread64+0x7c/0xc0
[Thu May 16 16:19:28 2024] __arm64_sys_pread64+0x20/0x30
[Thu May 16 16:19:28 2024] invoke_syscall+0x48/0x114
[Thu May 16 16:19:28 2024] el0_svc_common.constprop.0+0x44/0xec
[Thu May 16 16:19:28 2024] do_el0_svc+0x24/0xa0
[Thu May 16 16:19:28 2024] el0_svc+0x20/0x80
[Thu May 16 16:19:28 2024] el0t_64_sync_handler+0xe8/0x114
[Thu May 16 16:19:28 2024] el0t_64_sync+0x1b0/0x1b4
[Thu May 16 16:19:28 2024] ---[ end trace c07d6d3fc9ebed48 ]---
[Thu May 16 16:19:28 2024] blk_update_request: I/O error, dev nvme0n1, sector 145963761 op 0x0:(READ) flags 0x4000 phys_seg 2 prio class 0
[Thu May 16 16:19:29 2024] eth0: renamed from vetheb276f8
[Thu May 16 16:19:29 2024] IPv6: ADDRCONF(NETDEV_CHANGE): veth9272696: link becomes ready
[Thu May 16 16:19:30 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) flags 0x4000 phys_seg 2 prio class 0
[Thu May 16 16:19:30 2024] blk_update_request: I/O error, dev nvme0n1, sector 145957617 op 0x0:(READ) flags 0x4000 phys_seg 2 prio class 0
[Thu May 16 16:19:30 2024] blk_update_request: I/O error, dev nvme0n1, sector 145963761 op 0x0:(READ) flags 0x4000 phys_seg 46 prio class 0
[Thu May 16 16:19:30 2024] blk_update_request: I/O error, dev nvme0n1, sector 145964273 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
[Thu May 16 16:19:30 2024] blk_update_request: I/O error, dev nvme0n1, sector 145964785 op 0x0:(READ) flags 0x4000 phys_seg 32 prio class 0
[Thu May 16 16:19:32 2024] blk_update_request: I/O error, dev nvme0n1, sector 145963761 op 0x0:(READ) flags 0x4000 phys_seg 2 prio class 0
[Thu May 16 16:19:34 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
[Thu May 16 16:19:34 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951985 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
[Thu May 16 16:19:34 2024] blk_update_request: I/O error, dev nvme0n1, sector 145952497 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
[Thu May 16 16:19:34 2024] blk_update_request: I/O error, dev nvme0n1, sector 145953009 op 0x0:(READ) flags 0x0 phys_seg 64 prio class 0
[Thu May 16 16:19:36 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) flags 0x4000 phys_seg 31 prio class 0
[Thu May 16 16:19:36 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951985 op 0x0:(READ) flags 0x4000 phys_seg 51 prio class 0
[Thu May 16 16:19:36 2024] blk_update_request: I/O error, dev nvme0n1, sector 145952497 op 0x0:(READ) flags 0x4000 phys_seg 65 prio class 0
[Thu May 16 16:19:36 2024] blk_update_request: I/O error, dev nvme0n1, sector 145953009 op 0x0:(READ) flags 0x4000 phys_seg 64 prio class 0
[Thu May 16 16:19:36 2024] blk_update_request: I/O error, dev nvme0n1, sector 145953521 op 0x0:(READ) flags 0x0 phys_seg 49 prio class 0
[Thu May 16 16:19:39 2024] blk_update_request: I/O error, dev nvme0n1, sector 145951473 op 0x0:(READ) flags 0x4000 phys_seg 33 prio class 0
```

Only Docker is running on the NVMe.
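The `Invalid SGL for payload:262144 nents:2` warning in that log is raised when the NVMe PCI driver cannot describe a request's scatter list with PRP entries. The two segments do add up to the payload (80896 + 181248 = 262144 bytes), but the first one starts at a page-aligned DMA address and is 80896 bytes long (19.75 pages of 4 KiB), so it ends mid-page while a second segment still follows. The Python sketch below is a simplified model of the upstream `nvme_pci_setup_prps()` walk, assuming a 4 KiB controller page; only lengths and the first segment's offset matter in this model, and `prp_walk_ok` is a hypothetical name. It also tries a hypothetical variant where the DMA address keeps the 1024-byte offset that `phys_addr` shows: then the first segment ends exactly on a page boundary (1024 + 80896 = 81920 = 20 × 4096). That points at the sub-page offset being lost somewhere in DMA mapping, but this is only a reading of the numbers, not a confirmed diagnosis.

```python
PAGE = 4096  # assumed NVMe controller page size (4 KiB)


def prp_walk_ok(payload, segments):
    """Return False where the PRP walk would hit 'Invalid SGL for payload'.

    segments: (dma_address, dma_length) pairs as printed by the kernel.
    Simplified model of upstream nvme_pci_setup_prps(); allocating and
    chaining PRP list pages is left out since it does not change the outcome.
    """
    segs = iter(segments)
    dma_addr, dma_len = next(segs)
    offset = dma_addr & (PAGE - 1)

    # PRP1 may start mid-page and covers the rest of that first page.
    length = payload - (PAGE - offset)
    if length <= 0:
        return True
    dma_len -= PAGE - offset
    if dma_len == 0:
        _, dma_len = next(segs)

    # If at most one more page remains, PRP2 points at it directly.
    if length <= PAGE:
        return True

    # Otherwise every further PRP entry has to cover one whole page.
    while True:
        dma_len -= PAGE
        length -= PAGE
        if length <= 0:
            return True
        if dma_len > 0:
            continue
        if dma_len < 0:
            # Segment ended part-way through a page while data remains.
            return False
        _, dma_len = next(segs)


logged = [(0x00000000C1500000, 80896), (0x00000000C1514000, 181248)]
offset_kept = [(0x00000000C1500400, 80896), (0x00000000C1514000, 181248)]  # hypothetical
print("as logged:", prp_walk_ok(262144, logged))
print("with 1024-byte offset kept:", prp_walk_ok(262144, offset_kept))
```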