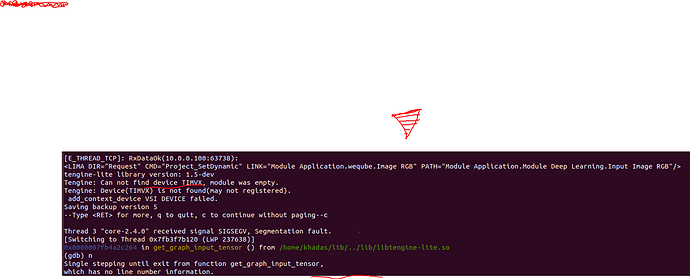

@Frank I am using gdb to track down the error, and it points to TIM-VX as the source of the issue. Do you have any idea what I need to check? I initially thought the program was not able to read the Tengine model, but I think it reads it correctly, since no error about the model failing to load is printed. Please find the screenshot above. Any input would help me solve the issue.

I traced the program and found that the following snippet is being executed:

```c
struct device* selected_device = find_device_via_name(dev_name);

// No registered device matches dev_name, so the lookup returned NULL
if (NULL == selected_device)
{
    TLOG_ERR("Tengine: Device(%s) is not found(may not registered).\n", dev_name);
    return -1;
}
```
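To illustrate why this branch can be hit even when the model loads fine, here is a minimal, hypothetical sketch of a name-based device registry. It is not Tengine's actual implementation: the `struct device` layout, `register_device`, and `MAX_DEVICES` are assumptions made for illustration. The idea it shows is that a lookup like `find_device_via_name` can only return a backend that registered itself earlier, so if the TIM-VX backend was never built or registered (an assumed cause, not confirmed), the lookup returns `NULL`.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified device descriptor (NOT Tengine's real struct device). */
struct device {
    const char* name;
};

#define MAX_DEVICES 8 /* hypothetical registry capacity */

/* Global registry that each compiled-in backend would add itself to at init time. */
static struct device* registered_devices[MAX_DEVICES];
static int device_count = 0;

/* Adds a backend to the registry; returns 0 on success, -1 if the registry is full. */
int register_device(struct device* dev)
{
    assert(dev != NULL && dev->name != NULL);
    if (device_count >= MAX_DEVICES)
        return -1;
    registered_devices[device_count++] = dev;
    return 0;
}

/* Linear search by exact (case-sensitive) name; NULL means the backend never registered. */
struct device* find_device_via_name(const char* name)
{
    for (int i = 0; i < device_count; i++)
    {
        if (strcmp(registered_devices[i]->name, name) == 0)
            return registered_devices[i];
    }
    return NULL;
}
```

In this sketch, if only a `"CPU"` device has been registered, `find_device_via_name("TIMVX")` returns `NULL` and the caller would take the "Device(%s) is not found" path. Under that assumption, it may be worth checking whether the build actually enabled and linked the TIM-VX backend, and whether the device name passed in matches the registered name exactly.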

Could you share any input on what might be missing in this case?