Ok, that makes sense, but it still doesn’t make any detections. I have a sample test image that I know should generate a single detection from the model.

I first reconverted the model with the new mean values using this command:

./convert --model-name pose_densenet121_body --platform onnx --model ~/src/khadas/pose_densenet121_body.onnx --mean-values '0 0 0 0.0039215686' --quantized-dtype asymmetric_affine --source-files mjdataset.txt --batch-size 1 --iterations 1 --kboard VIM3 --print-level 0 --inputs input --outputs "'cmap paf'" --input-size-list "'3, 256, 256'"
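For reference, this is how I understand the `--mean-values` argument. It is a sketch under my own assumption that the converter applies `(pixel - mean) * scale` with the string given as `'mean_r mean_g mean_b scale'`; I have not confirmed that against the tool itself. The helper `normalize` is hypothetical and only there to check the arithmetic against the `/ 255.0` used in my scripts.

```python
# Assumption: '--mean-values' is 'mean_r mean_g mean_b scale' and the
# converter computes (pixel - mean) * scale for each channel.
mean_values = "0 0 0 0.0039215686"
*means, scale = (float(v) for v in mean_values.split())


def normalize(pixel, mean, scale):
    """Hypothetical helper mirroring the assumed converter normalization."""
    return (pixel - mean) * scale


# Compare against the explicit division by 255.0 over every 8-bit pixel value.
max_diff = max(abs(normalize(p, means[0], scale) - p / 255.0) for p in range(256))
print(f"scale={scale}, 1/255={1 / 255:.10f}, max difference={max_diff:.2e}")
```

If that assumption holds, the scale in the command matches dividing by 255 to within rounding of the constant.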

I ran the original ONNX model with onnxruntime using the following Python code:

#!/usr/bin/python3

import onnxruntime
import cv2
import numpy as np

def postprocess_pose(output_heatmaps, output_pafs, threshold=0.1):
    """
    Postprocesses the output of a pose estimation model to extract keypoints and skeletons.

    Args:
        output_heatmaps (np.ndarray): Heatmaps output from the model.
        output_pafs (np.ndarray): PAFs output from the model.
        threshold (float): Confidence threshold for keypoint detection.

    Returns:
        list: List of detected poses, where each pose is a list of keypoints
        (x, y, confidence).
    """
    num_keypoints = output_heatmaps.shape[1]
    heatmaps = np.squeeze(output_heatmaps)
    pafs = np.squeeze(output_pafs)
    poses = []
    for kpt_idx in range(num_keypoints):
        # Find peaks in heatmap
        heatmap = heatmaps[kpt_idx]
        _, thresh = cv2.threshold(heatmap, threshold, 1, cv2.THRESH_BINARY)
        contours, _ = cv2.findContours(thresh.astype(np.uint8), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        for cnt in contours:
            M = cv2.moments(cnt)
            if M["m00"] != 0:
                cX = int(M["m10"] / M["m00"])
                cY = int(M["m01"] / M["m00"])
                confidence = heatmap[cY, cX]
                if confidence > threshold:
                    poses.append((cX, cY, confidence))
    return poses

session = onnxruntime.InferenceSession("pose_densenet121_body.onnx")
input_name = session.get_inputs()[0].name
output_name = session.get_outputs()[0].name
output_names = [output.name for output in session.get_outputs()]
print(f"{input_name} {output_names}")

image = cv2.imread("pose1.jpg")
image = cv2.resize(image, (256, 256)) # Resize to expected input dimensions
image = image.astype(np.float32) / 255.0 # Normalize pixel values
image = image.transpose((2, 0, 1)) # Change channel order to (C, H, W)
input_data = np.expand_dims(image, axis=0) # Add batch dimension

output = session.run(output_names, {input_name: input_data})
print(f"Output type={type(output)}")
print(f"Output Len={len(output)}")
print(output)
print(f"CMAP output shape={output[0].shape}")
print(f"PAFS output shape={output[1].shape}")

poses=postprocess_pose(output[0], output[1])
print(poses)

The output is:

input ['cmap', 'paf']

Output type=<class 'list'>

Output Len=2

[array([[[[-4.38258547e-04, 1.42941071e-05, -2.96796425e-05, ...,

-2.43663235e-05, 6.50559377e-05, -1.20143573e-04],

[ 5.52342226e-06, 4.34457361e-05, -5.59325690e-06, ...,

-4.82940595e-06, 6.06977210e-06, 6.93790425e-05],

[ 5.79954103e-05, 3.37156598e-05, -5.81956465e-06, ...,

-4.12151849e-06, -2.94739402e-06, 3.60250306e-05],

...,

[ 6.78446231e-05, 3.65443157e-05, -4.69990391e-06, ...,

-1.19122296e-06, 5.85721973e-05, 1.94626555e-04],

[ 2.72795907e-04, 2.05694487e-05, -1.18826492e-05, ...,

-3.42658495e-07, 4.78150287e-05, 2.60828179e-04],

[ 6.22820808e-04, 3.06129623e-05, -4.34198446e-05, ...,

2.43719078e-05, 2.06580531e-04, 6.61183672e-04]],

[[ 2.16633816e-05, 5.72611862e-05, 6.70433201e-06, ...,

7.77542391e-06, 8.08852201e-05, -5.52270736e-04],

[ 7.29129242e-05, 5.66046729e-05, 1.87763198e-05, ...,

2.18868518e-05, 2.58310865e-05, 3.64165135e-06],

[ 1.17881602e-04, 6.13801458e-05, 2.01291659e-05, ...,

2.17817633e-05, 2.15128603e-05, 3.94502968e-05],

...,

[ 1.62543962e-04, 4.55523623e-05, 1.97590944e-05, ...,

2.77979834e-05, 6.63646060e-05, 1.27297215e-04],

[ 3.54883523e-04, 3.64387306e-05, 1.74369889e-05, ...,

3.36093799e-05, 1.02052887e-04, 4.54059831e-04],

[ 3.91395035e-04, 4.44106518e-05, -7.40098621e-05, ...,

1.81446958e-05, 3.65421467e-04, 2.58796616e-04]],

[[-5.33516752e-04, -2.25007443e-05, 1.26433151e-05, ...,

-2.39886522e-05, -2.10368253e-05, -3.48687026e-05],

[ 6.13946668e-06, 4.81417592e-05, 1.75952955e-05, ...,

1.72941909e-05, 2.23786301e-05, 2.42734295e-05],

[ 5.27131197e-05, 3.40496881e-05, 1.68342776e-05, ...,

1.84594974e-05, 1.72041164e-05, 1.61031421e-04],

...,

[ 1.77219234e-04, 4.54067231e-05, 1.67203416e-05, ...,

1.88760223e-05, 4.59752409e-05, -9.34354830e-07],

[ 2.02952404e-04, 4.55703121e-05, 1.56401275e-05, ...,

3.07885421e-05, 6.52645540e-05, 2.62622663e-04],

[-8.19856214e-05, 9.59621684e-05, -1.20737765e-04, ...,

-6.67629720e-05, 1.82195854e-05, -4.52700770e-04]],

...,

[[-3.16275720e-04, 6.69998553e-05, 8.68840725e-05, ...,

1.36585004e-04, 1.88858510e-04, 5.95541671e-04],

[-4.13454909e-05, 3.95883799e-05, 4.08163542e-05, ...,

1.56171227e-05, 8.02815484e-05, 6.98445074e-05],

[-8.97708378e-06, -8.77945513e-06, -7.17432704e-06, ...,

-1.33917192e-05, -3.48519916e-06, 5.40822548e-05],

...,

[-6.49168651e-05, 1.66437894e-05, -1.42672479e-05, ...,

-3.47545210e-07, 3.73968105e-05, -7.99871050e-05],

[ 1.17308555e-05, -1.44123187e-05, -1.26414243e-05, ...,

1.20882942e-06, -3.43281499e-06, -8.54631508e-05],

[-3.88355169e-04, -1.82456461e-06, -3.74960800e-05, ...,

6.66807864e-06, -7.68914324e-05, -8.76843755e-04]],

[[-4.45454469e-04, 8.64812027e-05, 1.85020526e-05, ...,

7.76505476e-05, 1.58696843e-04, -3.87071341e-05],

[ 1.63681194e-04, 3.12959310e-05, -6.69378096e-06, ...,

-1.20192735e-05, 3.32272539e-05, 4.21607052e-04],

[-2.73062524e-05, -2.17704564e-05, -2.32534476e-05, ...,

-2.47958815e-05, -9.16034332e-06, -5.18101297e-06],

...,

[-1.98033867e-05, -1.57800860e-05, -2.73654259e-05, ...,

-2.35437237e-05, -1.54106074e-05, -1.97953545e-04],

[-9.70092660e-05, -2.32342300e-05, -2.63364309e-05, ...,

-2.03803193e-05, -1.10458896e-05, -9.41137550e-05],

[-2.49945442e-04, -6.91625100e-05, -7.54482098e-05, ...,

-6.88704458e-05, -1.54247991e-05, -6.87016174e-04]],

[[ 1.25692226e-04, 2.84862144e-05, 2.96489961e-05, ...,

5.83393085e-05, 7.65087025e-05, -3.30271178e-05],

[ 1.98257796e-04, 5.98743754e-05, -4.33488367e-06, ...,

-4.91459014e-06, -5.74451406e-06, 6.39905047e-05],

[ 5.48749776e-05, 5.82853863e-05, -6.03009175e-06, ...,

1.65152778e-06, -1.01725109e-05, 3.11455078e-05],

...,

[ 4.54043147e-05, 1.41492310e-05, -2.35322659e-07, ...,

-1.29708405e-05, 1.82312506e-05, 6.47778070e-05],

[ 2.58017099e-04, 1.07355561e-04, 1.02606755e-05, ...,

1.31050074e-05, 1.26958239e-05, -5.21557631e-05],

[ 5.81798551e-04, 2.13615094e-05, -7.83945579e-05, ...,

1.19052245e-04, -6.44724205e-05, 3.56668716e-05]]]],

dtype=float32), array([[[[ 6.81468926e-04, 8.30375939e-06, -7.16405484e-05, ...,

1.28261527e-05, -7.01578028e-05, 6.41877239e-04],

[-3.26606387e-04, -2.69025040e-04, 1.62706157e-04, ...,

4.02966834e-05, -2.34022096e-04, -3.54795193e-04],

[-6.93092516e-05, -6.89344888e-05, -6.78861034e-05, ...,

-8.85761910e-05, -1.13957940e-04, -5.51639496e-05],

...,

[-3.07946393e-05, -1.08405038e-05, -4.56886846e-05, ...,

-3.58929246e-05, 1.40899327e-04, 1.01967671e-05],

[-4.30289656e-05, -6.48865389e-05, -6.48854402e-05, ...,

-7.41075055e-05, -6.41715960e-05, -2.24139163e-04],

[-1.14201056e-03, 3.34838842e-06, 3.15008656e-05, ...,

-2.31472986e-05, -4.53194953e-05, 9.57547629e-04]],

[[-2.92814511e-04, -3.31833435e-05, -8.33570539e-06, ...,

1.02068771e-06, -1.57782670e-05, 7.47047452e-05],

[-2.45611045e-05, -2.11868391e-05, 1.96279943e-05, ...,

6.99556949e-06, -9.04299486e-06, -3.34338656e-05],

[-1.53204310e-05, -3.82930284e-06, -2.91912284e-06, ...,

3.43414149e-06, 1.06314628e-05, 5.68490213e-06],

...,

[ 2.66103280e-06, 1.43032867e-06, -1.97261170e-06, ...,

-8.95269295e-07, 1.67372418e-05, 4.88224759e-06],

[-2.51190468e-05, -8.68001553e-06, -5.41173813e-06, ...,

-8.03459898e-07, -1.07922842e-05, -2.34125946e-05],

[-2.80420849e-04, -7.76202069e-06, -7.35341837e-06, ...,

-9.16753925e-06, -4.62116077e-05, -3.62043502e-04]],

[[-6.11959433e-04, -2.79179105e-04, -2.24991498e-04, ...,

-1.00047422e-04, -1.03330549e-05, -5.51681151e-04],

[-1.91685467e-04, -2.98391333e-05, -2.74827980e-05, ...,

-2.99587628e-05, -8.94658806e-05, -4.12231602e-05],

[-5.25284122e-05, -2.37871373e-05, -3.44281107e-05, ...,

-8.88461727e-05, -1.51471002e-04, -9.60764592e-05],

...,

[-1.00278696e-04, -2.83212557e-05, -2.85250153e-05, ...,

-2.83314548e-05, -2.78153602e-05, -2.28077897e-05],

[ 1.51940967e-05, -1.70032108e-05, -2.53161688e-05, ...,

-7.14475827e-05, -1.52784978e-05, -2.35756932e-04],

[-6.27330563e-04, 1.06265179e-06, 1.44480164e-05, ...,

-3.42344341e-04, -5.26957738e-04, -4.86333825e-04]],

...,

[[-4.83533251e-04, 1.46470426e-04, -2.49106688e-06, ...,

-4.12518875e-06, -4.98606423e-05, -2.26729717e-05],

[ 2.63934809e-04, 1.19048609e-05, 7.57502539e-06, ...,

1.14361837e-05, 1.99962378e-05, -7.52096603e-05],

[-8.64601461e-05, 1.78132832e-05, 1.46616476e-05, ...,

1.92745574e-05, 2.67112064e-05, 1.55227579e-04],

...,

[ 1.60837211e-04, 1.20368513e-05, 1.21119265e-05, ...,

1.17347981e-05, 1.05395648e-05, -5.07147015e-05],

[-5.24510921e-04, 1.31911547e-05, 1.33498015e-05, ...,

1.29748814e-05, 1.39230124e-05, -2.21359165e-04],

[ 8.05606076e-04, 1.06572596e-04, 1.28883417e-04, ...,

3.73878465e-05, 3.11715237e-04, 5.61388268e-04]],

[[-4.52847380e-05, 1.16553507e-03, -2.00211332e-04, ...,

1.73310807e-04, -5.23059105e-04, -2.21532700e-03],

[ 1.72093336e-04, 4.24333120e-06, 2.48589204e-05, ...,

-3.30561525e-05, 9.56717777e-05, -4.14871174e-04],

[-1.68121711e-04, -5.97650796e-07, -3.12069023e-05, ...,

1.13616443e-04, 3.23493790e-04, 5.98082028e-04],

...,

[-1.55922840e-04, -4.02975929e-05, -4.58366339e-05, ...,

-4.52321910e-05, -2.90891203e-05, -1.46856910e-04],

[-3.81018908e-04, -4.94033084e-05, -4.17494521e-05, ...,

-4.52931126e-05, -2.60552624e-05, 1.11810550e-04],

[-1.42595312e-03, -4.17845469e-04, 1.84095552e-04, ...,

-1.09522475e-03, -1.00946857e-03, -9.14987701e-04]],

[[-4.52920329e-04, 3.13173368e-05, -4.23565216e-05, ...,

-6.42458544e-05, 1.92667048e-05, 4.91063809e-04],

[-8.21880676e-05, -2.51202764e-05, -2.43366740e-05, ...,

-3.22823485e-06, -3.70852249e-05, 3.09521056e-05],

[ 5.64269476e-06, -5.71718601e-06, 2.08367805e-06, ...,

-3.31687916e-05, -1.10432135e-04, 3.49664842e-05],

...,

[-1.20726647e-04, 4.25169532e-07, -2.70589936e-07, ...,

-5.78260824e-07, -3.74405090e-06, -5.50971345e-05],

[-5.06536511e-04, -8.04895535e-07, 1.71349734e-06, ...,

-8.92751905e-06, -5.16608861e-06, -5.02192881e-04],

[-1.13720278e-04, -2.44772295e-04, -2.25195021e-04, ...,

-1.42401348e-06, 1.24353595e-04, -1.15157120e-04]]]],

dtype=float32)]

CMAP output shape=(1, 18, 64, 64)

PAFS output shape=(1, 42, 64, 64)

[(31, 11, 0.6139334), (32, 10, 0.7885559), (31, 10, 0.6215377), (32, 11, 0.43233508), (30, 11, 0.57079566), (34, 18, 0.6081526), (29, 18, 0.66460234), (36, 26, 0.7507798), (28, 25, 0.64223593), (33, 30, 0.2270495), (29, 30, 0.56159514), (33, 33, 0.35248762), (30, 33, 0.5204712), (34, 44, 0.44216946), (29, 45, 0.47740406), (35, 57, 0.32568738), (28, 58, 0.5527475), (32, 17, 0.52170956)]

At the end you can see a single pose (18 keypoints, matching the 18 heatmap channels) extracted from the model output. I then ran the converted model with KSNN using the following Python code:

#!/usr/bin/python3

import numpy as np

import os

import argparse

import sys

from ksnn.api import KSNN

from ksnn.types import *

import cv2

import time

import ctypes

def postprocess_pose(output_heatmaps, output_pafs, threshold=0.1):

"""

Postprocesses the output of a pose estimation model to extract keypoints and skeletons.

Args:

output_heatmaps (np.ndarray): Heatmaps output from the model.

output_pafs (np.ndarray): PAFs output from the model.

threshold (float): Confidence threshold for keypoint detection.

Returns:

list: List of detected poses, where each pose is a list of keypoints

(x, y, confidence).

"""

num_keypoints = output_heatmaps.shape[1]

heatmaps = np.squeeze(output_heatmaps)

pafs = np.squeeze(output_pafs)

poses = []

for kpt_idx in range(num_keypoints):

# Find peaks in heatmap

heatmap = heatmaps[kpt_idx]

_, thresh = cv2.threshold(heatmap, threshold, 1, cv2.THRESH_BINARY)

contours, _ = cv2.findContours(thresh.astype(np.uint8), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

for cnt in contours:

M = cv2.moments(cnt)

if M["m00"] != 0:

cX = int(M["m10"] / M["m00"])

cY = int(M["m01"] / M["m00"])

confidence = heatmap[cY, cX]

if confidence > threshold:

poses.append((cX, cY, confidence))

return poses

model = KSNN('VIM3')

print(' |--- KSNN Version: {} +---| '.format(model.get_nn_version()))

model.nn_init(library='model/libnn_pose_densenet121_body.so', model='model/pose_densenet121_body.nb', level=1)

image = cv2.imread("pose1.jpg")

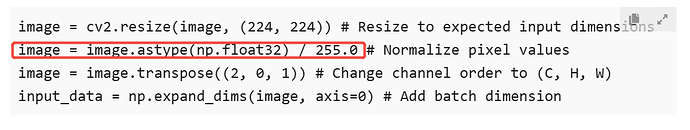

image = cv2.resize(image, (256, 256)) # Resize to expected input dimensions

image = image.astype(np.float32) / 255.0 # Normalize pixel values

image = image.transpose((2, 0, 1)) # Change channel order to (C, H, W)

input_data = np.expand_dims(image, axis=0) # Add batch dimension

start = time.time()

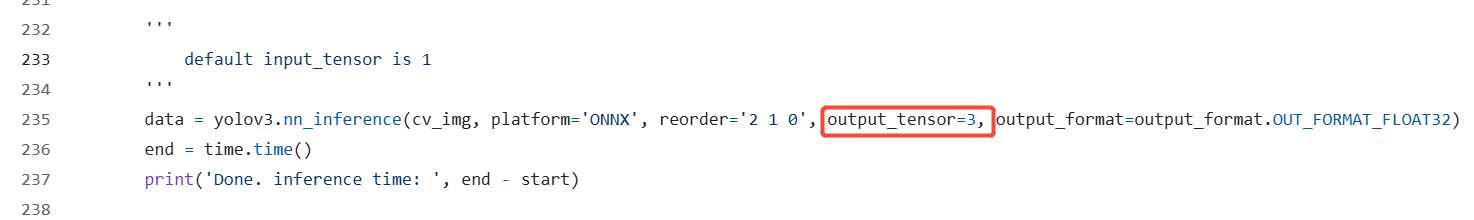

outputs = model.nn_inference(input_data, platform = 'ONNX', reorder='2 1 0', output_tensor=2, output_format=output_format.OUT_FORMAT_FLOAT32)

end = time.time()

print('Done. inference time: ', end - start)

print(f"Output type={type(outputs)}")

print(f"Output Len={len(outputs)}")

print(outputs)

print(f"CMAP output shape={outputs[0].shape}")

print(f"PAFS output shape={outputs[1].shape}")

heatmaps=outputs[0].reshape((1,18,64,64))

pafs=outputs[1].reshape((1,42,64,64))

print(f"Reshaped CMAP output={heatmaps.shape}")

print(f"Reshaped PAFS output={pafs.shape}")

poses=postprocess_pose(heatmaps,pafs)

print(poses)

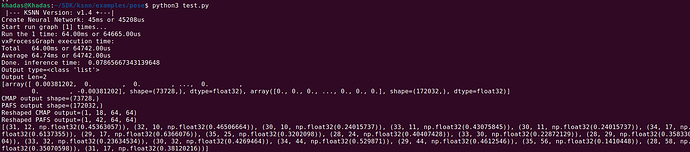

and the output is:

|--- KSNN Version: v1.4 +---|

Create Neural Network: 22ms or 22191us

Start run graph [1] times...

Run the 1 time: 63.00ms or 63055.00us

vxProcessGraph execution time:

Total 63.00ms or 63129.00us

Average 63.13ms or 63129.00us

Done. inference time: 0.15249395370483398

Output type=<class 'list'>

Output Len=2

[array([-0.00026079, 0.00013039, 0. , ..., 0. ,

-0.00039118, -0.00013039], dtype=float32), array([ 0. , 0. , 0. , ..., 0. ,

0. , -0.00281812], dtype=float32)]

CMAP output shape=(73728,)

PAFS output shape=(172032,)

Reshaped CMAP output=(1, 18, 64, 64)

Reshaped PAFS output=(1, 42, 64, 64)

[]

The pre- and post-processing code is identical in both scripts. The only real difference is that KSNN returns the converted model's outputs as flat one-dimensional arrays rather than in the expected shape, so I reshape them to (1, 18, 64, 64) and (1, 42, 64, 64) and then pass them to the same post-processing function used in the ONNX script. The output from the converted model is noticeably different, and the detections list is empty.
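To make the comparison between the two runs more concrete, something like the sketch below could be dropped into both scripts. The helpers `reshape_flat` and `summarize` are hypothetical names of my own, the arrays here are random placeholders rather than real model output, and the reshape assumes the flat KSNN buffer is laid out in (N, C, H, W) order, which I have not verified.

```python
import numpy as np


def reshape_flat(flat, shape):
    """Reshape a flat output to `shape`, checking the element count first."""
    expected = int(np.prod(shape))
    if flat.size != expected:
        raise ValueError(f"got {flat.size} elements, expected {expected} for {shape}")
    return flat.reshape(shape)


def summarize(name, arr, threshold=0.1):
    """Print and return the value range and the count of values above threshold."""
    stats = {
        "min": float(arr.min()),
        "max": float(arr.max()),
        "above_threshold": int((arr > threshold).sum()),
    }
    print(f"{name}: {stats}")
    return stats


# Placeholder data only. In the real scripts these would be output[0] from
# onnxruntime and outputs[0] from KSNN.
rng = np.random.default_rng(0)
onnx_cmap = rng.random((1, 18, 64, 64), dtype=np.float32)
ksnn_flat = onnx_cmap.ravel() * 0.01  # stand-in for a flat KSNN output

ksnn_cmap = reshape_flat(ksnn_flat, (1, 18, 64, 64))
onnx_stats = summarize("onnx cmap", onnx_cmap)
ksnn_stats = summarize("ksnn cmap", ksnn_cmap)
```

Printing the maximum and the number of values above the 0.1 threshold for each runtime shows directly whether any heatmap value can pass the post-processing step.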

The mean values should now be correct, and the pre- and post-processing code works, as shown by the run with the original ONNX model, but the KSNN-converted model still produces no detections.
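One more observation from the KSNN output printed above: the nonzero CMAP values look like integer multiples of roughly 0.00013039. The check below uses only those printed values. Reading that step as the quantization step of the output tensor, and assuming the tensor has 8-bit (256-level) resolution, are both guesses on my part.

```python
# CMAP values copied from the KSNN output printed above.
printed = [-0.00026079, 0.00013039, -0.00039118, -0.00013039]

# Smallest magnitude among the printed values, taken as a candidate step size.
step = min(abs(v) for v in printed)
multiples = [v / step for v in printed]

# Assumption: an 8-bit quantized tensor, so at most 255 steps between min and max.
span = 255 * step
print(f"step={step}, multiples={[round(m, 3) for m in multiples]}, 8-bit span={span:.5f}")
```

If both guesses are right, the whole representable range of the heatmap would be narrower than the 0.1 threshold used in the post-processing, which would be consistent with the empty detections list.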

Do you know what else I should be checking? I really appreciate the help so far.