@enggsajjad If the verification fails, it means that your .so file and your .nb file do not match.

You mean libnn_yolo_v3.so? After generation, I copy the *.c and *.h files (as described on the documentation page) into detect_yolo_v3, build the .so file, and then copy this .so and the .nb file into the lib and nn_data folders of detect_demo_picture. I don't know what is wrong here.

@enggsajjad If you are using the official cfg and weights, then the files you converted should be the same as those in the demo repository. Have you compared the C files? Is there a difference?
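Before diffing whole files, it can help to check which ACUITY version stamped each generated file, because sources from one tool version and an .nb exported by another may not belong together. The helper below is a minimal sketch: `acuity_stamps` is a hypothetical name, and it only assumes the ` * Generated by ACUITY <version>` header line that appears in the diffs later in this thread.

```shell
#!/bin/bash
# Print "<file name> <ACUITY version>" for each generated source file.
# Hypothetical helper: relies only on the "Generated by ACUITY <version>"
# header comment that the conversion tool writes at the top of each file.
acuity_stamps() {
    local f
    for f in "$@"; do
        # Take the first matching line and strip everything up to the version.
        printf '%s %s\n' "$(basename "$f")" \
            "$(grep -m1 'Generated by ACUITY' "$f" \
                | sed 's/.*Generated by ACUITY[[:space:]]*//')"
    done
}

# Example call (paths are placeholders, adjust to your checkout):
# acuity_stamps detect_yolo_v3/*.c detect_yolo_v3/include/*.h
```

If the files in one model_code directory report different versions, that directory mixes output from more than one conversion run.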

@Frank I generated the diffs for both sets of cfg and weights (the one you suggested and the one from the darknet website, YOLO: Real-Time Object Detection).

Using your suggested cfg and weights

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/vnn_yolov3.c hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/vnn_yolov3.c

2,3c2,3

< * Generated by ACUITY 6.0.12

< * Match ovxlib 1.1.34

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

31c31

< memset( _attr.size, 0, VSI_NN_MAX_DIM_NUM * sizeof(vsi_size_t));\

---

> memset( _attr.size, 0, VSI_NN_MAX_DIM_NUM * sizeof(uint32_t));\

151,152d150

< vsi_bool inference_with_nbg = FALSE;

< char* pos = NULL;

163d160

< memset( &node, 0, sizeof( vsi_nn_node_t * ) * NET_NODE_NUM );

172,177d168

< pos = strstr(data_file_name, ".nb");

< if( pos && strcmp(pos, ".nb") == 0 )

< {

< inference_with_nbg = TRUE;

< }

<

211,212d201

< if( !inference_with_nbg )

< {

228,235d216

< }

< else

< {

< NEW_VXNODE(node[0], VSI_NN_OP_NBG, 1, 3, 0);

< node[0]->nn_param.nbg.type = VSI_NN_NBG_FILE;

< node[0]->nn_param.nbg.url = data_file_name;

<

< }

283,284d263

< if( !inference_with_nbg )

< {

300,308d278

< }

< else

< {

< node[0]->input.tensors[0] = norm_tensor[0];

< node[0]->output.tensors[0] = norm_tensor[1];

< node[0]->output.tensors[1] = norm_tensor[2];

< node[0]->output.tensors[2] = norm_tensor[3];

<

< }

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_pre_process.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_pre_process.h

2,3c2,3

< * Generated by ACUITY 5.11.0

< * Match ovxlib 1.1.21

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

78c78

< const vsi_nn_preprocess_map_element_t * vnn_GetPrePorcessMap();

---

> const vsi_nn_preprocess_map_element_t * vnn_GetPreProcessMap();

80c80

< uint32_t vnn_GetPrePorcessMapCount();

---

> uint32_t vnn_GetPreProcessMapCount();

84a85

>

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_post_process.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_post_process.h

2,3c2,3

< * Generated by ACUITY 5.11.0

< * Match ovxlib 1.1.21

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

16c16

< const vsi_nn_postprocess_map_element_t * vnn_GetPostPorcessMap();

---

> const vsi_nn_postprocess_map_element_t * vnn_GetPostProcessMap();

18c18

< uint32_t vnn_GetPostPorcessMapCount();

---

> uint32_t vnn_GetPostProcessMapCount();

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_yolov3.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_yolov3.h

2,3c2,3

< * Generated by ACUITY 6.0.12

< * Match ovxlib 1.1.34

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

20c20

< #define VNN_VERSION_PATCH 34

---

> #define VNN_VERSION_PATCH 30

khadas@Khadas-teco:~$

Using cfg and weights from darknet website

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/vnn_yolov3.c hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/vnn_yolov3.c

2,3c2,3

< * Generated by ACUITY 6.0.12

< * Match ovxlib 1.1.34

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

31c31

< memset( _attr.size, 0, VSI_NN_MAX_DIM_NUM * sizeof(vsi_size_t));\

---

> memset( _attr.size, 0, VSI_NN_MAX_DIM_NUM * sizeof(uint32_t));\

151,152d150

< vsi_bool inference_with_nbg = FALSE;

< char* pos = NULL;

163d160

< memset( &node, 0, sizeof( vsi_nn_node_t * ) * NET_NODE_NUM );

172,177d168

< pos = strstr(data_file_name, ".nb");

< if( pos && strcmp(pos, ".nb") == 0 )

< {

< inference_with_nbg = TRUE;

< }

<

211,212d201

< if( !inference_with_nbg )

< {

219,222c208,211

< input - [416, 416, 3, 1]

< output - [13, 13, 255, 1]

< [26, 26, 255, 1]

< [52, 52, 255, 1]

---

> input - [608, 608, 3, 1]

> output - [19, 19, 255, 1]

> [38, 38, 255, 1]

> [76, 76, 255, 1]

228,235d216

< }

< else

< {

< NEW_VXNODE(node[0], VSI_NN_OP_NBG, 1, 3, 0);

< node[0]->nn_param.nbg.type = VSI_NN_NBG_FILE;

< node[0]->nn_param.nbg.url = data_file_name;

<

< }

242,243c223,224

< attr.size[0] = 416;

< attr.size[1] = 416;

---

> attr.size[0] = 608;

> attr.size[1] = 608;

252,253c233,234

< attr.size[0] = 13;

< attr.size[1] = 13;

---

> attr.size[0] = 19;

> attr.size[1] = 19;

262,263c243,244

< attr.size[0] = 26;

< attr.size[1] = 26;

---

> attr.size[0] = 38;

> attr.size[1] = 38;

272,273c253,254

< attr.size[0] = 52;

< attr.size[1] = 52;

---

> attr.size[0] = 76;

> attr.size[1] = 76;

283,284d263

< if( !inference_with_nbg )

< {

300,308d278

< }

< else

< {

< node[0]->input.tensors[0] = norm_tensor[0];

< node[0]->output.tensors[0] = norm_tensor[1];

< node[0]->output.tensors[1] = norm_tensor[2];

< node[0]->output.tensors[2] = norm_tensor[3];

<

< }

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_pre_process.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_pre_process.h

2,3c2,3

< * Generated by ACUITY 5.11.0

< * Match ovxlib 1.1.21

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

78c78

< const vsi_nn_preprocess_map_element_t * vnn_GetPrePorcessMap();

---

> const vsi_nn_preprocess_map_element_t * vnn_GetPreProcessMap();

80c80

< uint32_t vnn_GetPrePorcessMapCount();

---

> uint32_t vnn_GetPreProcessMapCount();

84a85

>

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_post_process.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_post_process.h

2,3c2,3

< * Generated by ACUITY 5.11.0

< * Match ovxlib 1.1.21

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

16c16

< const vsi_nn_postprocess_map_element_t * vnn_GetPostPorcessMap();

---

> const vsi_nn_postprocess_map_element_t * vnn_GetPostProcessMap();

18c18

< uint32_t vnn_GetPostPorcessMapCount();

---

> uint32_t vnn_GetPostProcessMapCount();

khadas@Khadas-teco:~$ diff hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3/include/vnn_yolov3.h hussain/aml_npu_appwog/aml_npu_app/detect_library/model_code/detect_yolo_v3A/include/vnn_yolov3.h

2,3c2,3

< * Generated by ACUITY 6.0.12

< * Match ovxlib 1.1.34

---

> * Generated by ACUITY 5.21.1_0702

> * Match ovxlib 1.1.30

20c20

< #define VNN_VERSION_PATCH 34

---

> #define VNN_VERSION_PATCH 30

khadas@Khadas-teco:~$

I also tried with the recent SDK version, and my scripts are as follows:

0_import_model_416.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=${ACUITY_PATH}pegasus

if [ ! -e "$pegasus" ]; then

pegasus=${ACUITY_PATH}pegasus.py

fi

#Darknet

$pegasus import darknet \

--model /home/sajjad/sajjad/models-zoo/darknet/yolov3/yolov3/yolov3.cfg \

--weights /home/sajjad/sajjad/yolov3.weights \

--output-model ${NAME}.json \

--output-data ${NAME}.data

#generate inputmeta --source-file dataset.txt

$pegasus generate inputmeta \

--model ${NAME}.json \

--input-meta-output ${NAME}_inputmeta.yml \

--channel-mean-value "0 0 0 256" \

--source-file data/validation_tf_416.txt

# --source-file dataset.txt

1_quantize_model_416.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=${ACUITY_PATH}pegasus

if [ ! -e "$pegasus" ]; then

pegasus=${ACUITY_PATH}pegasus.py

fi

$pegasus quantize \

--quantizer dynamic_fixed_point \

--qtype int8 \

--rebuild \

--with-input-meta ${NAME}_inputmeta.yml \

--model ${NAME}.json \

--model-data ${NAME}.data

2_export_case_code_416.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=$ACUITY_PATH/pegasus

if [ ! -e "$pegasus" ]; then

pegasus=$ACUITY_PATH/pegasus.py

fi

$pegasus export ovxlib \

--model ${NAME}.json \

--model-data ${NAME}.data \

--model-quantize ${NAME}.quantize \

--with-input-meta ${NAME}_inputmeta.yml \

--dtype quantized \

--optimize VIPNANOQI_PID0X88 \

--viv-sdk ${ACUITY_PATH}vcmdtools \

--pack-nbg-unify

rm -rf ${NAME}_nbg_unify

mv ../*_nbg_unify ${NAME}_nbg_unify

cd ${NAME}_nbg_unify

mv network_binary.nb ${NAME}_88.nb

cd ..

#save normal case demo export.data

mkdir -p ${NAME}_normal_case_demo

mv *.h *.c .project .cproject *.vcxproj BUILD *.linux *.export.data ${NAME}_normal_case_demo

# delete normal_case demo source

#rm *.h *.c .project .cproject *.vcxproj BUILD *.linux *.export.data

#rm *.data *.quantize *.json *_inputmeta.yml

rm *.data *.json *_inputmeta.yml

validation_tf_416.txt

cat data/validation_tf_416.txt

./1080p-416x416.jpg

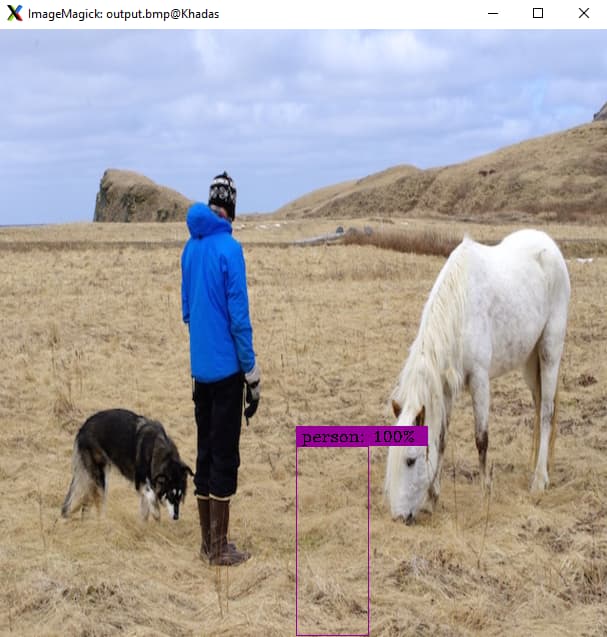

When I used these scripts to generate the .so and .nb files, the demo ran but gave wrong results, as shown in the attached image.

Steps:

detect_demo_picture$ sudo ./UNINSTALL

detect_demo_picture$ sudo ./INSTALL

detect_demo_picture$ ./detect_demo_x11 -m 2 -p ./1080p.bmp

@Frank Thanks for the responses.

I installed a new Khadas OS on another SD card and then cloned fresh copies of aml_npu_app and aml_npu_binaries. Then I copied over the .so and .nb files. YOLOv3 still cannot be run successfully. @Frank @numbqq @osos55

@enggsajjad

Please use the latest version of the SDK.

#!/bin/bash

#NAME=mobilenet_tf

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=${ACUITY_PATH}pegasus

if [ ! -e "$pegasus" ]; then

pegasus=${ACUITY_PATH}pegasus.py

fi

#Tensorflow

#$pegasus import tensorflow \

# --model ./model/mobilenet_v1.pb \

# --inputs input \

# --outputs MobilenetV1/Predictions/Reshape_1 \

# --input-size-list '224,224,3' \

# --output-data ${NAME}.data \

# --output-model ${NAME}.json

#generate inputmeta --source-file dataset.txt

#$pegasus generate inputmeta \

# --model ${NAME}.json \

# --input-meta-output ${NAME}_inputmeta.yml \

# --channel-mean-value "128 128 128 0.0078125" \

# --source-file dataset.txt

#Darknet

$pegasus import darknet \

--model ${NAME}.cfg \

--weights ${NAME}.weights \

--output-model ${NAME}.json \

--output-data ${NAME}.data

$pegasus generate inputmeta \

--model ${NAME}.json \

--input-meta-output ${NAME}_inputmeta.yml \

--channel-mean-value "0 0 0 0.003906" \

--source-file dataset.txt
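The only difference between this inputmeta step and the earlier 416 script is the fourth number of --channel-mean-value: 256 there, 0.003906 here. 0.003906 is 1/256 rounded, which would be consistent with the newer SDK applying the fourth value as a multiplier rather than a divisor. That reading is an assumption, not something confirmed in this thread. The sketch below (the `normalize` helper is hypothetical) shows what each setting would do to a white pixel under that assumption:

```shell
#!/bin/bash
# Normalize one pixel value as (pixel - mean) * scale.
# ASSUMPTION: the fourth number of --channel-mean-value is a multiplier.
normalize() {
    LC_ALL=C awk -v p="$1" -v m="$2" -v s="$3" \
        'BEGIN { printf "%.4f\n", (p - m) * s }'
}

# 1/256 rounded to six decimals gives the value used in the script above.
LC_ALL=C awk 'BEGIN { printf "%.6f\n", 1 / 256 }'   # 0.003906

normalize 255 0 0.003906   # 0.9960     -> input stays in roughly [0, 1]
normalize 255 0 256        # 65280.0000 -> far outside the range darknet expects
```

Under this reading, a scale of 256 would push inputs far out of range before quantization, which would explain a model that runs but detects the wrong things.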

Using these settings with the new image and the recent SDK, YOLOv3 worked for me with the following cfg and weights:

https://github.com/yan-wyb/models-zoo/blob/master/darknet/yolov3/yolov3/yolov3.cfg

https://pjreddie.com/media/files/yolov3.weights

while it does not work with the cfg and weights from:

git clone https://github.com/pjreddie/darknet

wget https://pjreddie.com/media/files/yolov3.weights

So doesn’t this solve your problem? You can compare the differences between the cfg files
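One difference that already shows up in the diffs earlier in this thread is the input resolution: 416x416 for the demo's model and 608x608 for the model built from the darknet repository cfg. A quick way to read that setting out of a cfg file is sketched below. `cfg_input_size` is a hypothetical helper, and it assumes the usual darknet layout where `width=` and `height=` appear as uncommented lines in the `[net]` section.

```shell
#!/bin/bash
# Print the network input size of a darknet cfg as "<width>x<height>".
# Hypothetical helper: takes the first uncommented width= and height= lines.
cfg_input_size() {
    local w h
    w=$(sed -n 's/^[[:space:]]*width[[:space:]]*=[[:space:]]*\([0-9]*\).*/\1/p' "$1" | head -n1)
    h=$(sed -n 's/^[[:space:]]*height[[:space:]]*=[[:space:]]*\([0-9]*\).*/\1/p' "$1" | head -n1)
    echo "${w}x${h}"
}

# Example call (paths are placeholders):
# cfg_input_size models-zoo/darknet/yolov3/yolov3/yolov3.cfg
# cfg_input_size darknet/cfg/yolov3.cfg
```

Running `diff` on the two cfg files afterwards shows any remaining differences beyond the input size.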

Yes, the problem is solved with the cfg and weights you provided.

Should I use these values for:

- Yolo-v3 with the cfg and weights from GitHub - pjreddie/darknet: Convolutional Neural Networks and https://pjreddie.com/media/files/yolov3.weights.
- Yolo-Tiny with the above cfg and weights.
- Yolo-Tiny with the cfg and weights you provided.

Please advise which values I should use for the --channel-mean-value parameter for Yolo-v3 and Yolo-Tiny with the different cfg/weights.

Thanks

For the cfg and weights from GitHub - pjreddie/darknet: Convolutional Neural Networks and https://pjreddie.com/media/files/yolov3.weights, my scripts are:

0_import_model_608A.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=${ACUITY_PATH}pegasus

if [ ! -e "$pegasus" ]; then

pegasus=${ACUITY_PATH}pegasus.py

fi

$pegasus import darknet \

--model /home/sajjad/sajjad/darknet/cfg/yolov3.cfg \

--weights /home/sajjad/sajjad/darknet/yolov3.weights \

--output-model ${NAME}.json \

--output-data ${NAME}.data

$pegasus generate inputmeta \

--model ${NAME}.json \

--input-meta-output ${NAME}_inputmeta.yml \

--channel-mean-value "0 0 0 0.003906" \

--source-file data/validation_tf_608.txt

1_quantize_model_608.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=${ACUITY_PATH}pegasus

if [ ! -e "$pegasus" ]; then

pegasus=${ACUITY_PATH}pegasus.py

fi

$pegasus quantize \

--quantizer dynamic_fixed_point \

--qtype int8 \

--rebuild \

--with-input-meta ${NAME}_inputmeta.yml \

--model ${NAME}.json \

--model-data ${NAME}.data

2_export_case_code_608.sh

#!/bin/bash

NAME=yolov3

ACUITY_PATH=../bin/

pegasus=$ACUITY_PATH/pegasus

if [ ! -e "$pegasus" ]; then

pegasus=$ACUITY_PATH/pegasus.py

fi

$pegasus export ovxlib \

--model ${NAME}.json \

--model-data ${NAME}.data \

--model-quantize ${NAME}.quantize \

--with-input-meta ${NAME}_inputmeta.yml \

--dtype quantized \

--optimize VIPNANOQI_PID0X88 \

--viv-sdk ${ACUITY_PATH}vcmdtools \

--pack-nbg-unify

This gives the wrong result for Yolo-V3.

Result (wrong):

khadas@Khadas:~/hussain/standalone_initial/newscripts/sample_demo_x11B/bin_r_cv4$ ./detect_demo_x11 -m 2 -w 608 -h 608 -p ~/hussain/img/608/person_608.jpg

det_set_log_config Debug

det_set_model success!!

model.width:608

model.height:608

model.channel:3

Det_set_input START

Det_set_input END

Det_get_result START

--- Top5 ---

32804: 9.750000

32785: 7.500000

32803: 6.750000

32805: 6.250000

32443: 6.000000

Det_get_result END

resultData.detect_num=1

result type is 2

i:1 left:297.166 right:370.855 top:417.737 bottom:608

Can you please advise what is wrong here? Thanks.

@Frank @numbqq

The post-processing functions of yolo tiny and yolo are different. In most cases, the problem in your picture is caused by the post-processing function handling the output data incorrectly. You will need to explore this part of the code yourself; it may help to understand the structure of the YOLO series models.
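As a starting point for that exploration, the sketch below lists the output tensor shapes a post-processing routine would have to handle. It assumes the stock COCO cfgs (80 classes, 3 anchors per detection head), where YOLOv3 has heads at strides 32, 16 and 8 and YOLOv3-tiny has heads at strides 32 and 16 only. `expected_outputs` is a hypothetical helper, not part of the demo code.

```shell
#!/bin/bash
# List expected output shapes as <width>x<height>x<channels>.
# ASSUMPTION: stock COCO cfgs, i.e. 80 classes and 3 anchors per head,
# so each head has 3 * (80 + 5) = 255 channels.
expected_outputs() {
    local model=$1 side=$2 strides stride channels
    case "$model" in
        yolov3)      strides="32 16 8" ;;   # three detection heads
        yolov3-tiny) strides="32 16" ;;     # two detection heads
        *) echo "unknown model: $model" >&2; return 1 ;;
    esac
    channels=$(( 3 * (80 + 5) ))
    for stride in $strides; do
        echo "$(( side / stride ))x$(( side / stride ))x${channels}"
    done
}

expected_outputs yolov3 416        # 13x13x255, 26x26x255, 52x52x255
expected_outputs yolov3 608        # 19x19x255, 38x38x255, 76x76x255
expected_outputs yolov3-tiny 416   # 13x13x255, 26x26x255
```

The yolov3 shapes match the `input`/`output` comments in the vnn_yolov3.c diffs earlier in this thread. If the post-processing code was written for one set of shapes and the converted model produces another, the decoded boxes will not be meaningful.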

No, I have not tried yolo-tiny yet. I was asking whether I should use the same scripts if I convert the yolo-tiny model.

The result I showed above was generated from YOLOv3, with the model converted from the official darknet cfg/weights, and as you can see it is not working. I am curious why it is not working. What should I change in the scripts?

This is important for me before I proceed to yolo-tiny model conversion and using it.

Thanks for the responses!

@Frank

I remember I gave you a cfg and weights file that you can use. I'm using a file from 2019; if the structure of the YOLO series has changed since then, the post-processing program also needs to be updated accordingly.

Yes, I know that and appreciate your help. Maybe I have not been able to explain it properly.

We are confused and trying to understand whether what is described on the page "convert and call your own model through NPU | Khadas Documentation" is out of date, and whether the procedure should actually be what we have discussed in this thread. Is that right?

One last question: if I have to convert the yolo-tiny model, which cfg and weights should I use? Please share them if you have a working set.

Thanks a lot!

@Frank

Got it!

--channel-mean-value "0 0 0 0.003906"

will remain the same for yolo-tiny model conversion in 0_import_model.sh?

I will run it and share the results with you.

Thanks a lot! Regards